Google has become synonymous with powerful search, incredible hardware, and quirky, fun technology. Unfortunately, that includes stretching the limits of privacy and a reputation for giving up on its product lines too soon. But these negatives notwithstanding, Google is at it again at its Google I/O event near its company headquarters in Mountain View, Calif., enticing developers and consumers alike with a number of new hardware products, software and services.

Yes, Google just revealed new Pixel phones, including the Pixel 6A and the Pixel 7. But those weren’t the coolest technologies Google showed off on Wednesday. The stuff below is even cooler.

(And for more coverage, check out our stories on Google’s new privacy controls, the new Pixel Watch, and the new Immersive View in Maps.)

Immersive View in Google Maps

Google Maps began life as a two-dimensional representation of streets and highways. Over time, Google Maps has added traffic (as reported by Google Phones), Google Earth (as recorded by satellites and low-flying planes), and Google Street View (imagery from cars and cameras). Now, Google has started putting it all together with Immersive View for Maps. Immersive View layers actual imagery on top of simulated buildings it creates itself.

Immersive View is the next generation of the 3D perspective that’s already in your Android phone—try zooming in on a major city, then tapping the “Layers” icon in the upper-right-hand corner, then the “3D” control… and you’ll see it’s pretty awful. It’s a sea of ghostly images superimposed on your phone’s screen at only a certain zoom level. But Immersive View looks like it will bring color and life back to the 3D world of Google Maps.

Ironically, there’s a decent version of Immersive View already available. Try opening the Maps application on your Windows 10/11 PC, zooming in on a city, then selecting the small angled grid.

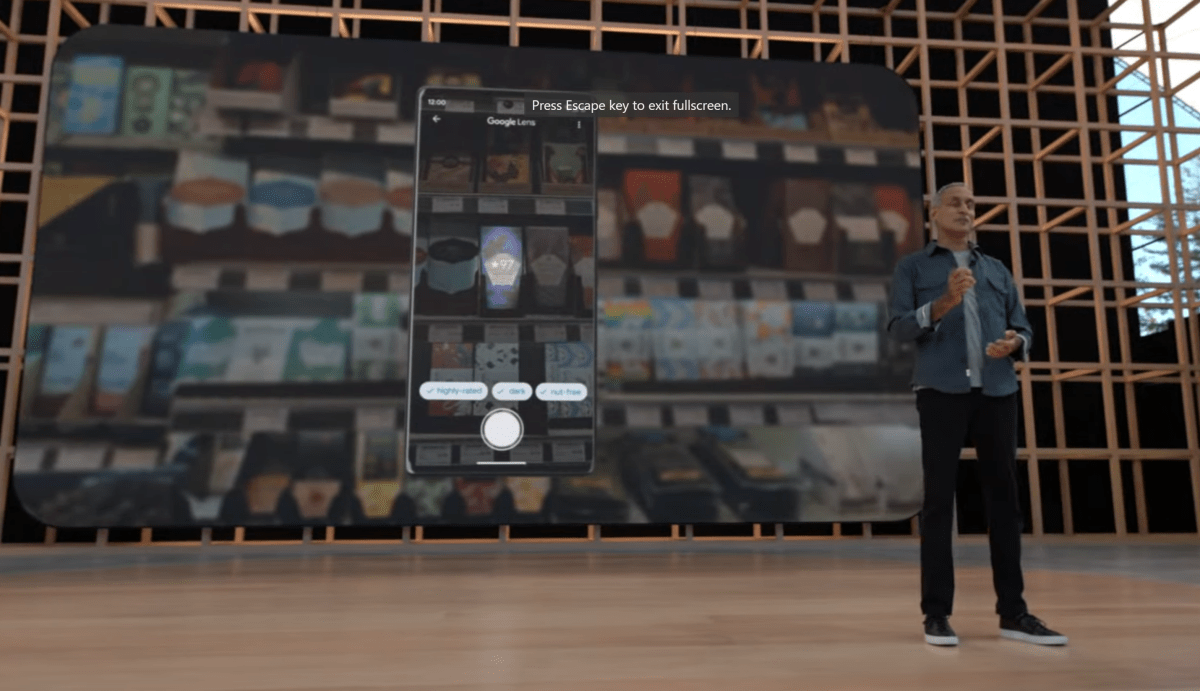

Google Search via Scene Exploration

Scene Exploration is the next iteration of Google Search, mimicking how you yourself visually search. Imagine walking through a grocery store, with your eyes scanning the shelves. On some level you know what those objects are, and possibly their relative worth and quality.

That’s how Scene Exploration will work: You’ll pan your smartphone camera over a scene, and Google will scan the various items and ping the Web for further information. The idea is that you’ll approach the scene with a filter in mind: scanning a shelf full of wine, for example, to find a well-rated vintage or a chardonnay that was made by a Black-owned winery.


Unfortunately, Google didn’t announce a timeframe on when Scene Exploration will become a reality.

Google Docs TL;DR Mode

Some of us have learned how to read and process information very quickly. Others not so much. And others simply don’t have the time to scrub through a story, let alone a couple hours of a YouTube video.

Using machine learning, automated summarization (or TL;DR Mode) will automatically pull out the key points of a document, providing a short summary of what’s being discussed. What Google showed off at Google I/O has incredible potential, though you might be a bit leery of running your company’s latest sales strategy document through Google’s AI. And while summarization is going to come to “other products within Google Workspace,” it will only be available for chat capabilities at first, “providing a helpful digest of chat conversations.” Expect TL;DR Mode to coordinate with Google’s automated transcription and translation services, which are being added to Google Meet.

Will TL;DR Mode ever be better than a curated executive summary? And will it work on PDF files? We’re excited and intrigued, but still a bit wary. And there’s no word yet on when this feature will roll out.

Is Google Glass back?

When Google killed Google Glass seven years ago, PCWorld wrote that it was down, not out. Apparently we were more prescient than we knew.

Google showed off an unnamed augmented-reality prototype at Google I/O with either extremely limited capabilities or an extremely focused perspective—how you see it is up to you. Either way, the new prototype (marked with PROTO-15 on the side of one demonstration model) is strictly focused on communication. Google Glass, with its focus on photos, video, and facial recognition, flopped hard. But the new Glass 2.0 simply listens for the voice of the person you’re speaking with and projects a transcript of the conversation on the inside of the glass screens.

Google positioned this new Glass with examples of an immigrant mother and daughter who spoke different languages, and of a man who spoke Spanish but no English. It’s hard to say what these glasses will become, or whether they’ll come to market at all. But even a “limited” version of Google Glass 2.0 will have utility. (A photo of what the new Glass might look like is at the very top of this article.)

Pixel Watch

When Google bought Fitbit last year, you could be forgiven for thinking the eventual fate of the popular activity tracker might be a repeat of Intel’s botched Basis buy. But Google appears to be serious about its acquisition, announcing and showing off the Pixel Watch after months of leaks and speculation. Fitbit technology will be baked right in.

The Pixel Watch will debut later this year, when Google will announce details like its price, battery life, and so on. On Wednesday, Google showed off features such as sleep tracking and continuous heart monitoring, which are table stakes for activity trackers that debuted years ago. To be fair, the company has yet to announce the full breadth of the Pixel Watch’s capabilities. We know, too, that Google intends that its smartwatch be more than just an exercise monitor, with payment and even home-control functions built in.

Samsung and Apple are the dominant players in smartwatches, with Fitbit and others providing more fitness-oriented bands. Can Google manage to pull off a unified device? We’ll have to wait until the Pixel Watch formally launches.