OpenAI said Tuesday that it has released an AI “classifier” for identifying text written by AI, such as its own ChatGPT. The problem? ChatGPT is pretty good at evading OpenAI’s new tool.

ChatGPT has absolutely overwhelmed academia, where students are using it as a virtual assistant of sorts for a variety of tasks. Unfortunately, some students are crossing the line and using it to create content that they pass off as their own original work. In other words, they’re cheating. The trouble is determining which answers were written by a human and which by an AI.

OpenAI’s classifier has one weakness, though: It’s somewhat easily fooled. In a press release, OpenAI said that on a “challenge set” of English texts, the classifier correctly identified only 26 percent of AI-authored text as “likely AI-written,” while it incorrectly labeled human-written text as AI-authored 9 percent of the time. In the first case, that means OpenAI’s tool would, on average, fail to catch roughly three out of every four people who used AI and failed to disclose it.
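Even the 9 percent false-positive figure adds up quickly in a classroom. The short Python sketch here is a back-of-the-envelope illustration, not anything OpenAI published: it assumes the reported rate carries over to real student essays and that each essay is judged independently of the others, and the class sizes are hypothetical.

```python
# Back-of-the-envelope math for the 9 percent false-positive rate OpenAI reported.
# Assumptions (ours, not OpenAI's): the rate carries over to real classroom essays,
# and each essay is judged independently of the others.
FALSE_POSITIVE_RATE = 0.09


def expected_false_flags(honest_essays: int, fp_rate: float = FALSE_POSITIVE_RATE) -> float:
    """Expected number of human-written essays wrongly labeled as AI-written."""
    return honest_essays * fp_rate


def chance_of_any_false_flag(honest_essays: int, fp_rate: float = FALSE_POSITIVE_RATE) -> float:
    """Probability that at least one honest essay in the batch is wrongly flagged."""
    return 1 - (1 - fp_rate) ** honest_essays


if __name__ == "__main__":
    # Hypothetical class sizes, chosen only for illustration.
    for class_size in (10, 30, 100):
        print(
            f"{class_size} honest essays: about {expected_false_flags(class_size):.1f} "
            f"wrongly flagged, {chance_of_any_false_flag(class_size):.0%} chance of at least one"
        )
```

For a 30-student class in which nobody cheated, that works out to about 2.7 wrongly flagged essays on average, and roughly a 94 percent chance that at least one honest student gets flagged.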

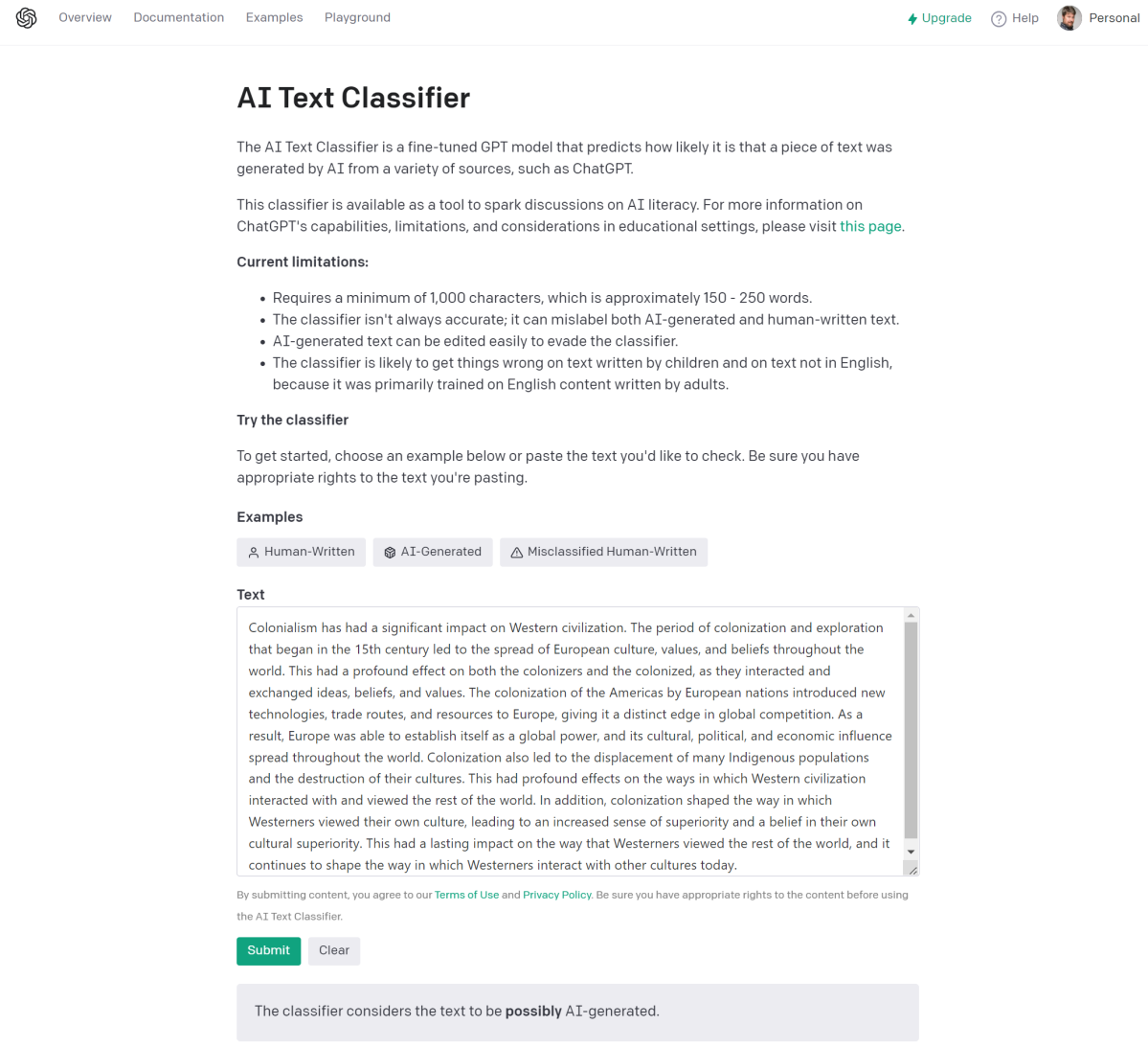

Unfortunately, the classifier also comes with a number of caveats. First, the more words, the better: With anything less than 1,000 characters, the tool has a good chance of getting it wrong, OpenAI said. Second, for now it’s meant only for English text, and it can’t reliably tell whether code was written by an AI or a human. Finally, AI-authored text can be edited to evade the classifier, OpenAI said.

“Our classifier is not fully reliable,” OpenAI says in its press release, in bold print.

In a quick test, the AI classifier couldn’t tell whether a random passage from The Catcher in the Rye by J.D. Salinger was AI-authored, but said that a similar passage from Lewis Carroll’s Alice in Wonderland was “very unlikely” to be AI generated. The classifier also passed a random article authored by our executive editor Brad Chacos (good job, boss). Unfortunately, ChatGPT was down when we initially tried to access it, but emerging search engine You.com, which integrates its own AI chatbot, served as another test platform.

You.com differs from ChatGPT in that it (now) cites its sources. When we gave its YouChat chatbot the prompt “Write an explanation of how colonialism has shaped Western civilization,” it cited Wikipedia, Britannica.com, and an honors seminar at the University of Tennessee. OpenAI’s classifier rated the answer “possibly AI-generated.” The classifier returned the same result for an AI-generated short story about an emu who could fly.

Later, though, we were able to generate a similar response to our colonialism question on ChatGPT. “The classifier considers the text to be unclear if it is AI-generated,” the classifier concluded.

A similar fiction test query on ChatGPT, “Write a short story about a dog who builds a rocket and flies to the moon,” also generated the same “unclear” response.

Our conclusion: Not only does OpenAI’s tool produce wishy-washy verdicts, but it also isn’t reliable enough to determine which results are AI-generated.

Forget OpenAI, and try Hive Moderation

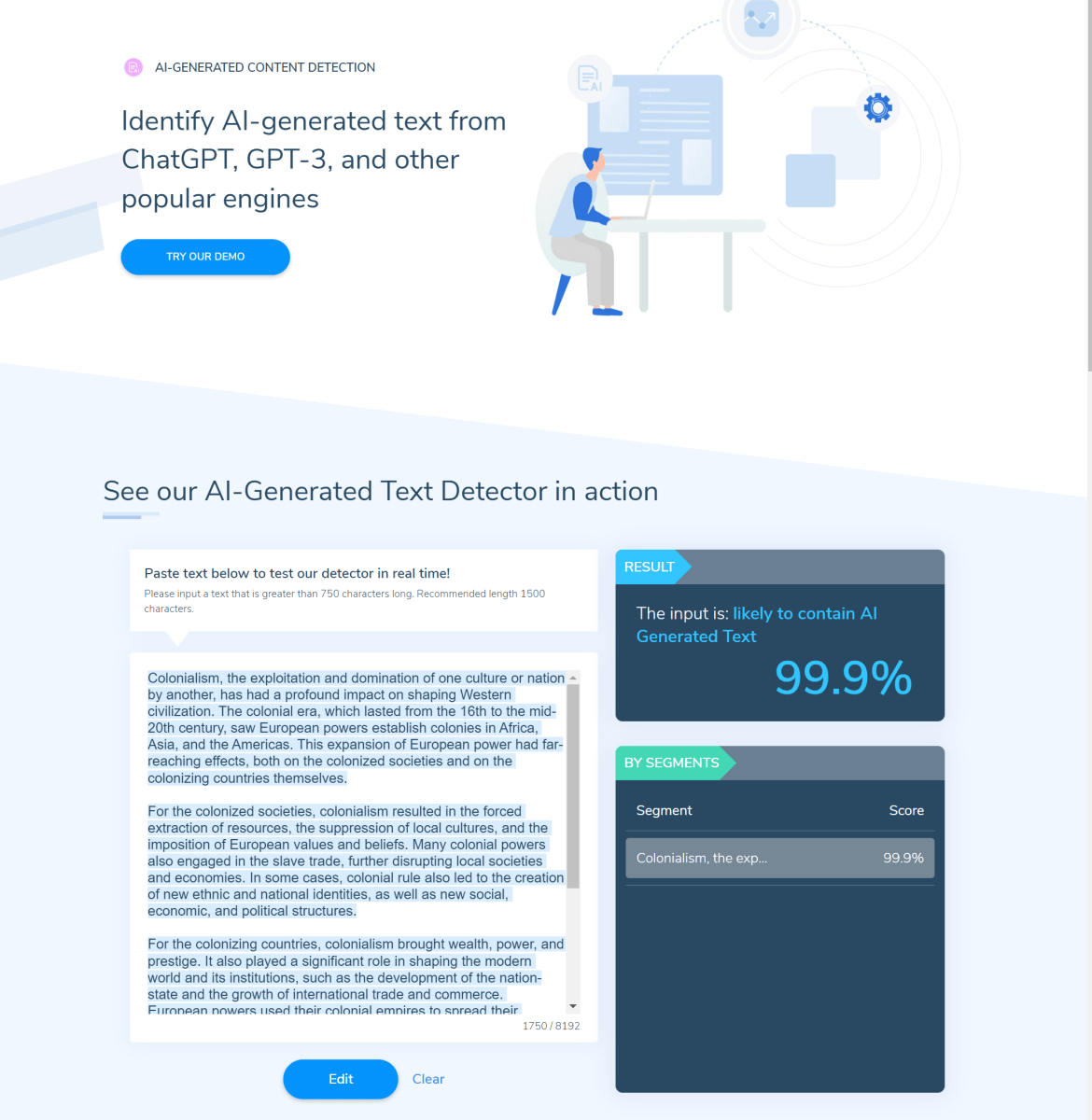

Interestingly, OpenAI isn’t the only game in town when it comes to AI detection. An ML engineer at Hive AI created Hive Moderation, a free AI detection tool that performs the same function, except this one seems to work. For one, Hive Moderation returns a confidence percentage: the likelihood that the text sample contains AI-generated text.

In our test of the colonialism question above (with answers from both ChatGPT and You.com), Hive Moderation said the text was “likely to contain AI text,” with a rock-solid 99.9 percent confidence score. The short story about the dog and his rocket generated the same 99.9 percent likelihood, too. As you’d expect, Hive Moderation gave a 0 percent likelihood that the Alice in Wonderland and Catcher in the Rye snippets were AI-generated. The Nvidia story on PCWorld.com also passed muster, with a 0 percent likelihood that it was written by an AI.

We even tried this prompt in ChatGPT: “Write an explanation of how colonialism has shaped Western civilization, but do it in a way that it’s not obvious an AI wrote it.” Nope—Hive Moderation caught that too.

In fact, in every test we threw at it, Hive Moderation was confident about which samples were AI-generated and which weren’t, and it was right every time. So while OpenAI’s “classifier” may be noteworthy because of its OpenAI lineage, Hive Moderation appears to be the early front-runner in detecting which text was written by an AI and which was not.