We all know Google as a powerhouse for search results when we’re entering text into a search engine, but the substantial improvements to Google Lens announced at Google I/O today effectively transform the AI service into a real-time search engine for the world around us—all with the help of on-board camera apps.

Here’s what we’re looking forward to the most.

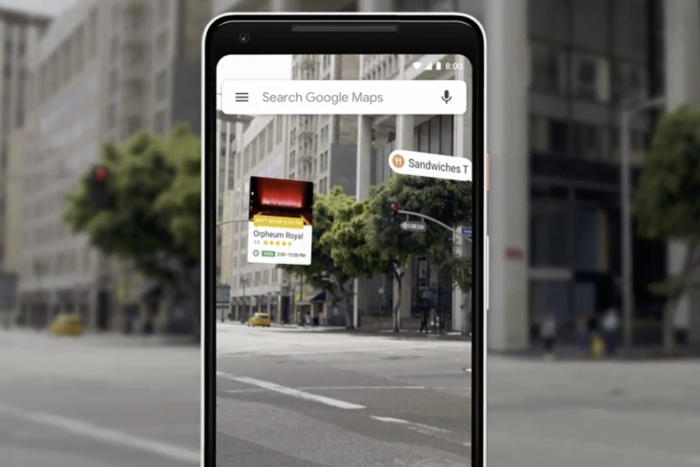

Augmented reality integration with Google Maps

Sometimes, especially in unfamiliar cities, it’s hard to tell which direction you’re facing, even when Google Maps shows you right where you’re standing.

Google

It’s also a good way to check out reviews for nearby places.

Soon, though, you’ll be able to activate your camera in Google Maps and see helpful contextual information for the scene you’re looking at in the real world. Simply raise your camera, aim it at a scene, and you’ll see information about various businesses around you, as well as the names of the streets. Not only will this make it easier to get your bearings, but it may even help you discover fun new restaurants you otherwise wouldn’t have noticed, even though they’re only a few steps away.

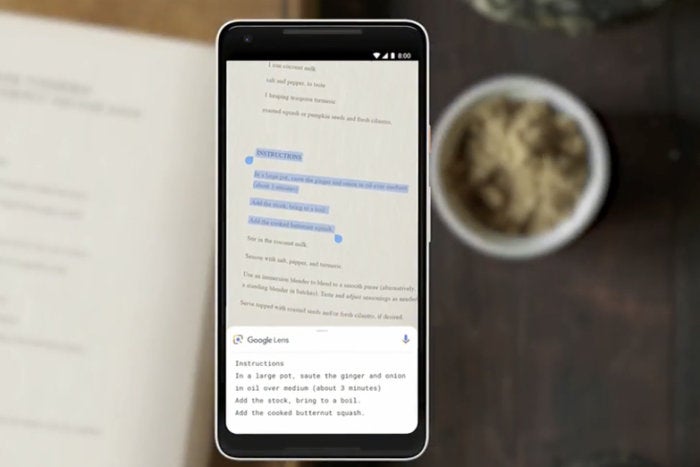

Smart text selection

Back when I was a graduate student, I often found myself wanting to easily copy text from books and plug that material into research papers as excerpts. It seems as though I was simply born too early.

To wit: One of Google Lens’ best new features lets you select and render text simply by aiming your camera at it, almost as if you’d used your mouse to select it on a regular monitor. Presumably—accounting for possible problems with line breaks and spacing—you then simply paste the text into a text message or document.

Google

Amazingly, you can even choose which specific text you want to select.

If this pans out as demo’d, it’s going to save so much time. For example, you could use Lens to capture the ingredients from a cookbook, and then send them via text to a friend buying groceries at the store. Or if you’re reading a menu written entirely in French, you could use Lens to get the precise definition for ris de veau, along with a photo of what the dish looks like as well as a description of its ingredients. This is information you need to know.

Granted, in some situations Google Lens technically adds an extra step since many of us are used to simply sending photos of printed text to friends anyway. But I could certainly see Lens being unbelievably useful for research cases, international travel, and many other scenarios.

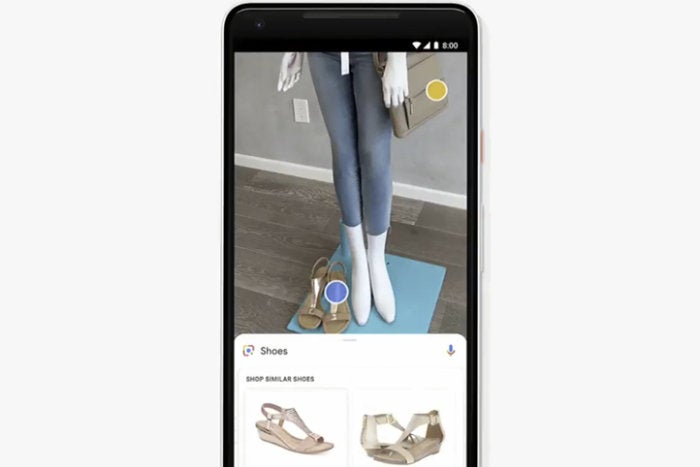

Style Match

I have a lamp beside my bed that I love, but I’ve had a rough time finding a matching lamp for the other nightstand. Thanks to Google Lens’ Style Match feature, that may no longer be a problem.

Google

There’s no more need to fret when your friend can’t remember the name of the shop where she bought her cool shoes.

With Style Match, you simply aim your camera at an item and it shows you suggestions for other items that look just like it. In one of the examples shown on stage, looking at a lamp with an intricate base brings up a Shopping search for “Lamp,” and the images Google delivers show different lamps with similarly intricate bases along with prices. And they all look quite similar to the lamp that started that search.

Google introduced a similar feature for regular Google Image Search last year, but being able to see results like this in real time through a camera app makes it significantly more useful.

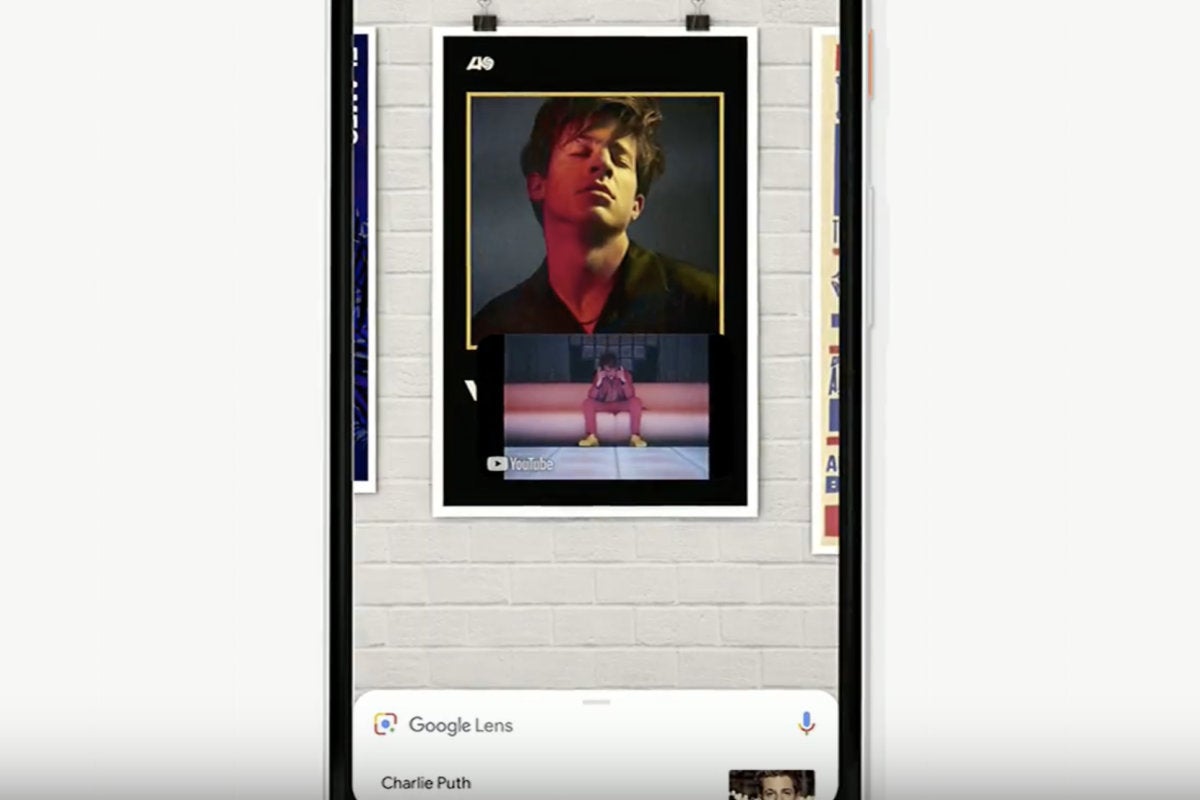

Real-time search results

As Google Lens works at the moment, you have to specifically choose an item on the display and wait for Google to bring up search results for you. But the updated Lens will give you search results related to objects you’re looking at in real time. That means that if I’m standing on the Michigan Avenue Bridge in Chicago, I can look over at the Wrigley Building to the left and get information about its height and history in one second, and then I can pan over to the right side of the street and get similar information about the Tribune Tower. It’s going to make solo tours so much more fun.

Google

All on one screen, you can see the poster in the real world, a music video, and further information about the artist.

And that’s not all, thanks to improvements in machine learning. If you’re aiming your camera lens at a concert poster, Google can start playing a music video by the featured artist. It’s taking WYSIWYG to a whole new level.

Support for third-party camera apps

Google Lens was originally available only on Pixel phones, but earlier this year Google extended support to all Android phones through the Photos app and Google Assistant. Soon, though, owners of some Android smartphones made by companies other than Google will get to enjoy the power of Lens straight through their native camera apps.

Google

Samsung and Huawei likely aren’t included because they already have their own AI camera features in their apps.

This move will extend to default third-party camera apps from Sony Mobile, HMD/Nokia, LGE, Xiaomi, Transsion, TCL, OnePlus, BQ, and Asus. (Samsung and Huawei already have their own AI camera features in their apps.) Here’s hoping it works well with all of them. Google Lens is an incredible service, and making it more easily available to everyone is a step in the right direction for a better internet.