It’s the start of a new era of competition. Today, Intel’s debut Arc A770 and A750 GPUs had their curtain drawn fully back, heralding the company’s long-teased entry into discrete consumer graphics cards. Watch out, Nvidia and AMD. Chipzilla’s in the fray now, fueled by its new Xe HPG (High-performance gaming) GPU architecture.

Intel took an unusual (but strategically smart) approach to Arc’s debut, initially rolling out Arc 3 graphics for modestly priced portable laptops, before introducing a similarly modest Arc A380 desktop GPU in China this summer. Doing so allowed Intel to leverage its substantial strengths in notebooks and software support rather than going blow-for-blow with Nvidia and AMD on the desktop, and let the company spend months providing some much-needed driver polish.

We’ve covered the Arc 3 laptop GPU reveal and Intel’s killer features in a separate piece that explains what everyday folks should expect from this new breed of laptop. And now, we know how Arc 7 desktop graphics cards perform as well. (Spoiler alert: Sometimes they smash, and sometimes they stutter, quite literally if you don’t have PCIe Resizable BAR enabled.)

That’s not the point of this article though. As part of the various reveals, Intel Fellow Tom Petersen gave the press a high-level overview of the Xe HPG architecture underpinning these Arc “Alchemist” graphics cards, providing a glimpse at the nuts and bolts powering Intel’s discrete graphics ambitions.

So, as we did with Nvidia’s Ampere and AMD’s RDNA 2 architectures, here’s a brief technical explainer on the innards of Intel Arc’s Xe HPG chips. Much the way Nvidia and AMD use different technologies and terminologies for their designs, Intel’s Arc chips rely on some proprietary concepts (including a new take on clock speeds that needs some explaining). That makes it difficult to compare Arc against rival GPU architectures—Intel doesn’t even use common terms like ROPs and TMUs—but by the time we’re done here, you’ll have a solid high-level understanding of what makes Xe HPG tick. Let’s dig in.

Meet Xe HPG

Intel

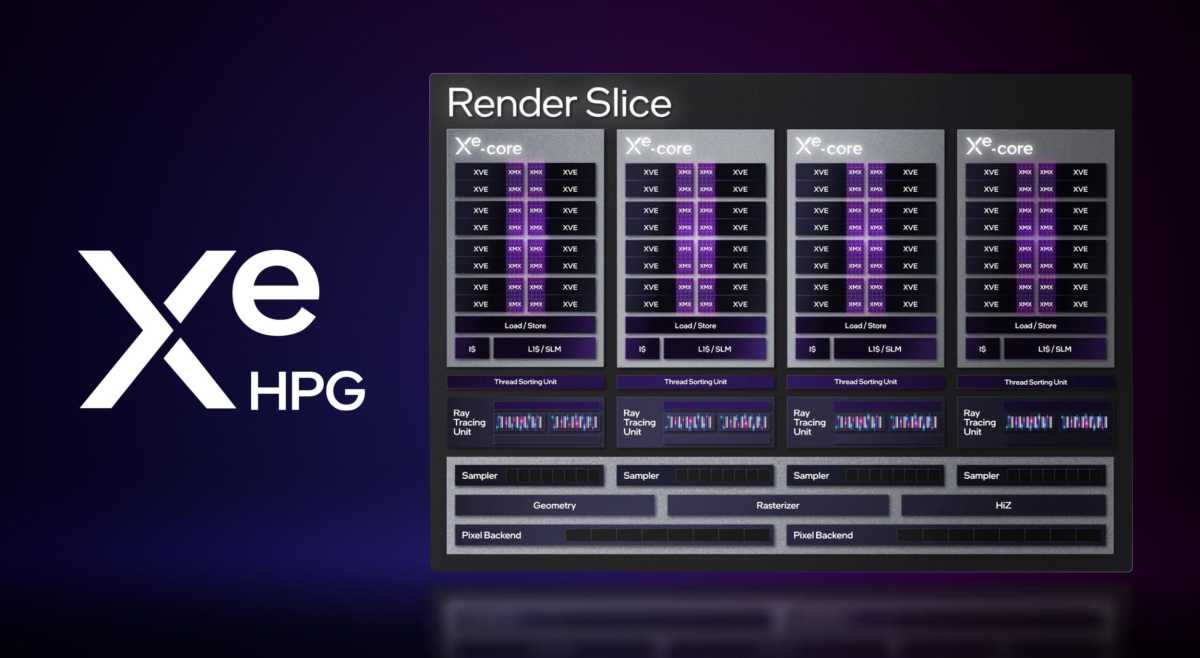

For Intel, Xe HPG “render slices” comprise the backbone of every Arc GPU. Intel’s laptop and desktop Arc offerings can be scaled up or down as needed to fit different market needs, but these render slices are at their heart, containing dedicated ray tracing units, rasterizers, geometry blocks, and the fundamental building block for Arc, the Xe Cores themselves. Xe HPG can scale all the way up to eight render slices in the flagship Arc A770.

Each render slice contains four Xe cores and four ray tracing units, along with all the other bits necessary for running a modern GPU. These render slices are fully DirectX 12 Ultimate compliant, meaning Intel’s Arc GPUs can handle ray tracing, Variable Rate Shading, Mesh Shading, and all the other features associated with that standard.

Intel

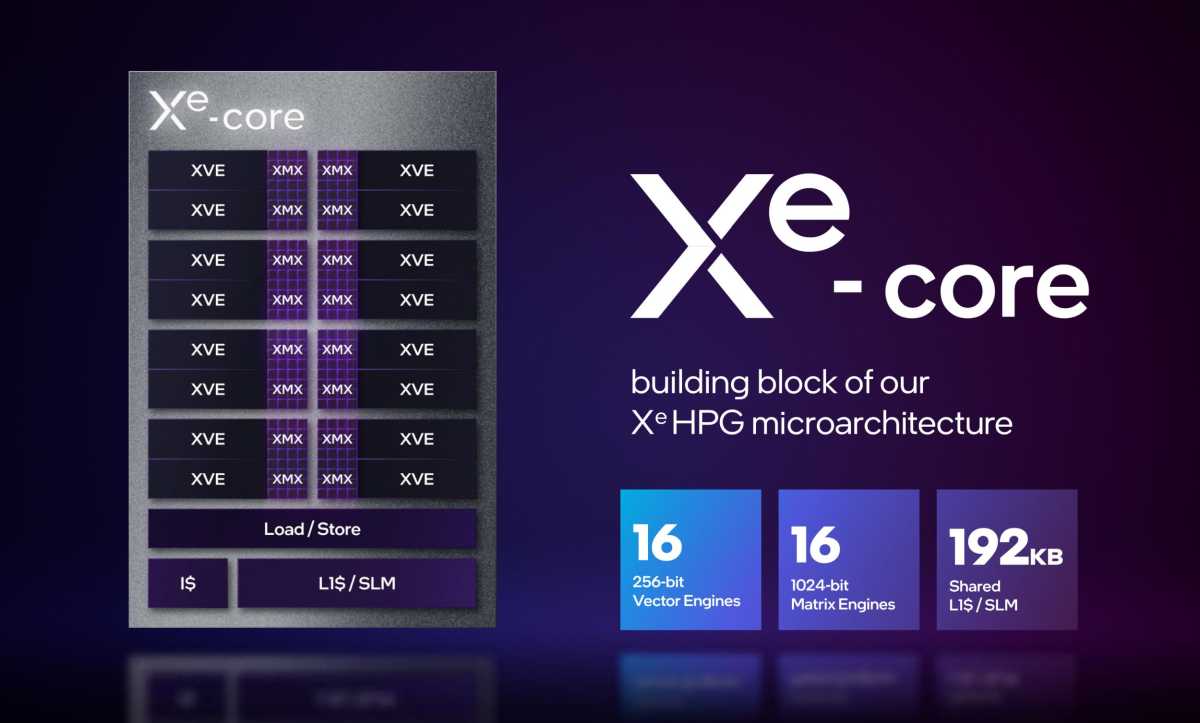

Let’s go deeper and take a peek at the Xe cores themselves. Each Xe core (again, there are four per render slice) consists of three key bits: sixteen 256-bit “XVE” vector engines that handle more traditional rasterization tasks, sixteen 1024-bit “XMX” matrix engines that handle machine learning tasks (like the tensor cores in Nvidia’s rival RTX GPUs), and 192KB of shared L1/SLM cache. That cache can be used to hold tasks during compute workloads, or shaders and textures while gaming.
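To see how those per-slice and per-core figures add up across a whole chip, here’s a minimal Python sketch. The `ArcConfig` class and its property names are hypothetical helpers invented for illustration; only the per-unit counts come from Intel’s description above.

```python
from dataclasses import dataclass

# Per-unit counts quoted in the article.
XE_CORES_PER_SLICE = 4
RT_UNITS_PER_SLICE = 4
XVE_PER_CORE = 16
XMX_PER_CORE = 16
L1_KB_PER_CORE = 192


@dataclass(frozen=True)
class ArcConfig:
    """Hypothetical helper: describes a GPU purely by its render slice count."""
    render_slices: int

    @property
    def xe_cores(self) -> int:
        return self.render_slices * XE_CORES_PER_SLICE

    @property
    def rt_units(self) -> int:
        return self.render_slices * RT_UNITS_PER_SLICE

    @property
    def vector_engines(self) -> int:
        return self.xe_cores * XVE_PER_CORE

    @property
    def matrix_engines(self) -> int:
        return self.xe_cores * XMX_PER_CORE

    @property
    def l1_cache_kb(self) -> int:
        return self.xe_cores * L1_KB_PER_CORE


flagship = ArcConfig(render_slices=8)  # the eight-slice Arc A770
print(flagship.xe_cores, flagship.rt_units, flagship.vector_engines,
      flagship.matrix_engines, flagship.l1_cache_kb)
```

Dropping `render_slices` to a smaller number shows how the same building blocks scale down for cheaper parts.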

Intel

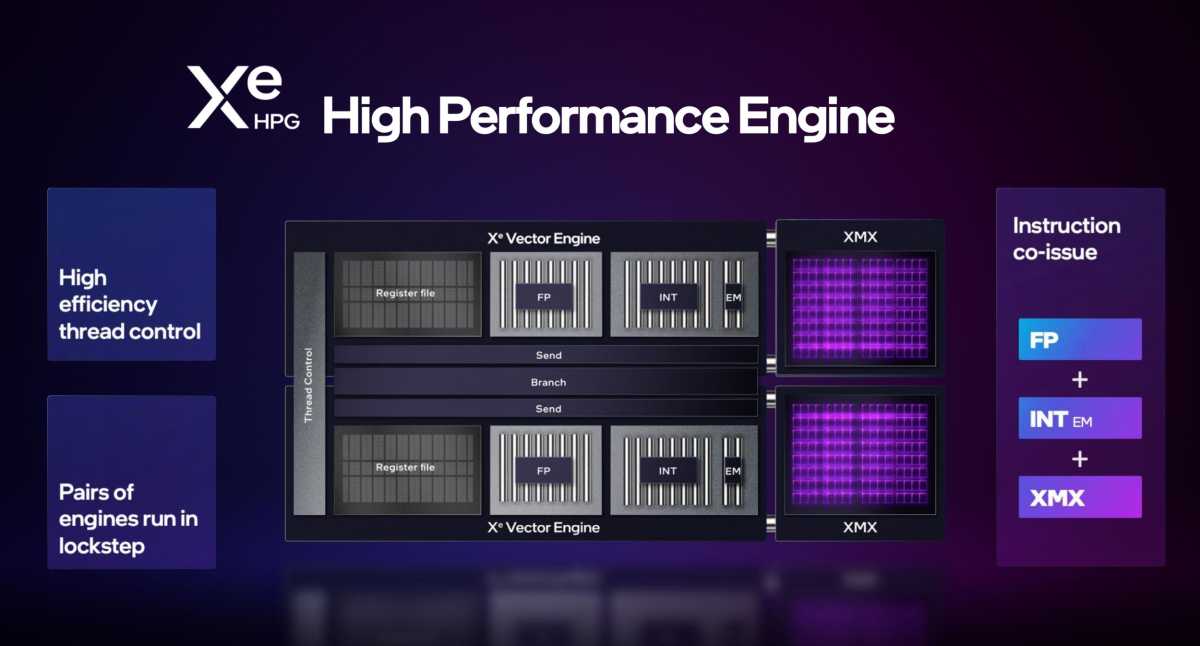

The biggest companies in PC gaming may be betting big on ray tracing being the future of graphics—each Xe Core includes a specialized Thread Sorting Unit designed to help shaders process willy-nilly bouncing ray tracing data more efficiently, for example—but traditional rendering remains king for now. Each Xe Vector Engine includes a dedicated floating point (FP) execution port to handle traditional shading tasks, along with a shared INT/EM port that can tackle integer-based tasks at the same time.

Nvidia introduced concurrent FP/INT pipelines with its RTX 20-series “Turing” architecture to keep integer tasks from clogging up the FP32 pipeline, and it’s become the norm since. “When Nvidia examined how real-world games behaved, it found that for every 100 floating point instructions performed, an average of 36 and as many as 50 non-floating point instructions were also processed, jamming things up,” we wrote in 2018. “The new integer pipeline handles those extra instructions separately from and concurrently with the FP32 pipeline. Executing the two tasks at the same time results in a big speed boost.”
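A back-of-the-envelope model shows why splitting the pipelines helps. This sketch assumes an idealized machine that issues one instruction per port per cycle and ignores dependencies, latency, and scheduling limits, so treat the result as an upper bound rather than a measured figure. The `issue_cycles` function is a hypothetical name used only for this illustration.

```python
def issue_cycles(fp_ops: int, int_ops: int, concurrent: bool) -> int:
    """Idealized issue-slot count, assuming one instruction per port per cycle."""
    if concurrent:
        # Separate FP and INT ports: the longer stream sets the total.
        return max(fp_ops, int_ops)
    # One shared pipeline: every instruction needs its own slot.
    return fp_ops + int_ops


fp, integer = 100, 36  # the average mix quoted above
shared = issue_cycles(fp, integer, concurrent=False)
split = issue_cycles(fp, integer, concurrent=True)
speedup = shared / split
print(shared, split, f"{speedup:.2f}x")
```

With the worst-case mix of 50 integer instructions per 100 floating point ones, the same idealized model gives 150 slots versus 100.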

Intel’s dedicated “XMX” matrix engines hook into the vector engines in each Xe Core. They’re broadly similar to Nvidia’s RTX tensor cores, designed to greatly accelerate machine learning tasks. These are the bits that unlock the potential of XeSS, Intel’s rival to Nvidia’s vaunted DLSS upsampling, as well as other special sauce features like Hyper Compute and the virtual camera feature in Intel’s new Arc Control command center. (Again, read our Arc laptop GPU coverage for deeper insight into those consumer-level features.)

Intel

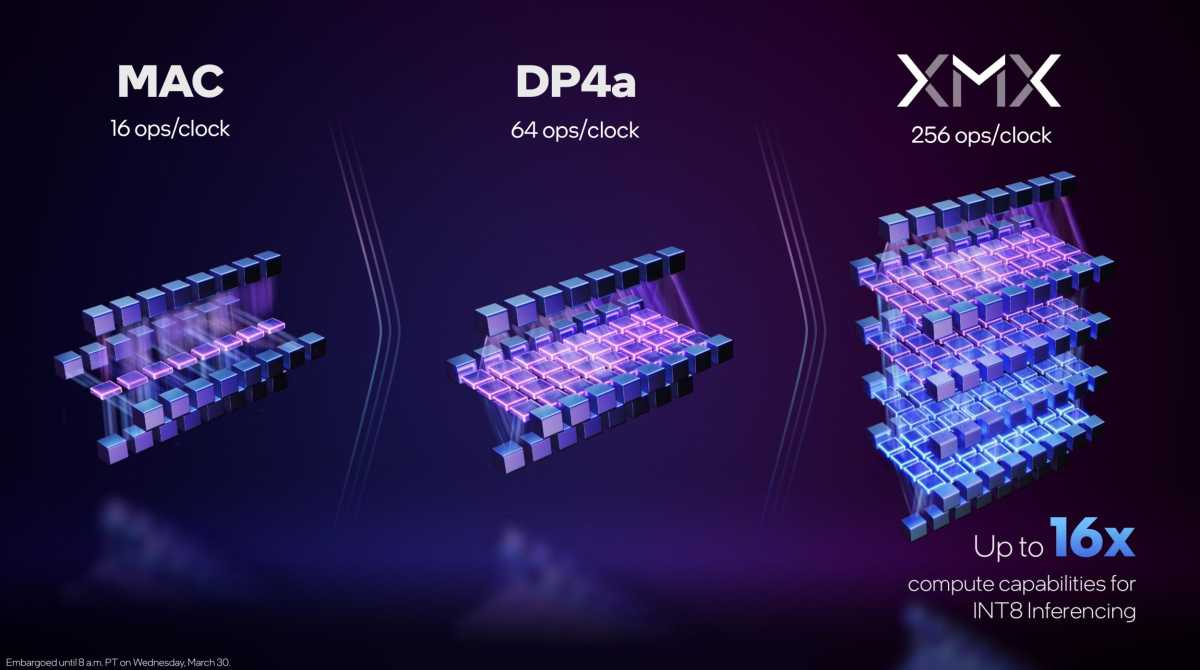

When tapped by compatible software (such as a game with XeSS or an app that supports Hyper Compute), the XMX core’s 4-deep systolic array can calculate up to 256 multiply accumulate (MAC) operations per clock for INT8 inferencing, a massive increase over the 64 ops/clock offered by modern GPUs with DP4a hardware on board, and the 16 ops/clock supported by older GPUs.

Intel’s XeSS supports a fallback mode to run on rival Nvidia and AMD graphics cards that lack XMX cores, defaulting to DP4a hardware instead. This picture illustrates very well why Intel says XeSS runs much, much faster on Arc GPUs with XMX hardware inside.
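For a feel of what that fallback path does, here’s a rough Python emulation of a DP4a-style operation: a dot product of four signed 8-bit values added to a running accumulator. The `dp4a` function is a plain-Python stand-in rather than a real GPU intrinsic, and the `OPS_PER_CLOCK` table simply restates the figures quoted above so the ratios can be computed.

```python
def dp4a(a: list, b: list, acc: int) -> int:
    """Emulate a DP4a-style step: 4-way INT8 dot product plus accumulate."""
    assert len(a) == len(b) == 4, "DP4a works on four packed values per operand"
    assert all(-128 <= x <= 127 for x in a + b), "inputs must fit in signed INT8"
    return acc + sum(x * y for x, y in zip(a, b))


# INT8 ops per clock as quoted in the article.
OPS_PER_CLOCK = {"XMX": 256, "DP4a": 64, "older GPUs": 16}

result = dp4a([1, -2, 3, 4], [5, 6, -7, 8], acc=10)
xmx_vs_dp4a = OPS_PER_CLOCK["XMX"] // OPS_PER_CLOCK["DP4a"]
xmx_vs_older = OPS_PER_CLOCK["XMX"] // OPS_PER_CLOCK["older GPUs"]
print(result, f"{xmx_vs_dp4a}x", f"{xmx_vs_older}x")
```

On those quoted figures, XMX hardware has four times the per-clock INT8 throughput of a DP4a path and sixteen times that of older GPUs.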

Each Xe Core features 16 total Vector and Matrix engines, with pairs of each running in lockstep, able to run FP, INT, and XMX tasks all at the same time. Arc GPUs can be kept very, very busy indeed. The full extent of that busyness, and a deeper dive into how Xe HPG handles complex ray tracing tasks, can be found in Intel’s explainer video.

Xe HPG media engine and AV1 encoding

Intel has always been proud of its media engines, spearheaded by the lightning-fast QuickSync technology, and Xe HPG’s media engine is no different. It includes all the modern capabilities you’d expect in a graphics chip (various 8K HDR encode and decode support, HEVC, VP9, you name it) but also one big inclusion that no other chip (CPU or GPU) offered when Arc was announced: hardware-accelerated AV1 encoding. (Nvidia’s GeForce RTX 40-series will also support AV1 encoding, however.)

The highly efficient next-generation video standard was created by a consortium of industry giants and is rapidly becoming the norm. Modern desktop GPUs already support AV1 decoding, which can help you watch 8K videos without your system setting itself on fire, but until now you had to rely on software alone to actually create AV1 videos.

Intel says that the hardware-accelerated AV1 creation unlocked by Arc is 50 times faster than software encodes, or alternatively can deliver much clearer streaming visuals at the same bitrate as other encoders. We’ve tested Arc’s AV1 chops and discovered it indeed puts Nvidia and AMD’s traditional encoders to shame. (Yes, even NVENC.)

Paired with the Hyper Encode feature offered in all-Intel laptops and desktops as part of the company’s Deep Link suite, which leverages the media engines in both the CPU and GPU rather than one or the other, Arc-based systems could prove terribly compelling for video creators.

Xe HPG display engine

The Xe HPG display engine remains consistent across the Arc GPU stack, meaning every Arc graphics card offers the same video output capabilities (though the exact port configuration will vary by model). Don’t expect good frame rates if you actually try gaming on a pair of 8K screens, but it’s good to know Arc will support it if you want all the pixels for your productivity tasks!

Meet the Intel Arc A-series GPU lineup

Let’s take a moment to bring all this technical talk back to the practical realm. Intel cobbled together a bunch of Xe cores and render slices into a pair of dedicated Arc “Alchemist” GPUs: the higher-end ACM-G10, which powers the flagship Arc 7 graphics options, and the more modest ACM-G11, which appears in Arc 3 laptops and desktop GPUs.

From there, those GPUs can be sliced and diced to meet different market needs. The charts above show how the first generation of Arc graphics for laptops shook out.

Xe HPG graphics clock speeds

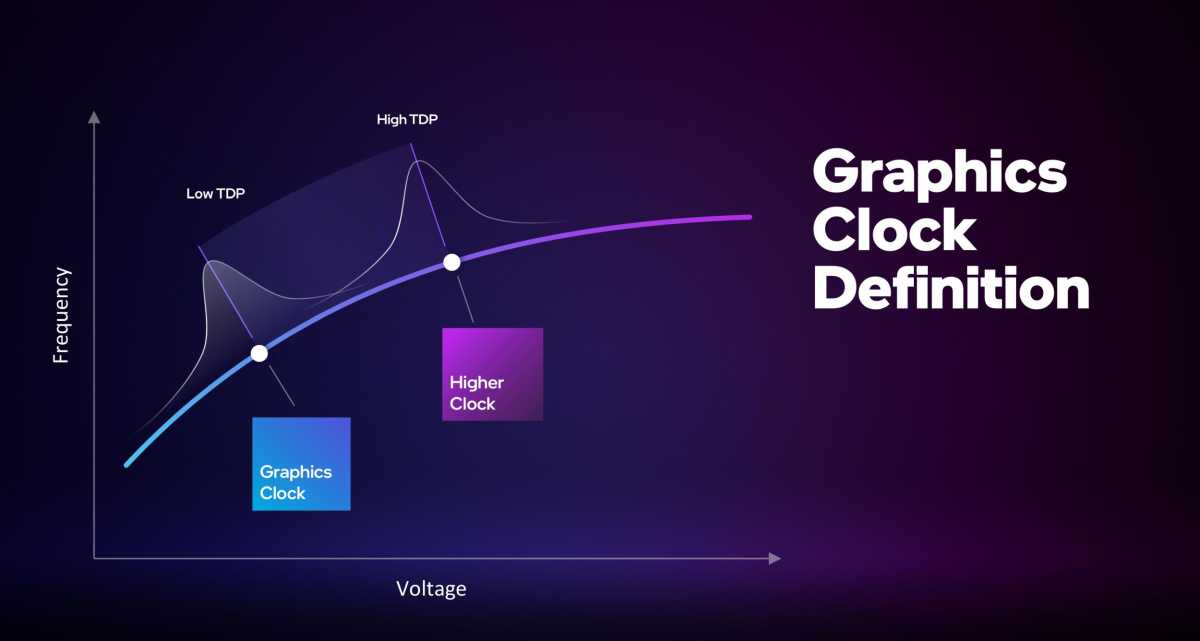

Something might have jumped out at you in those laptop GPU spec charts above: their ultra-low clock speeds. (The desktop GPUs run much faster, and much more typically.) In an era where Nvidia’s GPUs push 2GHz and some AMD GPUs clear 2.5GHz, seeing Intel’s Arc mobile topping out at 1650MHz and going as low as 900MHz is a tad eyebrow-raising. Clock speeds between rival graphics brands aren’t as clear cut as they seem, however.

AMD’s “Game Clock” for Radeon GPUs isn’t the same as Nvidia’s “Boost Clock,” as I’ve explained before. Intel is using yet another metric for its Arc GPUs, dubbed “Graphics Clock.” Petersen defined Intel’s Graphics Clock as the average clock speed across the typical light and heavy workloads that a particular GPU was intended for (so gaming for Xe HPG and likely compute tasks for workstation cards, for example). If you look at the laptop GPU charts above, you’ll also see a range of TDPs defined for each; the Graphics Clock is based on the lowest available TDP. In other words, Intel’s Graphics Clock for laptop graphics essentially represents almost a worst-case scenario for Arc GPUs. (Desktop GPUs use a fixed power budget and behave much more typically, of course.)
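Read literally, that definition boils down to “average the light and heavy workload clocks at the lowest TDP.” Here’s a small Python sketch of that reading. The `graphics_clock` function and every number in `samples` are made up for illustration; Intel hasn’t published its exact methodology or workload mix.

```python
from statistics import mean

# Hypothetical measurements: TDP in watts -> average MHz per workload type.
samples = {
    25: {"light": 1400, "heavy": 1000},
    35: {"light": 1700, "heavy": 1350},
}


def graphics_clock(samples_by_tdp: dict) -> float:
    """Average the workload clocks at the lowest TDP, per the definition above."""
    lowest_tdp = min(samples_by_tdp)
    return mean(samples_by_tdp[lowest_tdp].values())


rated = graphics_clock(samples)
best_case = max(clock for clocks in samples.values() for clock in clocks.values())
print(rated, best_case)
```

In this invented example the rated figure sits well below the fastest observed clock, which is the near-worst-case behavior described above.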

All that said, graphics cores can run at different speeds depending on how hard they’re being pushed. They’ll hit much higher speeds in 2D retro games and much lower speeds in complex modern games that hit every part of the Xe Core and Render Slice, for example. Wattage can make a massive difference to performance as well; as we’ve seen with Nvidia’s mobile GeForce offerings, pumping more juice into a GPU can help propel a lower-tier GPU past a low-watt version of an ostensibly more potent sibling.

It’s also worth noting that clock speed isn’t everything. In the same company’s architecture, faster is generally better—a 2GHz GeForce GPU will be faster than a 1.5GHz one, say. But AMD’s desktop Radeon RX 6500 XT lags behind its siblings despite packing a ludicrously fast 2.8GHz clock speed. Raw clock speed gains are far from the only way to drive faster performance, as AMD’s Robert Hallock once explained on our Full Nerd podcast. That company’s Ryzen 7 5800X3D processor actually saw big gaming performance gains by dropping clock speeds and plopping a huge slab of cache atop the chip.
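A quick bit of arithmetic shows why a higher clock alone doesn’t settle things. The sketch below uses the common rule of thumb that theoretical FP32 peak equals vector units times lanes times two operations (a fused multiply-add) times clock speed. Both GPUs are hypothetical, the `peak_gflops` helper is invented for this example, and real-world results also hinge on memory bandwidth, cache, and drivers.

```python
def peak_gflops(vector_units: int, lanes_per_unit: int, clock_ghz: float) -> float:
    """Theoretical FP32 peak: units x lanes x 2 ops (fused multiply-add) x GHz."""
    return vector_units * lanes_per_unit * 2 * clock_ghz


# A 256-bit vector engine holds eight 32-bit lanes.
LANES = 256 // 32

# Two hypothetical chips: many units at a modest clock vs. few units clocked high.
wide_and_slow = peak_gflops(512, LANES, 2.0)
narrow_and_fast = peak_gflops(128, LANES, 2.8)
print(f"wide/slow:   {wide_and_slow:,.0f} GFLOPS")
print(f"narrow/fast: {narrow_and_fast:,.0f} GFLOPS")
```

The wider chip comes out far ahead on paper despite giving up 800MHz, because it has four times as many units doing work each cycle.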

It’s complicated, is what I’m saying.

But wait, there’s more!

Brad Chacos/IDG

And that about does it for our tour of Intel’s Xe HPG architecture. If all this talk about matrix engines and media encoders got you hot and bothered, be sure to check out our Intel Arc A770 and A750 graphics card review for a deep dive into how all these technical tidbits manifest in reality.

Arc performs very differently than its rivals, for better and sometimes for worse, and Xe HPG is the engine driving it all. Intel’s Arc A750 and A770 Limited Edition hit store shelves on October 12.