The Nvidia GeForce RTX 4080’s slow-paced announcement and eventual launch has been… a fascinating road. From its mis-named little brother getting “unlaunched” to the RTX 4080 Founders Edition being the same size as the massive RTX 4090 for some reason, to the melting 12VHPWR power connectors—which are still on this card, yes. Nvidia has quite the PR battle with the $1,200 GeForce RTX 4080, but if you ignore all the noise, does this new card deliver the goods for content creators?

Thankfully, performance-wise it does mostly live up to the hype; I just find myself wishing we had higher VRAM capacities on such expensive graphics cards.

This review is focused on work, instead of play, similar to my recent RTX 4090 content creation analysis. Photo editing, video editing, encoding, AI art generation, 3D and VFX workflows—stuff I use every day for my EposVox and analog_dreams YouTube channels. Look for PCWorld’s gaming-focused RTX 4080 review to land tomorrow, or check out our high-level holistic look at 5 must-know RTX 4080 facts from our extensive gaming and creator testing right now.

Let’s dig in.

Our test setup

Most of my benchmarking was performed on this test bench:

- Intel Core i9-12900K CPU

- 32GB Corsair Vengeance DDR5 5200MT/s RAM

- ASUS ROG STRIX Z690-E Gaming Wifi Motherboard

- EVGA G3 850W PSU

- Source files stored on a PCIe gen 4 NVMe SSD

This time, my objective was to see how well the new RTX 4080 stands its ground against the last-generation GeForce RTX 3080 and RTX 3090. At $1,200, the RTX 4080 needs to really outshine the RTX 3090 (which can now be had for $1,000 to $1,200 on average). But if it does, the question becomes whether the RTX 4090 is warranted at all.

For some more hardcore testing, which I’ll mention later, I used this test bench:

- AMD Threadripper Pro 3975WX CPU

- 256GB Kingston ECC DDR4 RAM

- ASUS WRX80 SAGE Motherboard

- BeQuiet 1400W PSU

- Source files stored on a PCIe gen 4 NVMe SSD

Each GPU was tested in the same configuration so as not to mix results.

Photo editing

I’m going to keep testing this because there are constant “little surprises” in performance in photo apps, even if, realistically, you’re not going to see any major gains upgrading from one high-end graphics card to another.

Adam Taylor/IDG

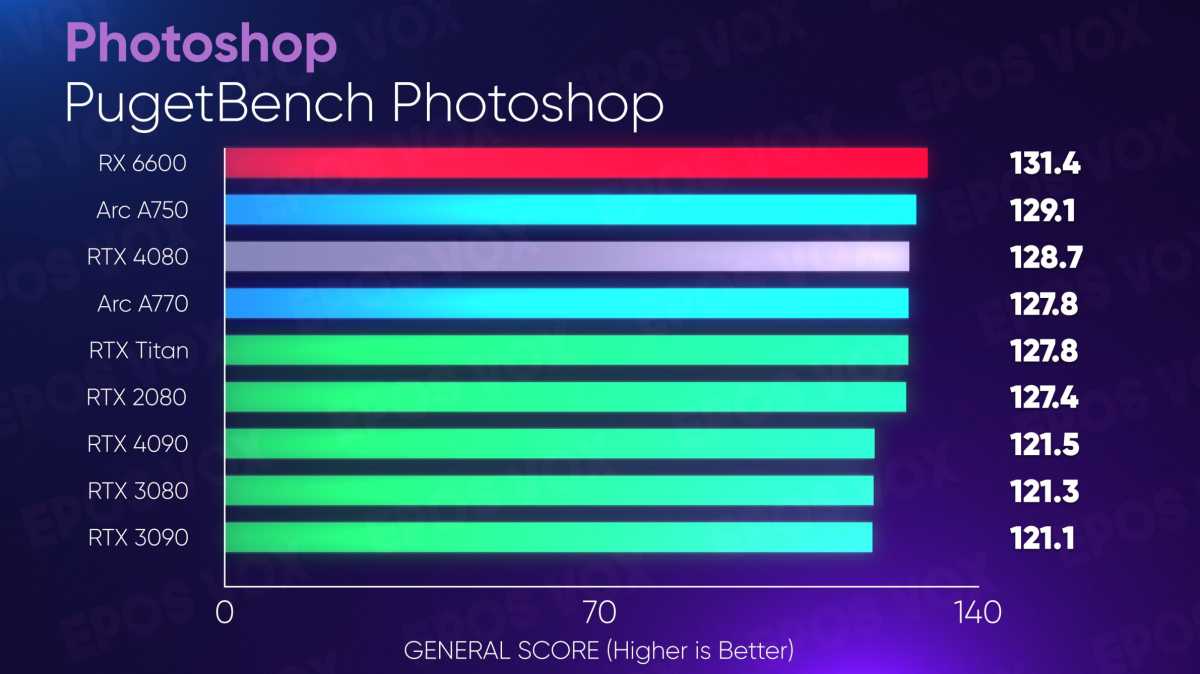

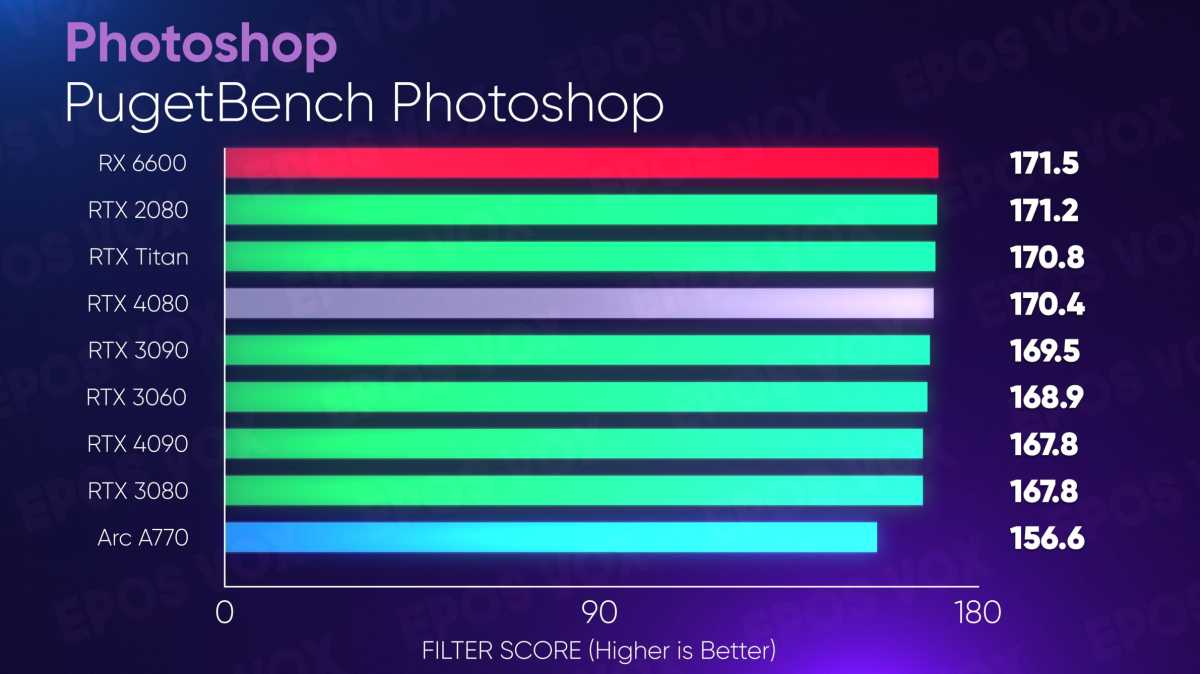

The GeForce RTX 4080 tops the charts for most scores in Adobe Photoshop (other than the AMD Radeon RX 6600’s weirdly high performance… perhaps we need to get some more AMD cards in the house for an AMD photo editing deep dive). Photoshop was tested using the PugetBench test suite, provided by the awesome workstation builders over at Puget Systems.

Adam Taylor/IDG

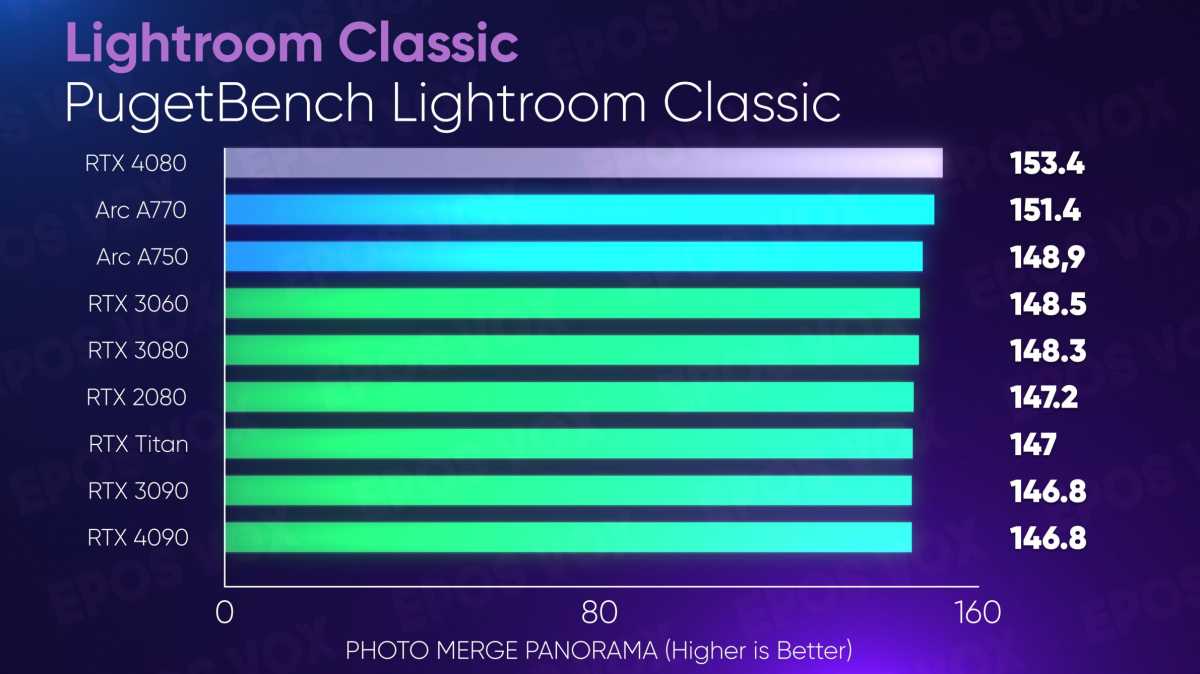

In Lightroom Classic (also using PugetBench), the RTX 4080 beats out even the 4090’s overall score, though for most individual tasks you’ll see marginal gains over the RTX 3080 and 3090 and performance right up against the 4090’s.

Adam Taylor/IDG

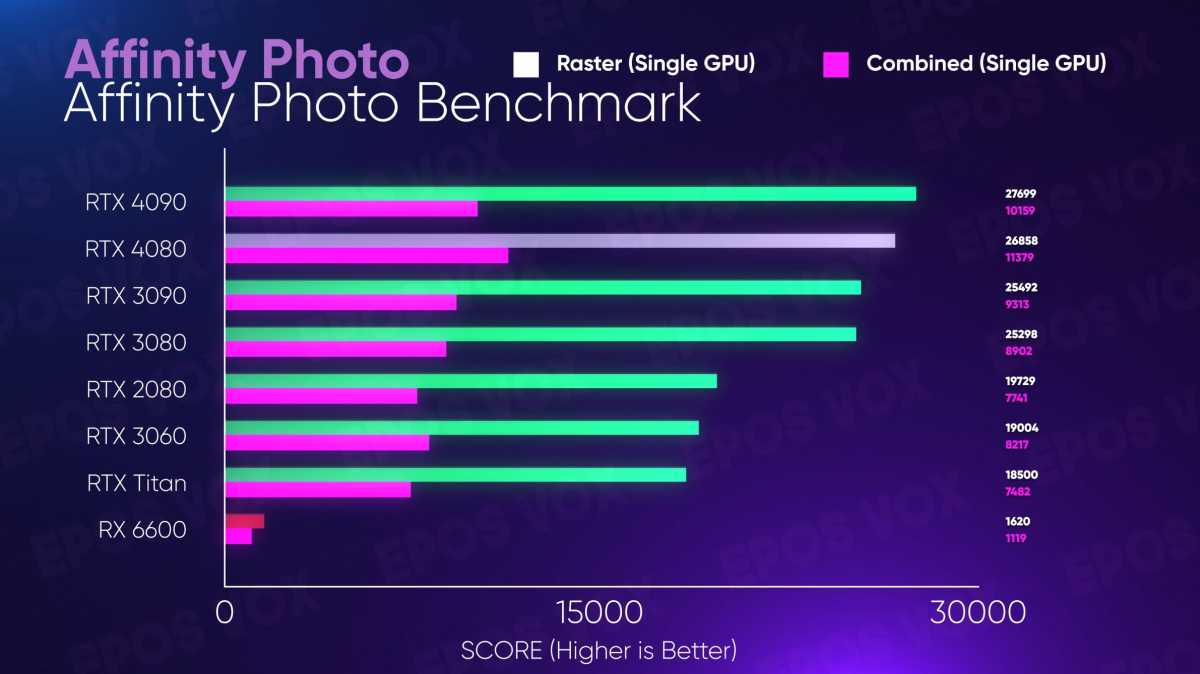

In Affinity Photo (I know V2 just came out… let’s keep it consistent for now), the RTX 4080 slots perfectly between the RTX 3090 and 4090, as it should.

Adam Taylor/IDG

Video editing

Adam Taylor/IDG

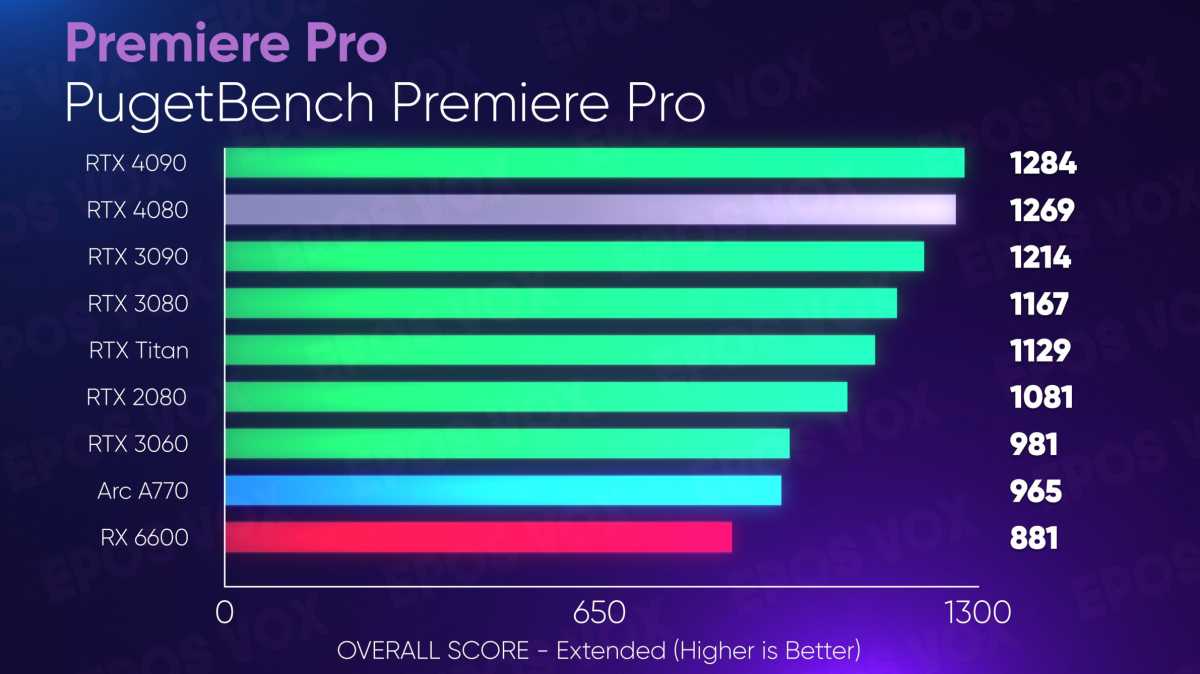

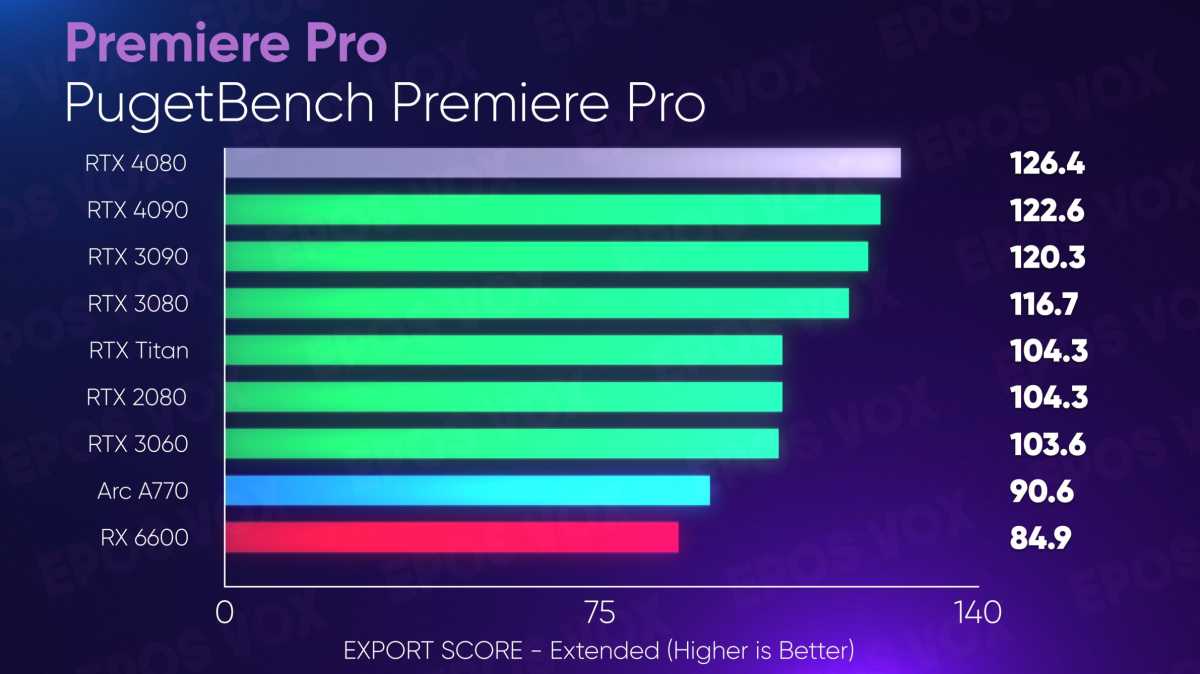

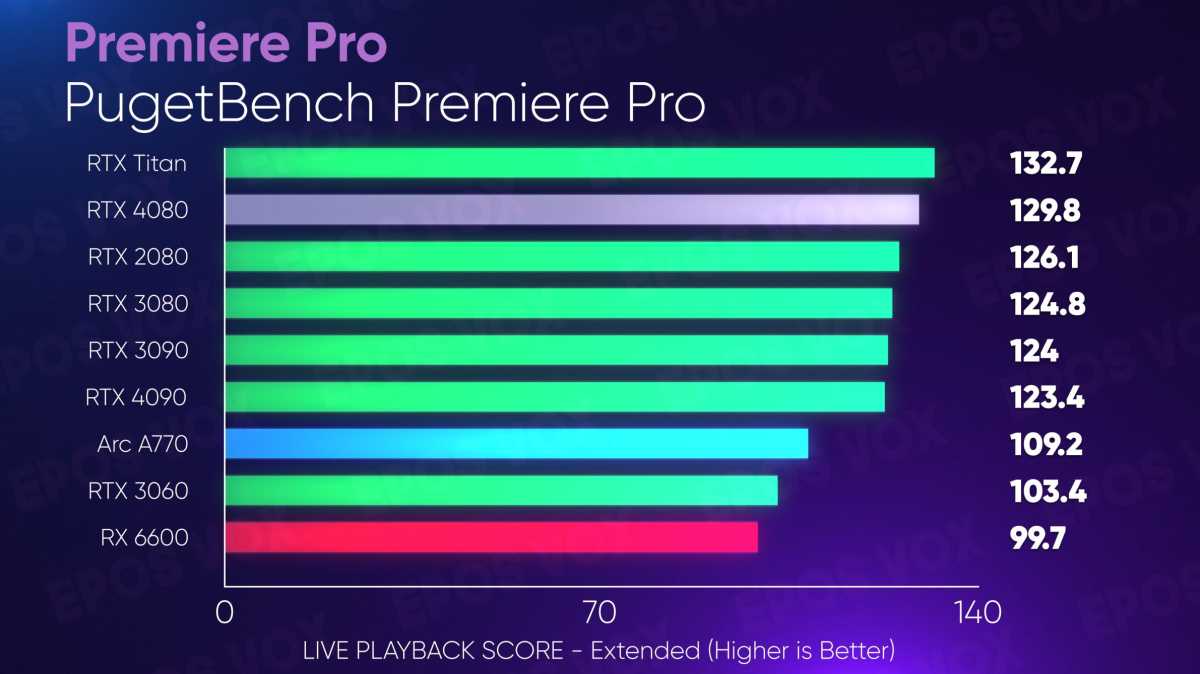

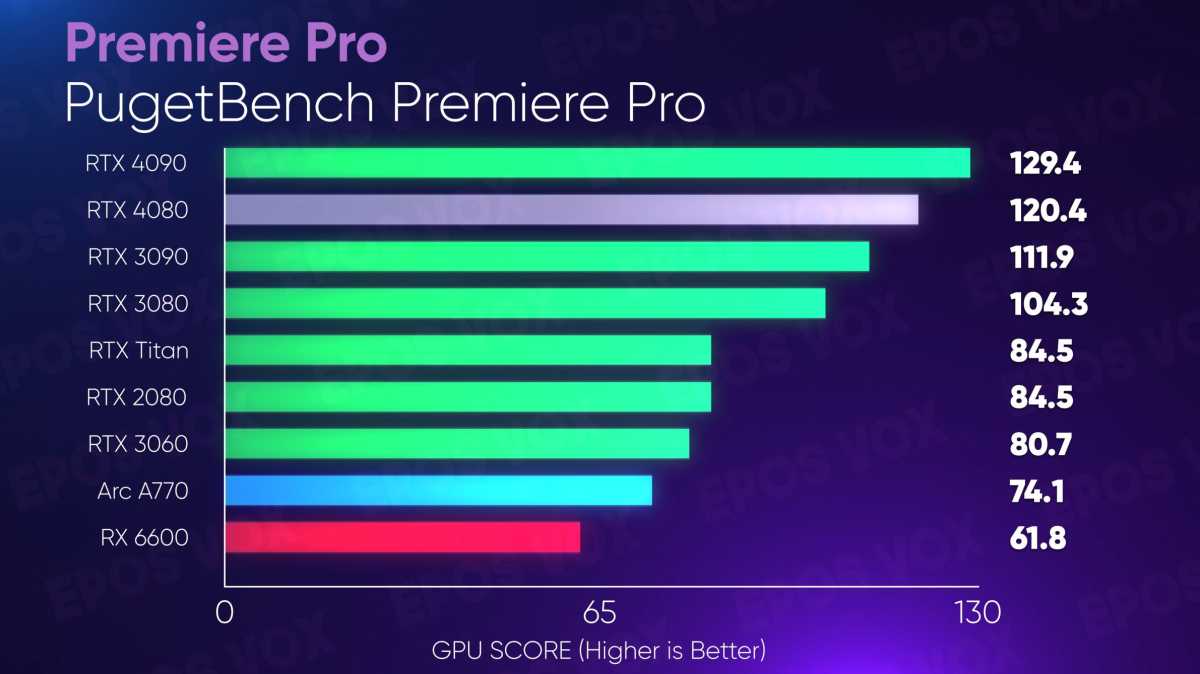

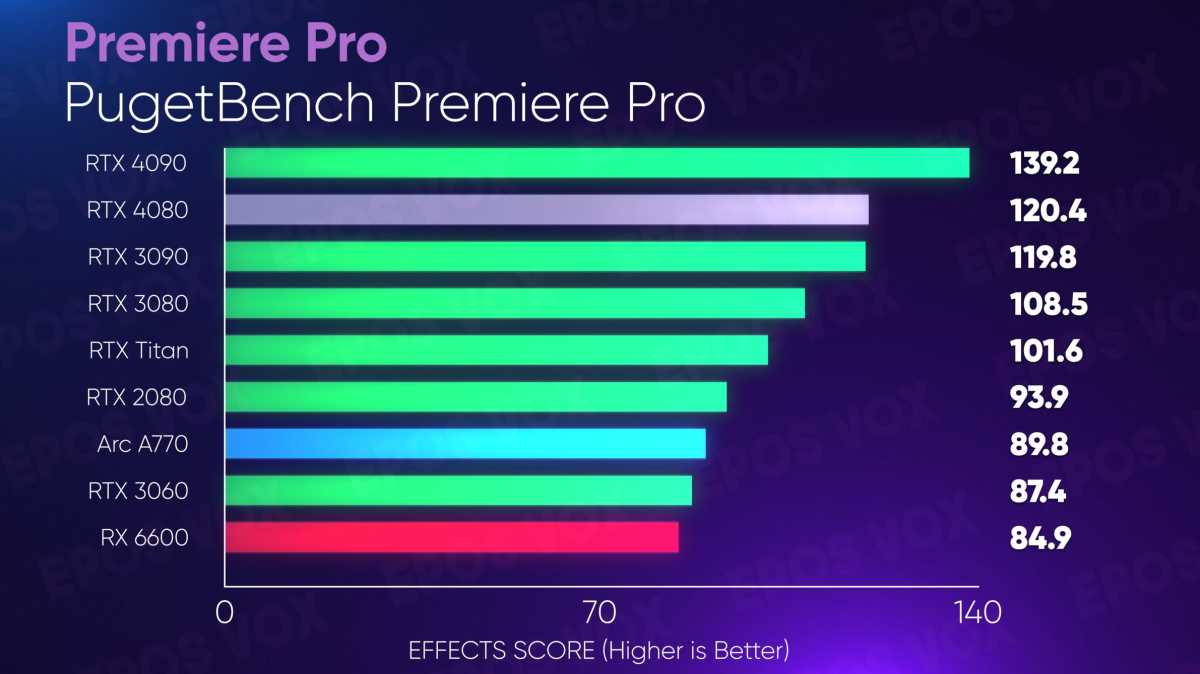

In Adobe Premiere Pro, PugetBench puts the new RTX 4080 a fair bit higher than the RTX 3090 and just under the RTX 4090. Interestingly, its export and playback scores were slightly higher than the RTX 4090’s, while its Effects and GPU scores were lower. If you’re doing GPU-heavy workloads at 4K and higher, you’ll absolutely want a 4090, but most video editors will be just fine with a 4080.

Adam Taylor/IDG

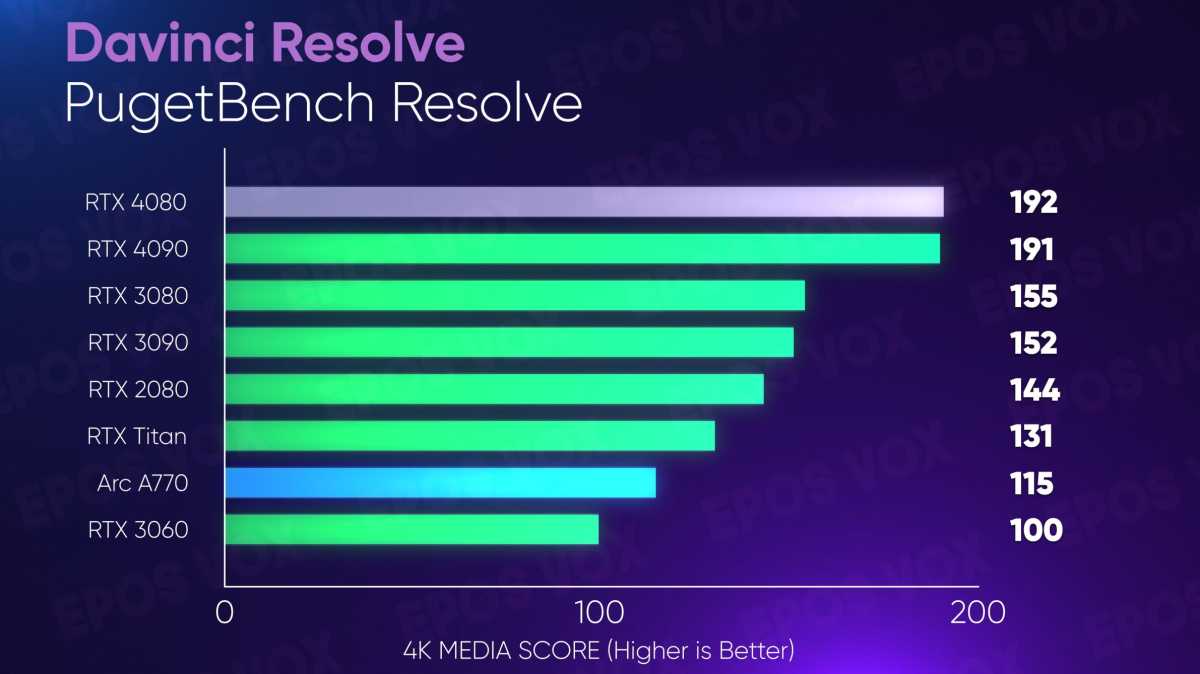

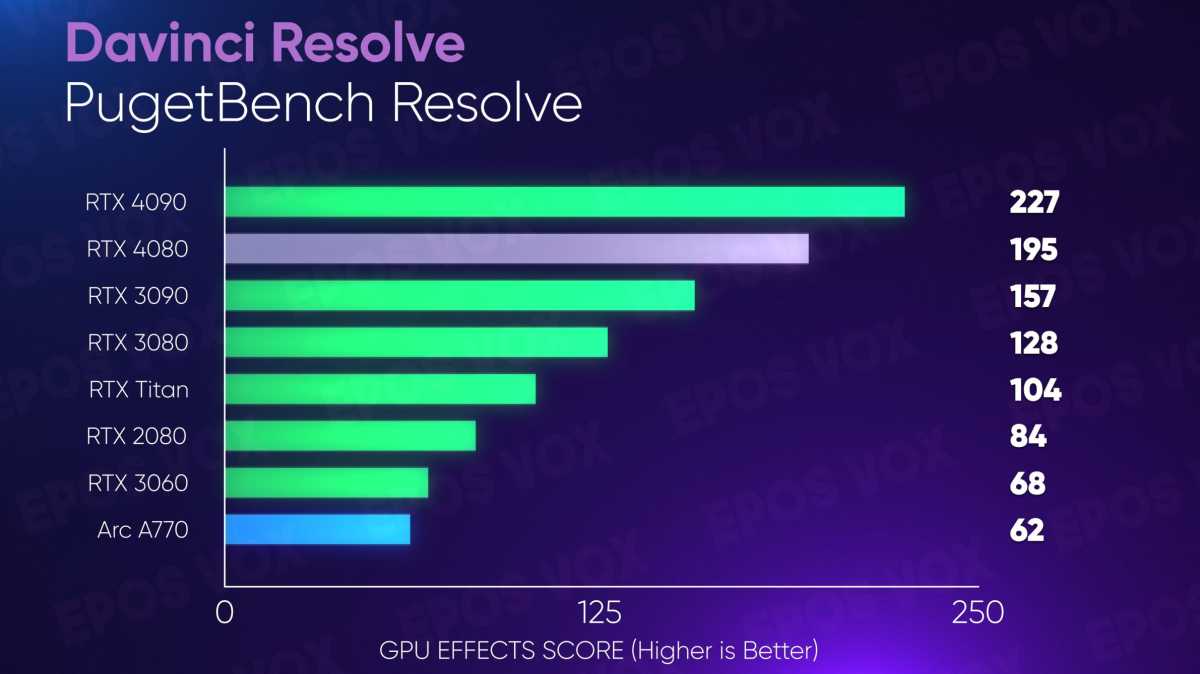

This carries over into Blackmagic DaVinci Resolve. Resolve just got a massive update to version 18.1, bringing AI audio cleanup, new Fusion features, a long-awaited fix for fractional scaling issues on Windows, and performance boosts.

Adam Taylor/IDG

Running PugetBench, we see the GeForce RTX 4080 put up pretty substantial gains, gains you would feel, over last gen’s RTX 3080 and even the 3090. This is seriously impressive stuff. If you were considering a 3090 or RTX Titan at around $1,000 for video editing, I’d honestly consider spending an extra couple hundred dollars to get this boost plus AV1 encoding! If you were eyeing a 3080 or 3070 instead, the 4080 is a much tougher sell given its higher cost.

Adam Taylor/IDG

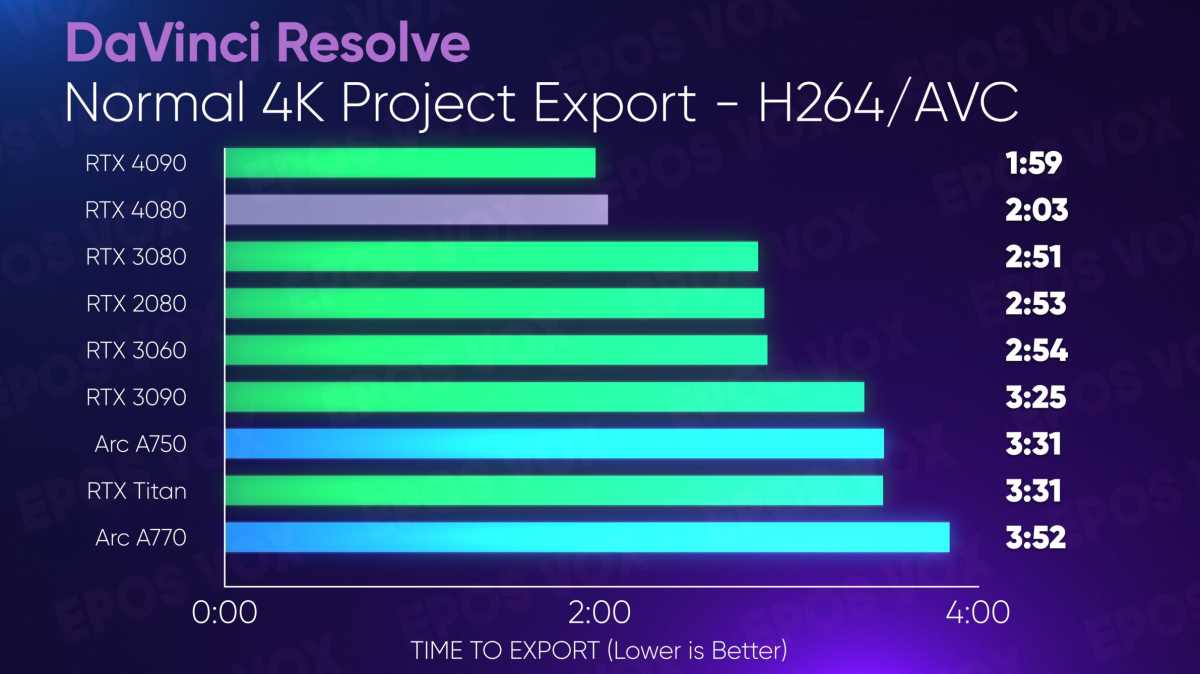

Exporting a sample 4K project, the RTX 4080 and RTX 4090 run laps around the older cards in H.265, and around Intel’s Arc in AV1. They’re still faster than the older cards in H.264, too, but by much smaller margins.

Adam Taylor/IDG

Throwing the card into my Threadripper Pro build to test my intense 8K project full of 8K RAW footage, Resolve SuperScaled 4K footage, plugins, and effects… unfortunately, the 4080 couldn’t export it, just like the previous 80-tier cards I tested. 16GB of VRAM still isn’t enough, I suppose: plugins error out, color-graded footage takes longer than on the 4090, and so on. 8K is tough, but for $1,200 I would hope to start seeing higher VRAM capacities by now, when Intel is handing out $350 Arc graphics cards with 16GB. Strange times.

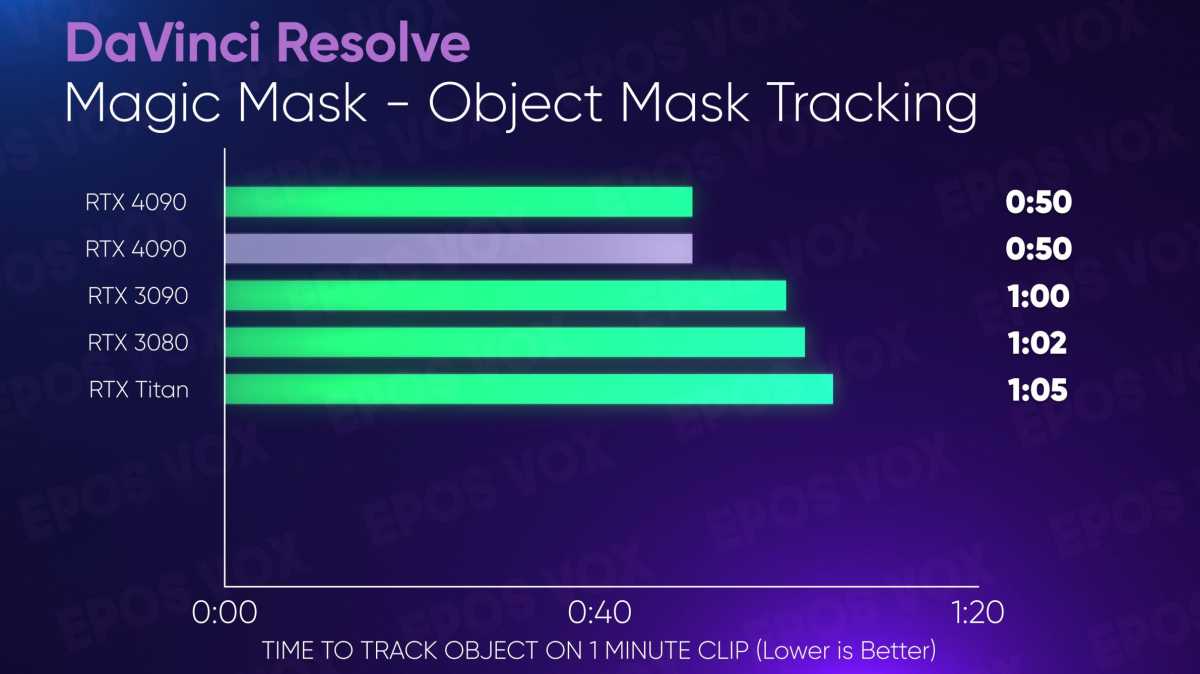

One of the big improvements in Resolve 18.1 is faster AI-based Face Tracking and object masking. The beta build of Resolve I had access to for my RTX 4090 review was supposed to include performance boosts for this, too, but from what I can tell the Face Tracking updates weren’t working right, because tracking is significantly faster in 18.1 than even in that preview build.

The time taken to face track on 8K RAW footage in a 4K timeline dropped by 45 percent across all tested GPUs. Even the older Ampere and Turing cards moved faster for this typically very slow task—but Lovelace just runs away with it.
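Note that a 45 percent cut in task time is a bigger jump in throughput than “45 percent faster” might suggest. Here’s a minimal sketch of that arithmetic in Python; the function name is hypothetical and purely illustrative.

```python
def speedup_from_time_reduction(reduction: float) -> float:
    """Return the throughput multiplier implied by a fractional drop in task time.

    A task that now takes (1 - reduction) of its old time runs
    1 / (1 - reduction) times as fast. `reduction` must be in [0, 1).
    """
    if not 0 <= reduction < 1:
        raise ValueError("reduction must be in [0, 1)")
    return 1 / (1 - reduction)


# A 45 percent reduction in face-tracking time means roughly 1.82x the throughput.
print(f"{speedup_from_time_reduction(0.45):.2f}x")
```

In other words, the same clip that used to tie up your machine for a full tracking pass now clears in a little over half the time.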

Adam Taylor/IDG

The RTX 4080 tracks just barely slower than the 4090, but both leave the Ampere cards in the dust. Weirdly, the RTX Titan comes in a close third behind the new cards. Perhaps there’s some added AI magic in the Titan hardware, or a floating point performance advantage? I’m not sure.

Adam Taylor/IDG

Object masking didn’t improve as much on older generations, but the GeForce RTX 4080 matches the 4090 here and puts up 17 percent faster speeds than the older cards. Very rad. Huge shout-out to the Resolve team for nailing such a big update, and if you edit video every day like I do, this new generation is going to earn your time back fast. I’m so tired of waiting on things.

AI wizardry

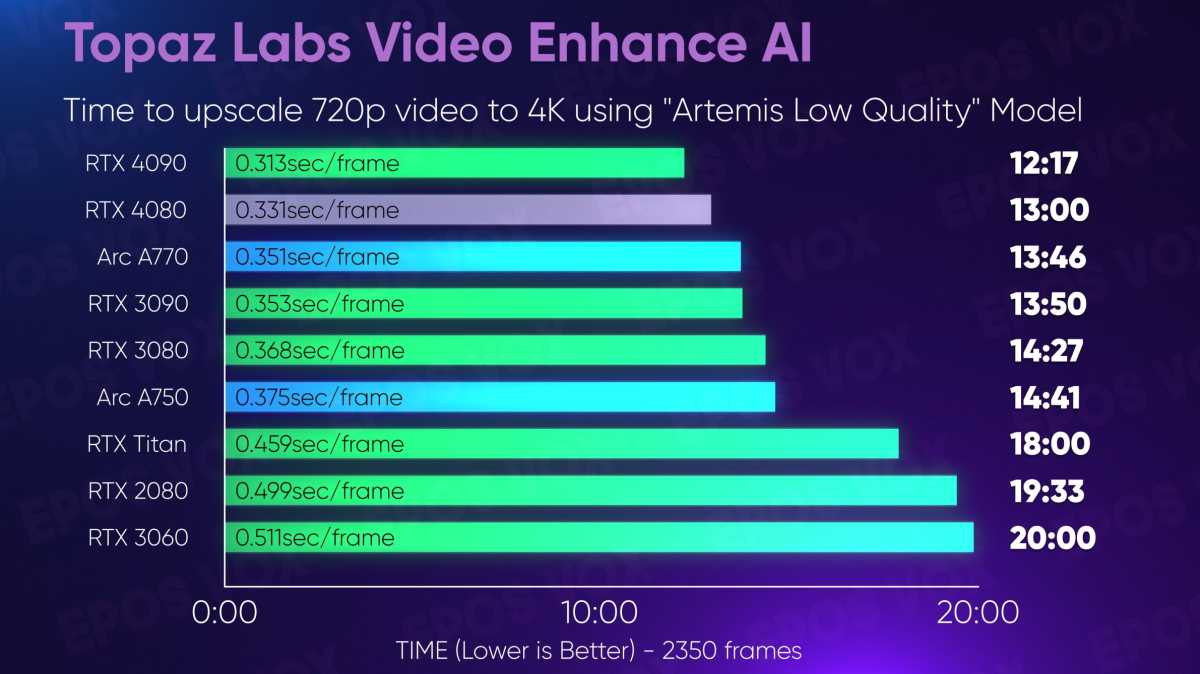

Upscaling video to 4K using Topaz Labs’ Video Enhance AI (720p upscaled to 4K using Artemis Low Quality), the GeForce RTX 4080 is a tad slower than the 4090, but still almost a minute faster than the RTX 3090. It’s still a little too slow for me to be comfortable using it on the regular for quick-turnaround projects, but we’re getting there!
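For a sense of why this job is so heavy, here’s the scaling arithmetic as a small Python sketch. It assumes 720p means 1280×720 and that “4K” means UHD 3840×2160 rather than DCI 4096×2160; the function name is hypothetical.

```python
# Assumes 720p = 1280x720 and "4K" = UHD 3840x2160 (not DCI 4096x2160).
SOURCE = (1280, 720)
TARGET = (3840, 2160)


def upscale_factors(src: tuple[int, int], dst: tuple[int, int]) -> tuple[float, float, float]:
    """Return (horizontal scale, vertical scale, ratio of total pixels per frame)."""
    scale_x = dst[0] / src[0]
    scale_y = dst[1] / src[1]
    pixel_ratio = (dst[0] * dst[1]) / (src[0] * src[1])
    return scale_x, scale_y, pixel_ratio


sx, sy, ratio = upscale_factors(SOURCE, TARGET)
print(f"{sx:g}x per axis, {ratio:g}x the pixels per frame")
```

Every output frame carries nine times as many pixels as its source, and the AI model has to synthesize most of them, which goes some way toward explaining why even the fastest cards still take a while here.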

Adam Taylor/IDG

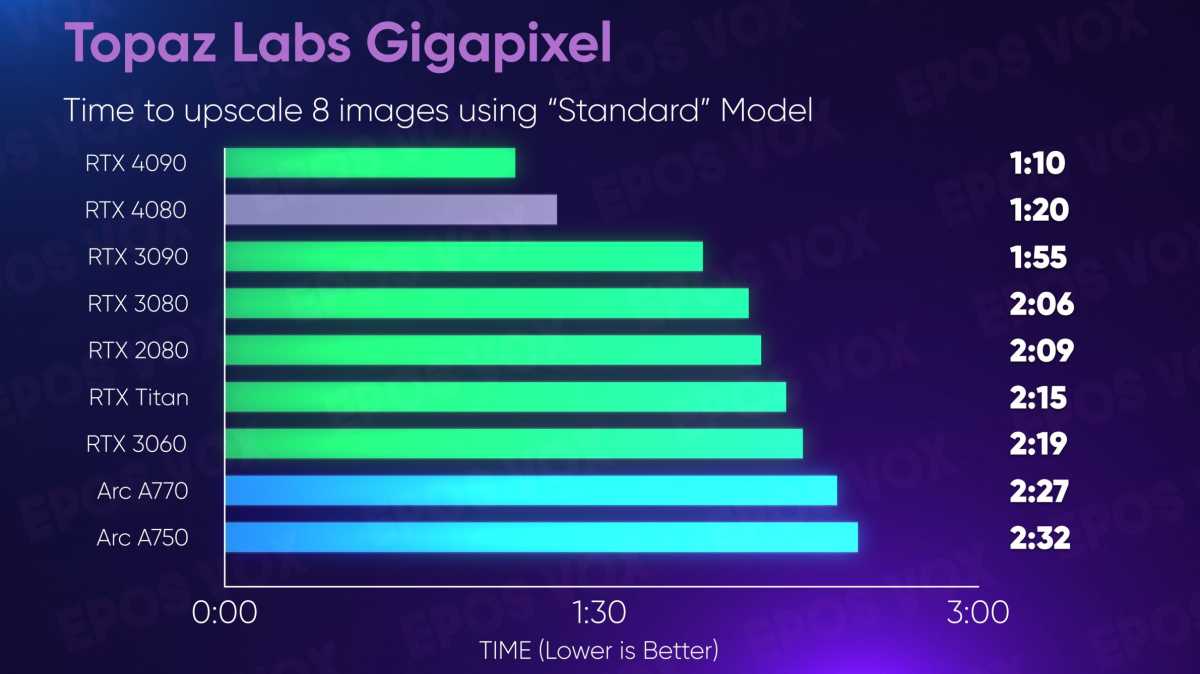

Similar results are found in Gigapixel. Upscaling 8 images shows the RTX 4080 running 10 seconds slower than the 4090, but still a fair bit faster than anything else.

Adam Taylor/IDG

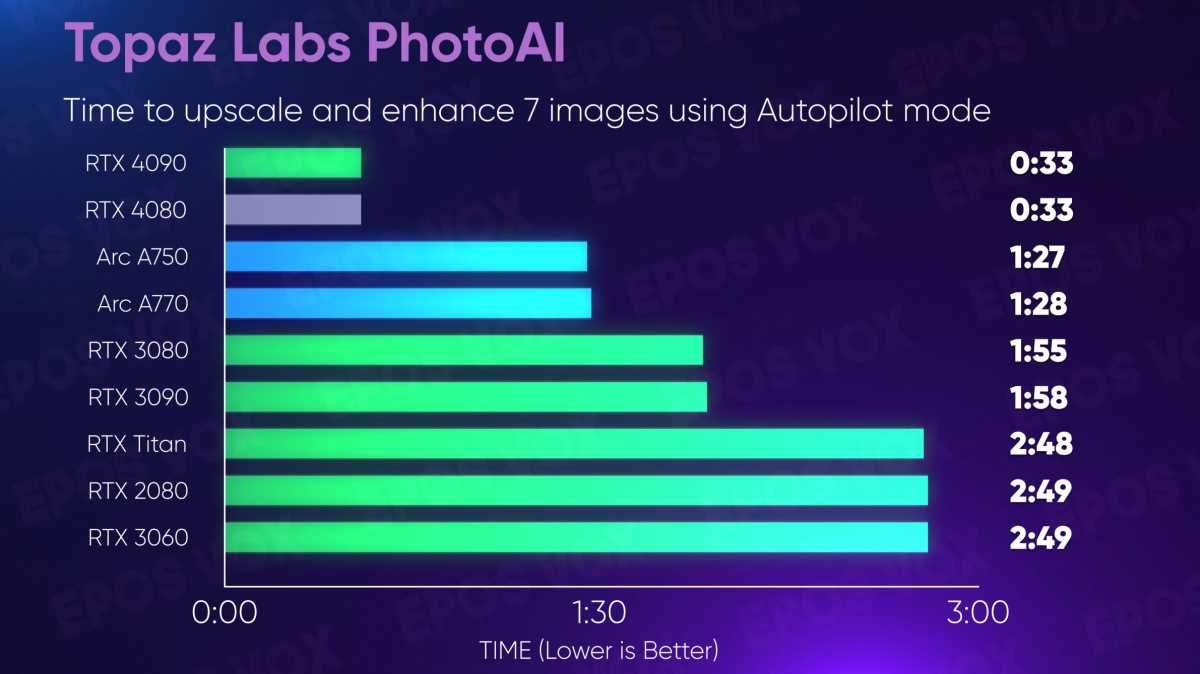

PhotoAI needed to be completely re-tested. Remember how terribly Nvidia cards performed in my RTX 4090 review? Apparently a big bug was causing that, and Topaz has now fixed it. The RTX 4080 performs the same as the 4090 here, and both cards take less than a third of the time the older cards take. This is the kind of AI performance improvement I was expecting to see with Lovelace!

Adam Taylor/IDG

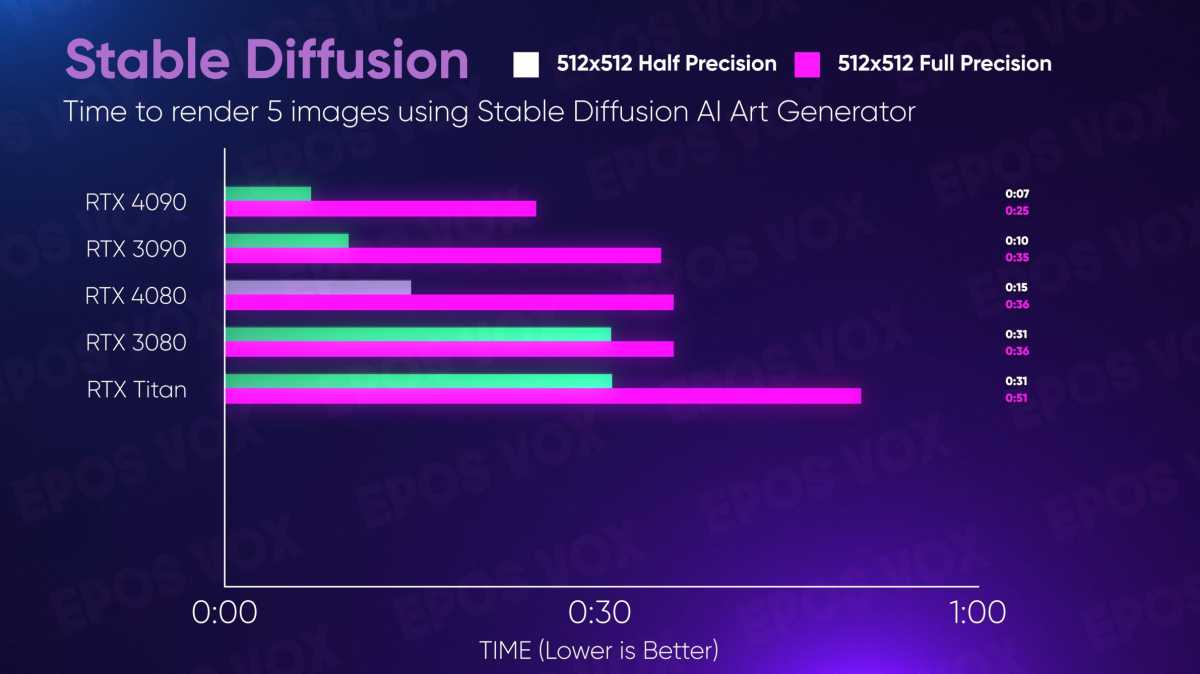

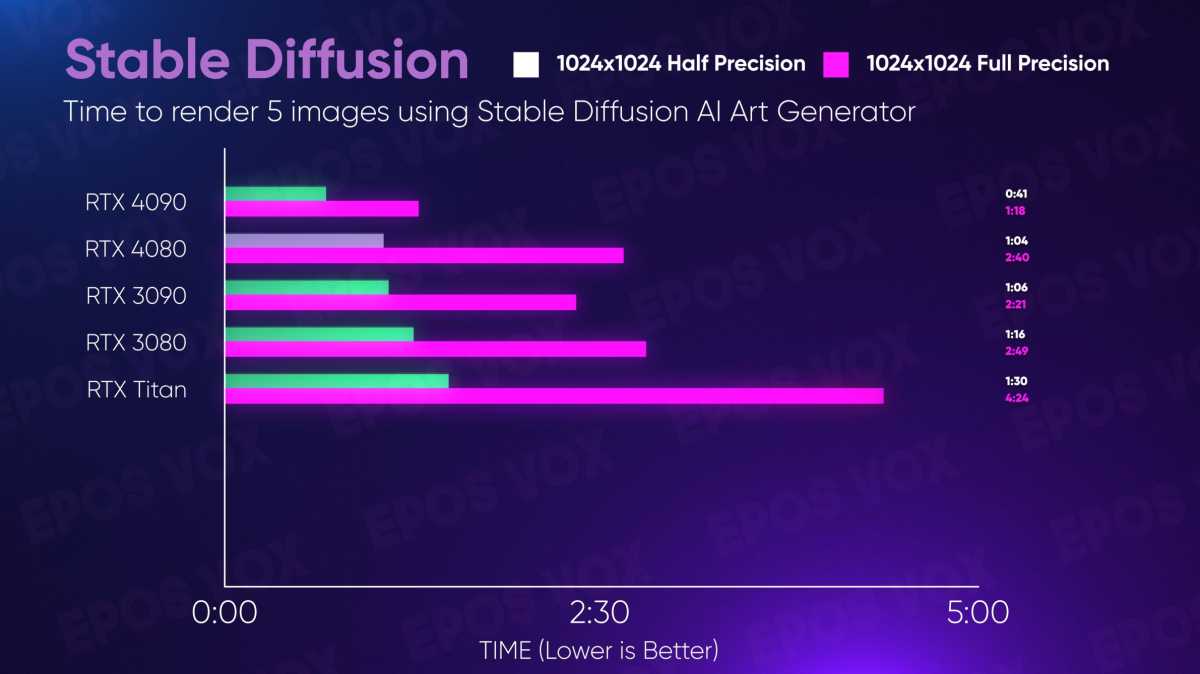

Generating AI art with Stable Diffusion shows the RTX 4080 being a little slower than the RTX 3090 in general, but still beating out the RTX Titan and 3080. People in these communities tell me there’s probably some optimization the Stable Diffusion apps could do to target Lovelace specifically, so hopefully we see that soon. (Who do I send a card to?)

Adam Taylor/IDG

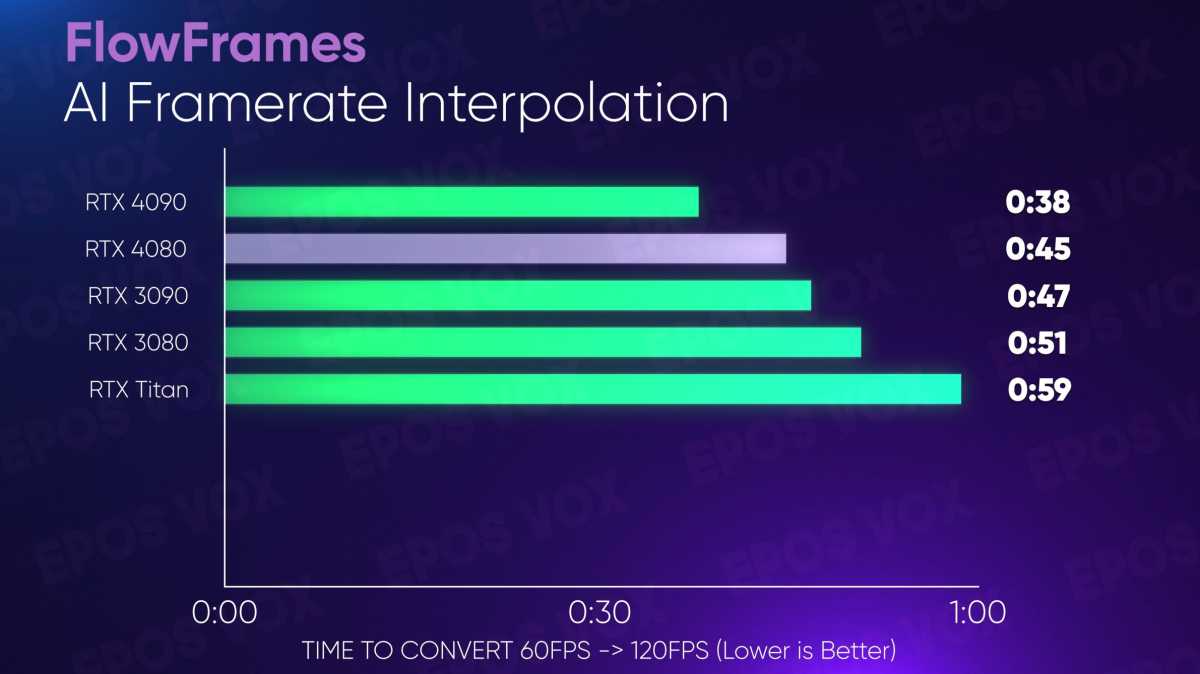

Using FlowFrames to AI-interpolate a 60FPS video to 120FPS for slow motion, the RTX 4080 is just a little faster than the RTX 3090. Nice to see, but Lovelace has built-in hardware for real-time frame interpolation, as used by DLSS 3 Frame Generation, and there simply isn’t any software support for it outside of games. (For video, this feature is called FRUC.) I would love to see it integrated into DaVinci Resolve for applying to footage on the timeline, as well as into OBS Studio for smoothing out stuttery captures. A guy can dream, I guess.

Adam Taylor/IDG

3D & VFX

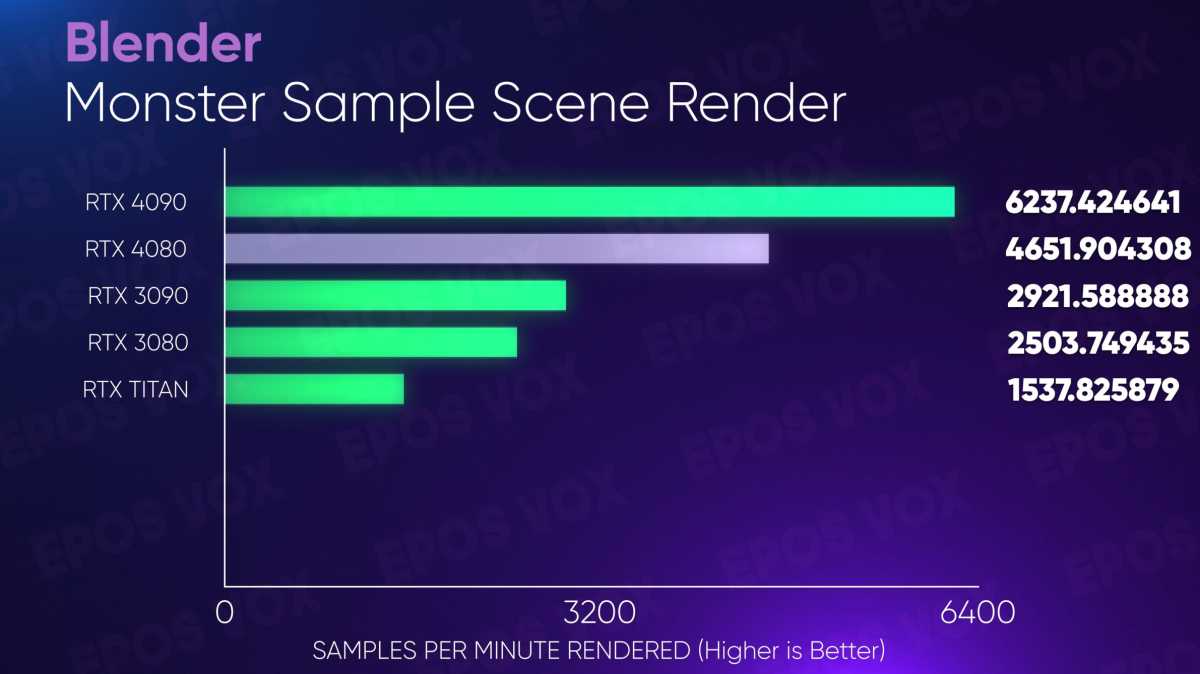

If you’re a 3D or VFX artist working with 3D rendering, Nvidia’s GeForce RTX 4080 is no slouch, either. Now, the bigger, badder RTX 4090 put up insane numbers, doubling the 3090’s performance in ways you can’t fully expect from this step-down GPU, but the 4080 gets close!

Adam Taylor/IDG

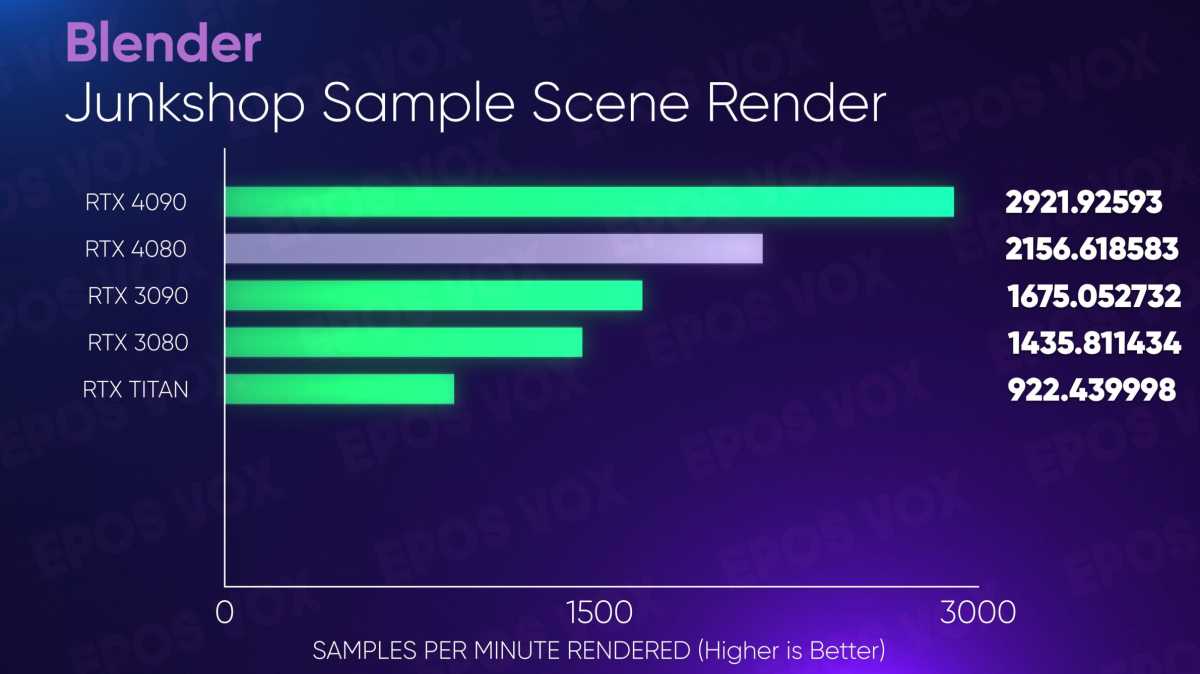

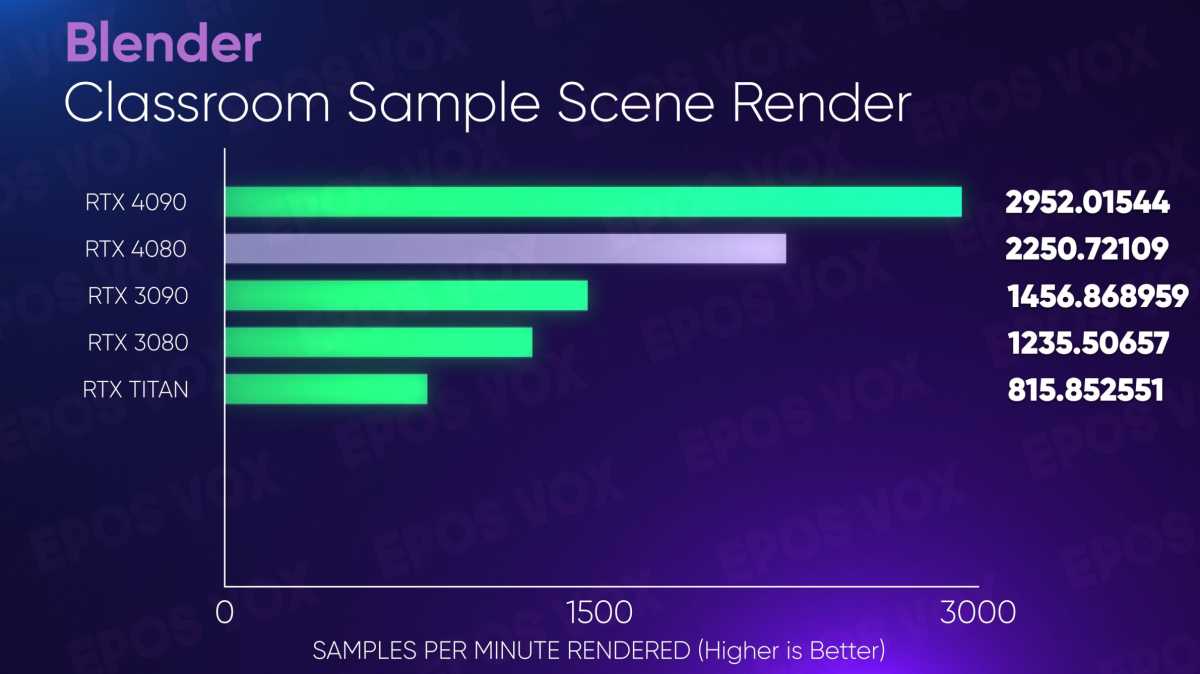

In Blender, benchmarking the Monster scene with the RTX 4080 nets about 60 percent faster speed over the RTX 3090 and 85 percent faster than the 3080. The Junkshop scene benched about 29 percent faster than the 3090 and 50 percent faster than the 3080, while the classic Classroom scene shows 55 percent faster performance than the 3090 and 82 percent faster than the 3080. So while the GeForce RTX 4080 was never going to be as absurdly fast as the 4090, these are some very good gains.
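These “percent faster” figures compare benchmark scores directly. Here’s a minimal sketch of the arithmetic, assuming a higher-is-better score such as Blender Benchmark’s samples per minute; the function name is hypothetical and the inputs below are illustrative, not measured results.

```python
def percent_faster_from_scores(new_score: float, old_score: float) -> float:
    """Percent speedup of new_score over old_score when higher scores are better."""
    if old_score <= 0:
        raise ValueError("old_score must be positive")
    return (new_score / old_score - 1) * 100


# Illustrative inputs only, not measured results:
# a score 1.6x the baseline reads as "60 percent faster".
print(f"{percent_faster_from_scores(160, 100):.0f} percent faster")
```

Because the comparison is a ratio, “60 percent faster than the 3090” and “85 percent faster than the 3080” can both hold for the same 4080 score; they just use different baselines.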

Adam Taylor/IDG

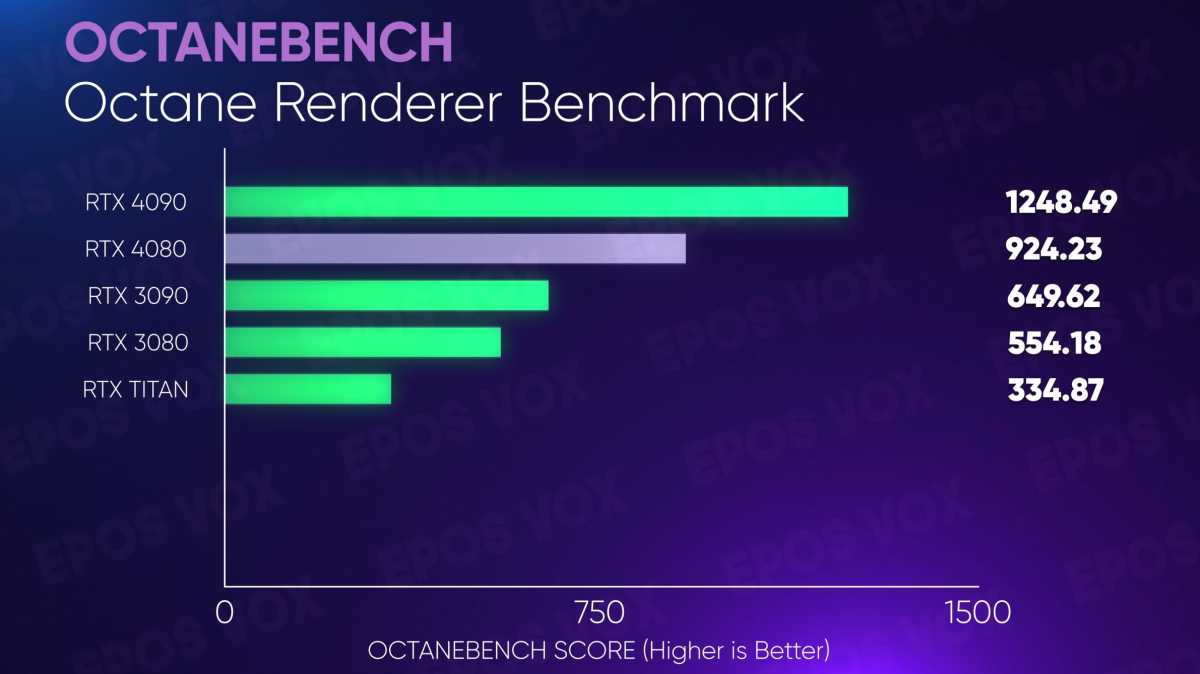

The trend continues into Octane, where the RTX 4080 performs 42 percent faster than the 3090 and 67 percent faster than the 3080. And just for kicks, if you’re still on the RTX Titan… nearly 3X the performance. I’m so sorry that card once cost $2,500.

Adam Taylor/IDG

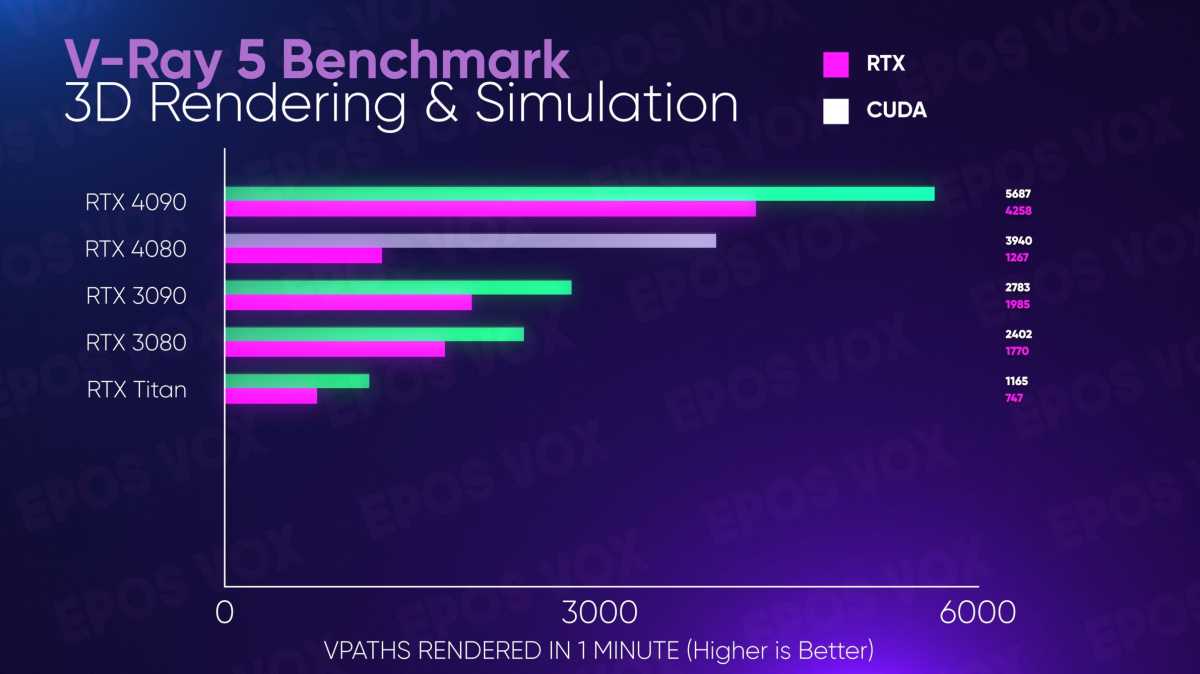

Testing the V-Ray engine with the V-Ray 5 benchmark turned up an oddity. The V-Ray RTX test shows the 4080 still trailing the RTX 4090 by a fair bit while leaping substantially over the older-generation GPUs, but in CUDA? For some reason, I’m consistently scoring below those older cards. Given all the other performance results we’re seeing here, I can’t explain this as anything but a bug.

Adam Taylor/IDG

Streaming

All GeForce RTX 40-series cards have dual video encoder chips to allow for faster and higher quality 4K and 8K recording, as well as 8K 60FPS capture (Ampere caps out at 8K 30FPS). You can read more about this in the streaming section of my RTX 4090 review, but this has been fantastic for my workflow and has me putting 40-series cards in all of my capture and streaming rigs.

I’m recording most things to AV1 now to save on space. Resolve now supports editing it without issue, and the latest Nvidia driver update just improved AV1 decode performance, too! I’m using the extra overall headroom to record my screen captures in H.265 with 4:4:4 chroma subsampling for lossless punch-ins in my tutorials and for beautiful 8K upscales on export. You can view a demo of this here. Screen capture has never looked so good, and I could not do this without risking frame drops and encoder lag, even on my RTX 3090.
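To put the 4:4:4 choice in numbers: chroma subsampling decides how many color samples are stored alongside each pixel’s brightness before compression. Here’s a rough Python sketch assuming a 3840×2160 frame and counting raw samples only; the encoder’s compression means file sizes won’t scale by these same ratios, and the names are illustrative.

```python
from fractions import Fraction

# Resolution of each chroma plane (Cb, Cr) relative to the luma plane.
CHROMA_FRACTION = {
    "4:4:4": Fraction(1, 1),  # full-resolution color
    "4:2:2": Fraction(1, 2),  # half horizontal color resolution
    "4:2:0": Fraction(1, 4),  # half horizontal and half vertical
}


def samples_per_frame(width: int, height: int, scheme: str) -> int:
    """Raw (uncompressed) luma + chroma samples in one frame."""
    luma = width * height
    chroma = 2 * luma * CHROMA_FRACTION[scheme]  # two chroma planes
    return int(luma + chroma)


for scheme in CHROMA_FRACTION:
    total = samples_per_frame(3840, 2160, scheme)
    print(scheme, total, f"{total / (3840 * 2160):g} samples/pixel")
```

4:4:4 keeps twice the raw samples of the common 4:2:0, which is why fine colored text in screen captures stays crisp when you punch in, and also why it asks more of the encoder.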

As with the RTX 4090, recording AV1 in OBS Studio 28.1 (which now supports NVENC AV1 in the public build) has very minimal performance impact on your gameplay, a mere fraction of the hit that H.264 causes. I’ll have deeper testing on this later.

Discord is also getting AV1 streaming support for Go Live later this month. I tested it and it looks great, but there are some quirks to how Discord calls work. Since it’s a “video call,” video is sent directly from host to viewers, without a transcoding server or much of a middleman as you would have with services such as Twitch and YouTube. This means that host and viewers alike need the same codec support.

So if you’re streaming AV1 to Discord and a viewer joins who doesn’t have the right driver or hardware (at this time I’m unclear as to whether it will require Nvidia cards for this update, or just GPU AV1 decode support in general, which would include AMD and Intel), the stream will be forced to revert to H.264. This could be confusing and messy for group calls but is still a big win to have it integrated. I just hope we see Arc and Radeon RDNA3 AV1 support added sooner rather than later—and ideally SVT-AV1 CPU encoding support, as it’s gotten pretty fast.
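The fallback behavior described above boils down to a lowest-common-denominator rule. Here’s a hypothetical model of it in Python. This is not Discord’s actual implementation, just a sketch assuming the host sends one codec to every viewer with no server-side transcoding; the class and function names are illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Viewer:
    name: str
    can_decode_av1: bool  # right driver/hardware for AV1 decode


def pick_stream_codec(host_can_encode_av1: bool, viewers: list[Viewer]) -> str:
    """Hypothetical model: with no transcoding server, every viewer must decode
    whatever the host sends, so one viewer without AV1 forces H.264 for all."""
    if host_can_encode_av1 and all(v.can_decode_av1 for v in viewers):
        return "AV1"
    return "H.264"


call = [Viewer("viewer_a", True), Viewer("viewer_b", False)]
print(pick_stream_codec(True, call))  # one viewer lacks AV1 decode -> H.264
```

A service like Twitch or YouTube sidesteps this by transcoding on its own servers, which is exactly the middleman a direct call doesn’t have.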

Bottom Line: Nvidia’s product stack is confusing

While it felt like a win for Nvidia to “unlaunch” the 12GB 4080—since it never should have been called a 4080 in the first place—doing so makes it so much harder to understand Nvidia’s upcoming product stack in context. The GeForce RTX 4080 out-performs the last-gen RTX 3090 in many cases, which seems like a win for $1,200, but you’re getting shorted on VRAM in ways that do start to matter for high-end work.

Just looking up to the $1,600 GeForce RTX 4090, the 4080’s performance checks out. But the cheaper 12GB… 4070? 4070 Ti? 4080 mini?… that will eventually hit the streets might be nearly as good for this kind of work at a much lower price, given that the RTX 4080’s 16GB of VRAM still limits super-high-end work. But we won’t know until Nvidia’s next products break cover.

It’s hard to suggest buying anything right now until this painfully slow season is over—but waiting puts you in conflict with scalpers and shortages and FOMO. It’s tough. I was honestly hoping the “unlaunch” scenario would drive down the price of both RTX 4080 cards a bit, but I guess that’s not happening. Either way, if your particular content creation workflows don’t require more than 16GB of onboard VRAM, Nvidia’s GeForce RTX 4080 delivers some stunning performance improvements over its predecessors.