Hey you, cyberpunk wunderkind, ready to shift all the paradigms and break out of every box you can find. Do you want to run a super-powerful, mind-boggling artificial intelligence right on your own computer? Well, you can, and you've been able to for a while. But now Nvidia is making it super easy, barely an inconvenience, with a preconfigured generative text AI that runs on its consumer-grade graphics cards. It's called "Chat with RTX," and it's available as a beta right now.

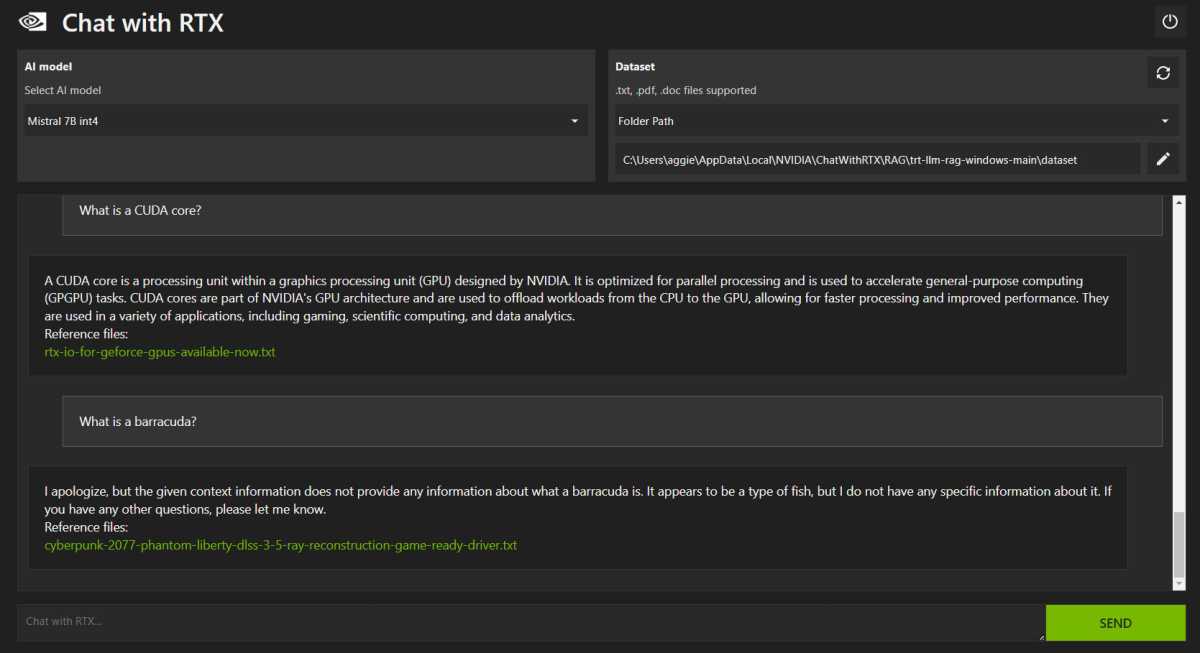

Chat with RTX is entirely text-based, and it comes "trained" on a large database of public text documents owned by Nvidia itself. In its raw form the model can "write" well enough, but its actual knowledge appears to be extremely limited. For example, it can give you a detailed breakdown of what a CUDA core is, but when I asked, "What is a barracuda?" it answered, "It appears to be a type of fish," and cited a Cyberpunk 2077 driver update as its reference. It easily wrote me a seven-verse limerick about a beautiful printed circuit board (not an especially good one, mind you, but one that fulfilled the prompt), yet it couldn't even attempt to tell me who won the War of 1812. If nothing else, it shows how heavily large language models depend on a huge and varied body of input data in order to be useful.

Michael Crider/Foundry

To that end, you can manually extend Chat with RTX's capabilities by pointing it to a folder full of .txt, .pdf, and .doc files to "learn" from. That could be more useful if you need to search through gigabytes of text and get contextual answers at the same time. Shockingly, as someone whose entire work output is online, I don't have much in the way of local text files for it to crawl.

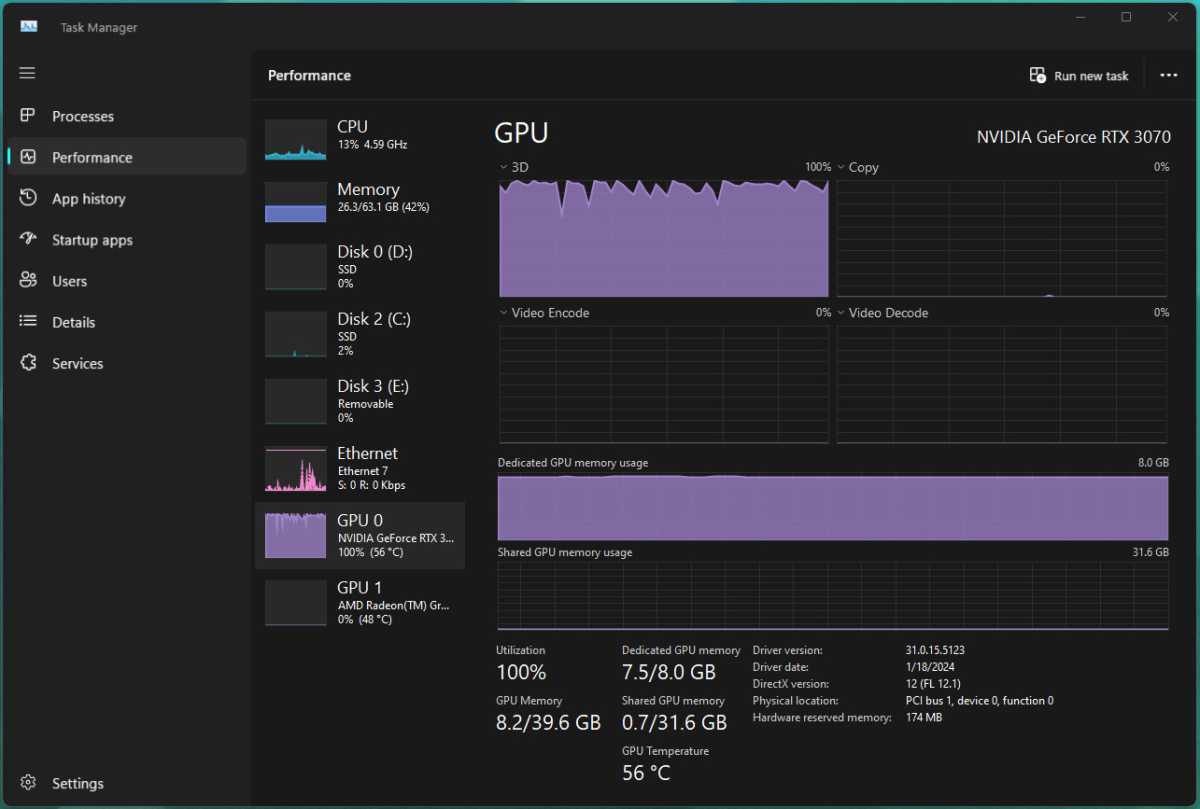

Pictured: My graphics card reading the Bible. Hard.

Michael Crider/Foundry

To try out this potentially more useful capability, I downloaded publicly available text files of various translations of the Bible, hoping to give Chat with RTX some quizzes that my old Sunday School teachers could probably nail. But after an hour, the tool was still churning through less than 300MB of text files and running my RTX 3070 at nearly 100 percent, with no end in sight, so a more qualitative evaluation will have to wait for another day.

To run the beta, you'll need Windows 10 or 11 and an RTX 30- or 40-series GPU with at least 8GB of VRAM. It's also a massive 35GB download for the AI program and its database of default training materials, and Nvidia's file server seems to be getting hit hard at the moment, so just getting this thing onto your PC might be an exercise in patience.