It’s probably fair to say that the Generative Fill feature within Adobe Photoshop has utterly transformed my photo-editing workflow. Today, Adobe just made it better — and spun off several additional features that complement Generative Fill, too.

Adobe added what it calls the “Firefly Image 3 Model” to its Firefly image-generation service as well as to Photoshop, or at least to the Photoshop beta. Firefly is a text-to-image generator, trained on Adobe Stock imagery to avoid complaints that it’s been trained on artists’ work without permission.

It’s difficult to show exactly how Firefly Image 3 differs from earlier models, but this is what Adobe has to say: “This combination delivers considerably better photographic image quality, precise controls to inform your outputs, prompt comprehension to understand complex descriptions, and generation variety to explore different results.”

How I use Generative Fill

You might wonder why a journalist would use AI to alter images. It’s true that I use it sparingly; one of my favorite features within the Windows Photos app has been Spot Fix, which gets rid of any stray specks of dust that might coat a laptop or USB-C hub. That’s now been replaced by Generative Erase.

The broader point is that we need art to illustrate our stories, when art isn’t always available. My editors would prefer that I use real images of products, or logos. But those might be just tiny images tucked away on a website or within a PowerPoint presentation. Could I enlarge them? Sure. But that might make them look grainy or odd. What to do?

In this case, I’ll take a chip image or logo, “remove” it from the presentation, then expand the size. Here’s where Generative Fill comes in: Photoshop’s “crop” tool can actually “expand” the boundaries of an image, which allows me to effectively enlarge the total scene. (To be accurate, Photoshop calls this particular feature Generative Expand.)

Most readers aren’t going to care about the background of an image, as long as the product is portrayed accurately. Generative Fill allows me to create a neutral though unique background in case I need it.

All I need to do is “crop out” from an image and let Generative Fill/Expand do its work. Counterintuitively, I do not enter a prompt; I just let it extend the image.

The other point, too, is that Generative Fill works quickly and accurately. I don’t have to “lasso” an object and maneuver it around, hoping I’ve captured every pixel accurately. Photoshop’s AI understands what (if anything) it needs to remove, and generates a replacement. It’s important to note that other editors and I still frame shots of, say, laptops with an eye toward simply cropping down an existing backdrop, rather than replacing it with something that’s artificially generated. (Teams, Zoom, Google Meet and others do that on video calls!)

What’s new in Photoshop in April 2024: AI, AI, AI

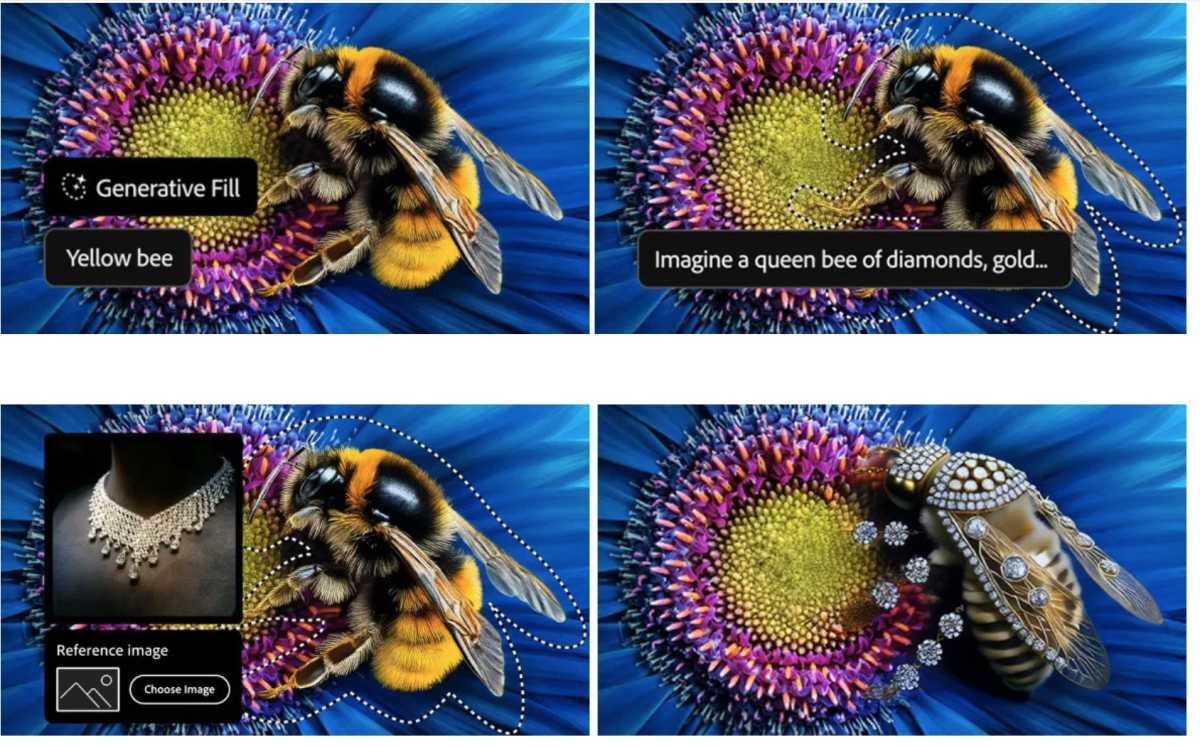

Generative Fill does allow you to specify what Photoshop fills in, such as adding a castle to the background of a beach scene. But it’s always had to work from what it guesses from your prompt. Even suggesting three options can be unsatisfying.

Now, Generative Fill can work from a reference image that you specify, which makes sense: “Make it look like this” is a perfectly valid way of describing something.

Adobe

There’s more. When Adobe’s Generative Fill suggests new AI art, it gives you a choice of three options. Generate Similar works like Reference Image: it allows you to use one of the suggestions as a reference image, and iterate on that.

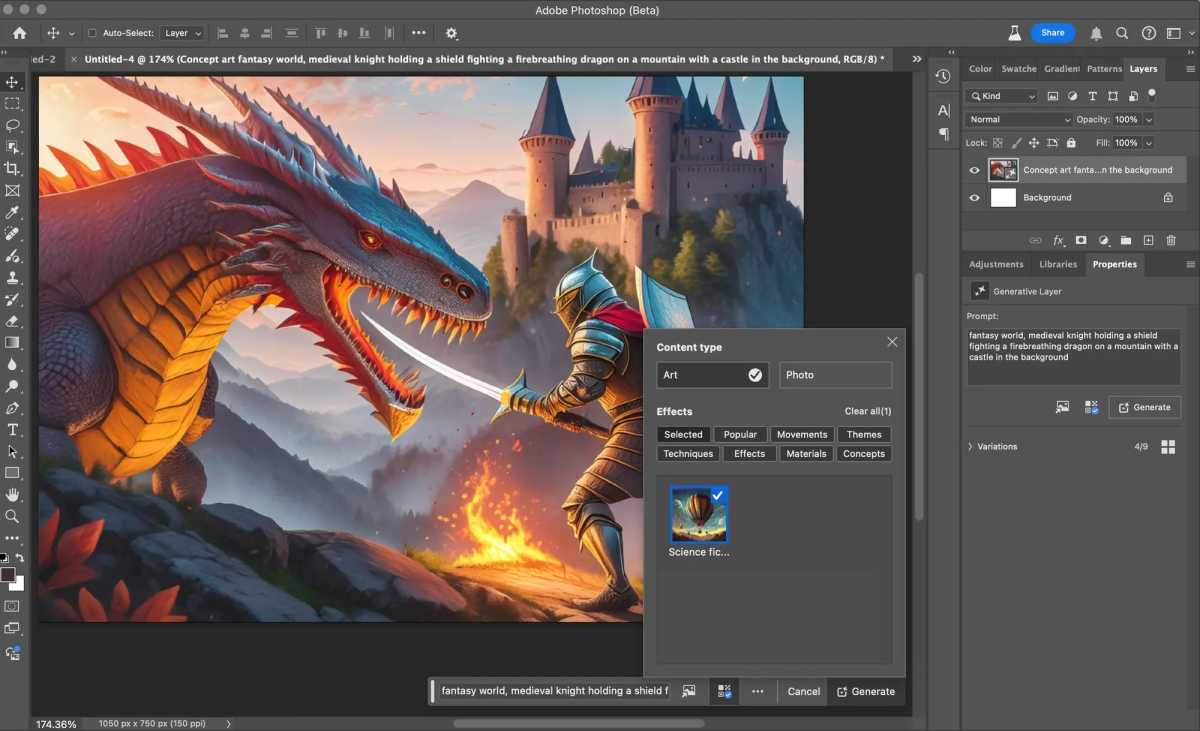

But it’s also true that Adobe has wanted you to use Photoshop to edit an image, not create one wholesale. (You could already do that in Firefly, but would then have to import it. You could also use Generative Fill to add elements to an existing image.) Now you can go to Generate Image in the Contextual Task Bar on a blank canvas or to Edit > Generate Image on the Tools panel and start from scratch.

Adobe

This feels overdue. Otherwise, this looks like many of the other image generation tools on the market. Photoshop even adds “upscaling,” of a sort, through a feature called Enhance Detail. Essentially, this uses AI to produce sharper images.

Finally, Photoshop also allows you to remove and then generate a new background. Essentially, it’s removing the steps of selecting a foreground subject, removing it, creating a new background mask, and adding it in.

For years, I hesitated to use Photoshop for two reasons: one, I wasn’t sure how much I should edit reality, and two, it was so dang complicated and time-consuming to use. Photoshop’s AI additions have been a game-changer in making its tools faster, more efficient and more accessible.

Updated at 2:53 PM to clarify Generative Fill versus Generative Expand.