Imagine a world where video streaming could have significantly higher quality without using up more bandwidth—or have the same quality while having half the impact on your data caps. A world where Twitch streams aren’t a blocky mess and YouTube videos can actually resemble the visual experience of playing the game yourself.

Adam Taylor/IDG

That world is the future promised by AV1, a new, open source video codec that aims to dethrone H.264 as the primary video standard after its nearly 20-year reign. AV1 is typically very difficult to encode, but real-time AV1 encoding is now available to consumers via Intel’s debut Arc Alchemist graphics cards (though the launch has been limited to China so far, with a U.S. release planned for later this summer).

I put Intel’s GPU encoder to the test using a custom Gunnir Arc A380 desktop card to see if it delivers the promises we’ve seen from AV1 thus far, and how it competes against the existing H.264 encoders typically used for live streaming. I also want to explain why all of this matters so much. There’s a lot to this, so let’s dig in.

A future of open media

Before H.264 became the predominant video codec everywhere, online video was a mess. I have many fond memories of my Windows 98 and Windows XP computers being plagued with all manner of video player apps, from QuickTime to RealPlayer to the DivX/Xvid players of dubious legality, all to play AMVs or game trailers downloaded from eMule or LimeWire. Then once YouTube started gaining popularity, we all had to deal with .FLV Flash Video files. It was hard to keep up. H.264 taking over and being accepted by nearly every application, website, and device felt like magic. But as the years (and years) have passed and video standards have aimed at higher resolutions and higher framerates, demand has increased for video codecs that operate with higher efficiency.

While H.264 was made effectively royalty-free, H.265 still has a lot of patents and licensing costs tied up in it – which is why you don’t see many consumer applications supporting it, and virtually no live streaming platforms accept it.

YouTube and Netflix switched over to almost exclusively using VP9 (Google’s own open source video codec), but again, adoption in the consumer application space has been virtually nonexistent, and it seems video streaming giants still desire even higher efficiency.

That’s where the Alliance for Open Media comes in. AOM is a collaborative effort to develop open-source, royalty-free, and flexible solutions for media streaming. It is backed by basically every big corporation involved in web media, including Google, Adobe, Nvidia, Netflix, Microsoft, Intel, Meta, Samsung, Amazon, Mozilla, and even Apple. AOM’s focus is on creating AV1, an ecosystem of open source video and image codecs, and on protecting it through a patent review process and a legal defense fund meant to keep the tech open. Tools for metadata and even image formats have been developed, but what I’m focusing on here is the AV1 Bitstream video codec.

It may seem strange for so many big corporations (and competing ones at that) to be working together on a single project, but ultimately this is an endeavor that benefits all of them. Lower bandwidth costs, a higher-quality product, and easier interoperability for whatever the future of streaming media might hold seem to beat out the benefits of the prior philosophy of everyone developing their own walled-off solutions.

My only personal concern here is with regard to many of these corporations’ histories of pushing anti-consumer DRM for video both online and offline, and how those past actions might influence AV1 implementations.

AV1 in the wild

All this is great, but how do you actually get AV1 videos? Keeping in mind that new codec adoption is typically very slow and AV1 is moving very quickly, all things considered, you can actually watch a fair bit of AV1 online right now.

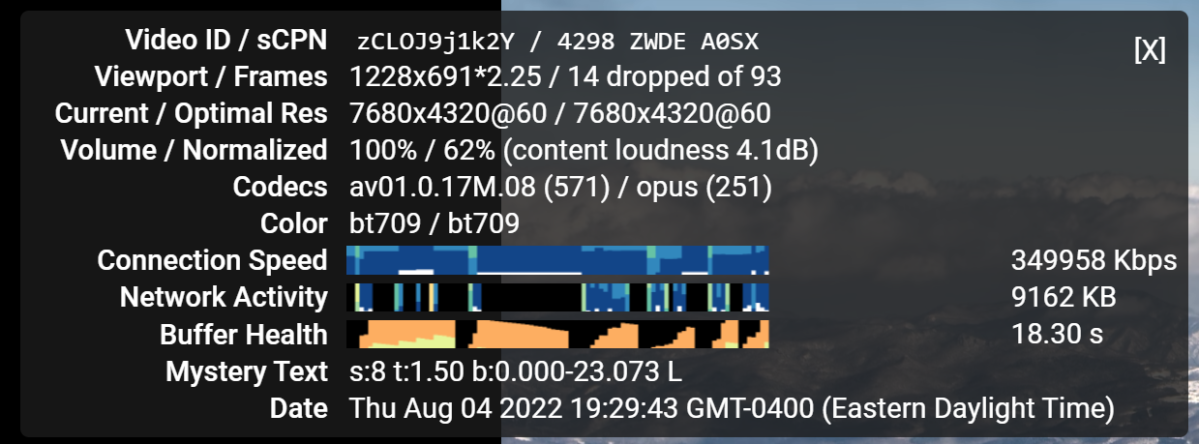

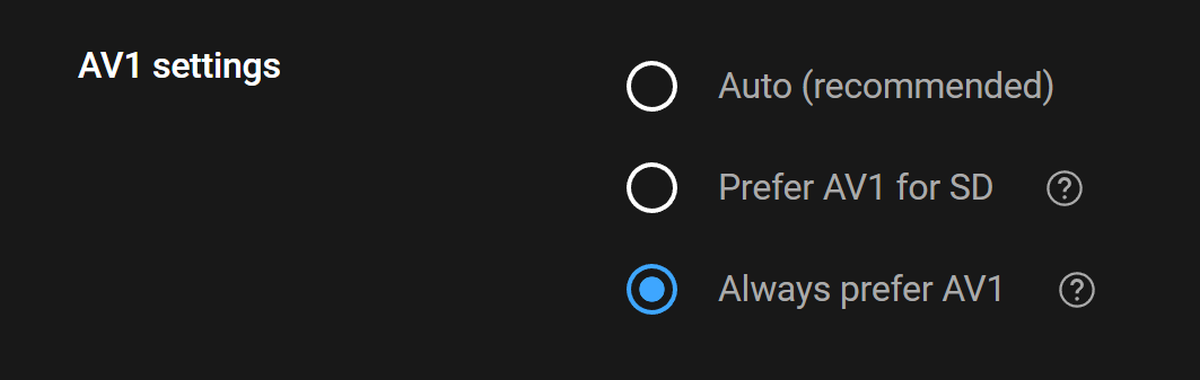

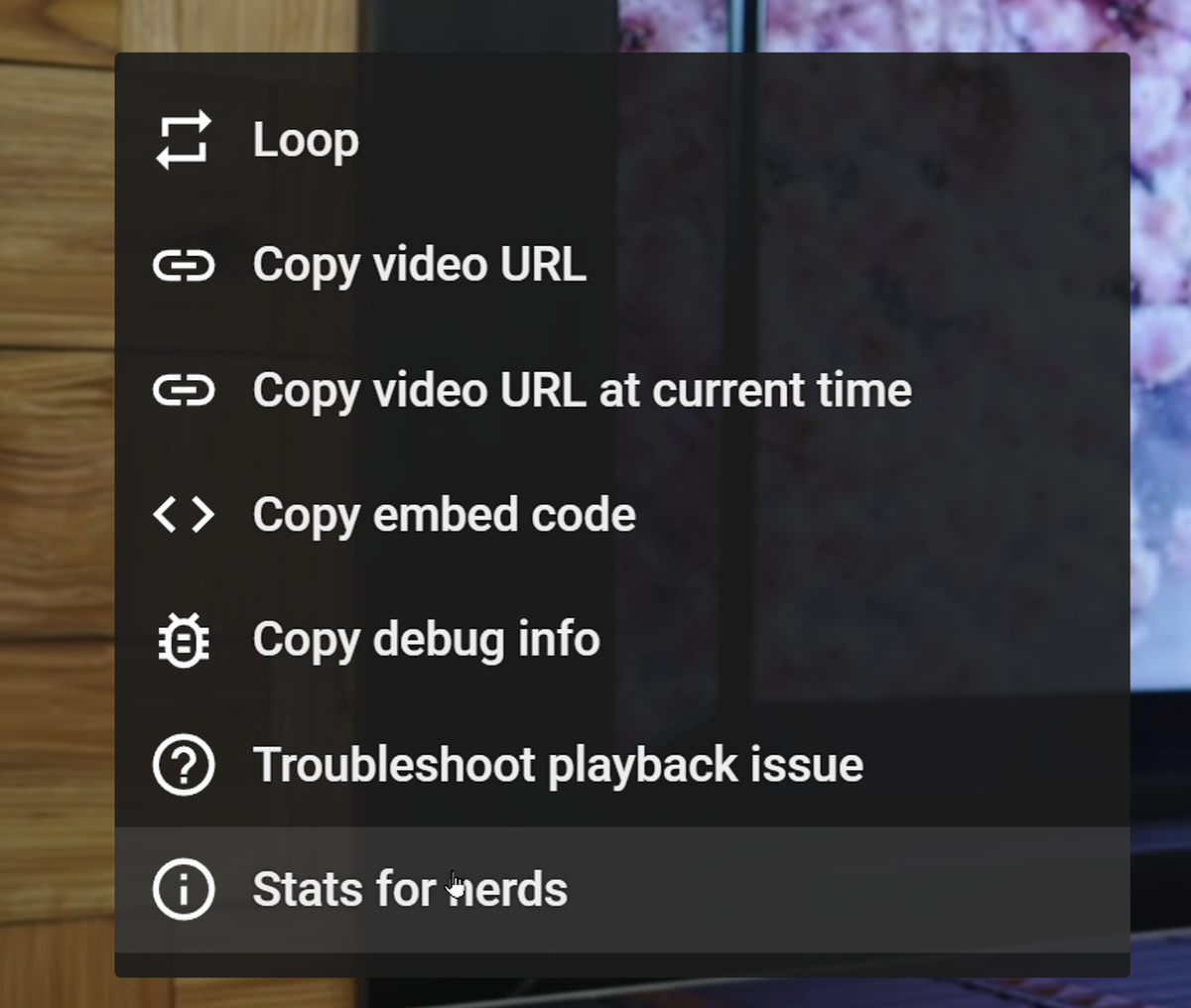

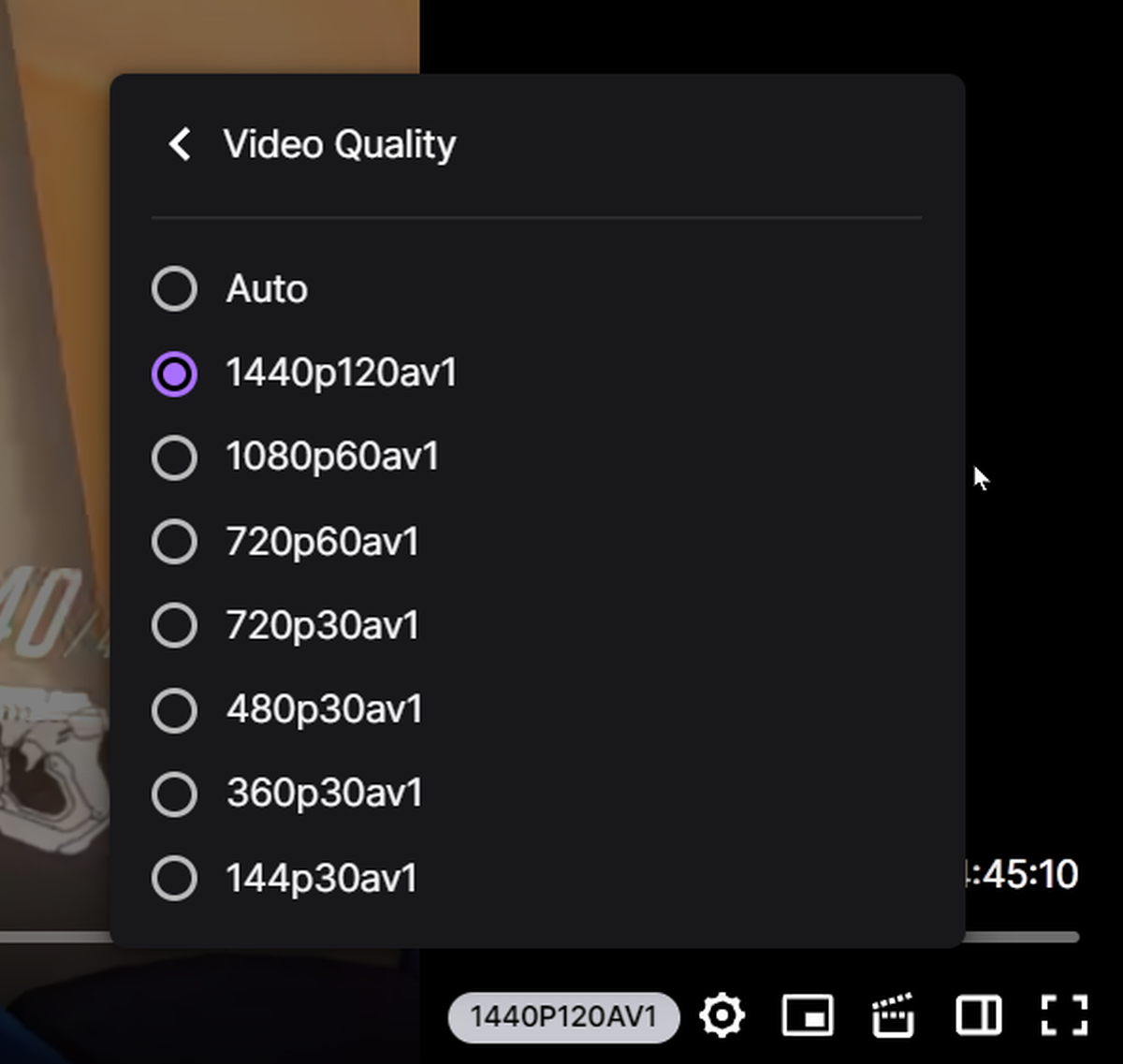

Starting with YouTube, you should go to your YouTube Playback Settings (while logged in) and choose “Always Prefer AV1” to increase your chances of actually being delivered AV1 transcodes of videos. From there, any video at 5K resolution or higher should already have AV1 transcodes ready to play. YouTube also created this “AV1 Beta Launch Playlist” back in 2018 with some sample videos that were originally given AV1 copies for some guaranteed testing. Anecdotally, I’ve been seeing more and more high-traffic videos that I watch on a regular basis playing back in AV1.

The video won’t look any different in the video player, but if you right-click the video while it’s playing and click “Stats for nerds” you should see “av01” next to “Codecs” if all is working properly.
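The “av01” label is the start of a longer codec string. As a rough sketch of how such a string can be read, the snippet below assumes the dot-separated layout used by the AV1 ISO media file format binding (profile, then level and tier, then bit depth). The sample string and the helper name `describe_av1_codec` are illustrative assumptions, not something copied from YouTube, and the function expects only the codec token itself with no trailing text.

```python
def describe_av1_codec(codec: str) -> dict:
    """Decode the first four fields of an AV1 codec string like 'av01.0.08M.08'."""
    parts = codec.strip().split(".")
    if len(parts) < 4 or parts[0] != "av01":
        raise ValueError(f"not an AV1 codec string: {codec!r}")

    profiles = {0: "Main", 1: "High", 2: "Professional"}
    tiers = {"M": "Main", "H": "High"}

    level_idx = int(parts[2][:2])  # two-digit sequence level index
    return {
        "profile": profiles[int(parts[1])],
        # The level index maps to level X.Y with X = 2 + idx // 4 and Y = idx % 4.
        "level": f"{2 + level_idx // 4}.{level_idx % 4}",
        "tier": tiers[parts[2][2]],
        "bit_depth": int(parts[3]),
    }


# Hypothetical example string, chosen for illustration only.
info = describe_av1_codec("av01.0.08M.08")
print(info)
```

Anything that does not start with “av01” (a VP9 or H.264 codec string, for example) raises a `ValueError`, which is the scripted equivalent of not seeing “av01” in the stats overlay.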

Actually being able to decode AV1 can be a mixed bag. Most modern quad-core PCs from 2017 or later shouldn’t have any issue decoding 1080p AV1 footage on the CPU. But once you go beyond 1080p, you’ll want hardware-accelerated decoding. Nvidia’s GeForce RTX 30-series graphics cards (such as the RTX 3050) support AV1 decode, as do AMD’s Radeon RX 6000-series GPUs and even the iGPUs on Intel 11th Gen and newer CPUs. If you have this hardware, make sure your drivers are up to date and download the free AV1 Video Extension from the Microsoft Store, then refresh your browser. Depending on your graphics hardware, you’ll even be able to see the “Video Decode” section of your system doing the work in Windows Task Manager. (Note: All of this applies to Windows 10 and 11 only; Windows 7 is not supported.)

Netflix is already streaming AV1 for some films to compatible devices as lots of TVs, game consoles (including the older PlayStation 4 Pro), and some mobile devices already support it. Netflix’s streams are proving to be a wonderful showcase for AV1’s “Film Grain Synthesis” feature — the ability for the encoder to analyze a video file’s film grain, remove it to cleanly compress the footage, and then provide instructions to the decoder to re-create it faithfully, without wasting unnecessary bits on the grain.
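To make the film grain idea more concrete, here is a deliberately simplified toy in Python. It is not how AV1 does it (the real feature uses a more elaborate grain model); it only mirrors the three steps described above: strip the grain, send a tiny description of it, and re-create similar-looking grain at playback. The box blur “denoiser”, the single `grain_sigma` parameter, and all function names are assumptions made for illustration.

```python
import random
import statistics


def box_blur(samples, radius=2):
    """Crude denoiser: average each sample with its neighbours."""
    out = []
    for i in range(len(samples)):
        lo, hi = max(0, i - radius), min(len(samples), i + radius + 1)
        out.append(sum(samples[lo:hi]) / (hi - lo))
    return out


def encode_with_grain_params(noisy):
    """Return the cleaned signal plus a one-number summary of the removed grain."""
    clean = box_blur(noisy)
    residual = [n - c for n, c in zip(noisy, clean)]
    return clean, {"grain_sigma": statistics.pstdev(residual)}


def decode_with_grain(clean, params, seed=0):
    """Re-create grain of similar strength instead of storing the original grain."""
    rng = random.Random(seed)
    return [c + rng.gauss(0.0, params["grain_sigma"]) for c in clean]


# A flat grey row of pixels with synthetic "film grain" added on top.
rng = random.Random(42)
source = [128.0 + rng.gauss(0.0, 4.0) for _ in range(2000)]

clean, params = encode_with_grain_params(source)
restored = decode_with_grain(clean, params)
print(f"grain strength sent to the decoder: {params['grain_sigma']:.2f}")
```

The restored row does not match the source sample for sample, and it is not meant to. The grain is statistically similar, which is the whole trick: the encoder spends its bits on the clean picture and only a handful of numbers on the grain.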

Twitch’s former Principal Research Engineer Yueshi Shen also shared an AV1 demo with me back in 2020 showcasing what AV1 could bring to Twitch streaming. Available here, you can view mostly blocking-free gameplay in 1440p 120FPS delivered with only 8Mbps of bandwidth, 1080p 60FPS using only 4.5Mbps, and 720p 60FPS using only 2.2Mbps. While not a real-world live streamed test, it’s still seriously impressive given the poor quality that typical H.264 encoders would produce at those bitrates in normal Twitch streams.
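For a sense of how tight those bitrates are, a quick back-of-the-envelope calculation shows the average number of bits available per pixel per frame. This sketch assumes standard 16:9 frame sizes (2560x1440, 1920x1080, 1280x720) and treats 1Mbps as 1,000,000 bits per second; the function name is made up for the example.

```python
def bits_per_pixel(bitrate_mbps: float, width: int, height: int, fps: int) -> float:
    """Average encoded bits available for each pixel of each frame."""
    return bitrate_mbps * 1_000_000 / (width * height * fps)


# Bitrates from the demo; frame sizes are assumed 16:9 resolutions.
demo_streams = {
    "1440p 120FPS at 8 Mbps": (8.0, 2560, 1440, 120),
    "1080p 60FPS at 4.5 Mbps": (4.5, 1920, 1080, 60),
    "720p 60FPS at 2.2 Mbps": (2.2, 1280, 720, 60),
}

for label, args in demo_streams.items():
    print(f"{label}: {bits_per_pixel(*args):.3f} bits per pixel")
```

For comparison, uncompressed 8-bit 4:2:0 video carries 12 bits per pixel, so each of these streams is working with a fraction of a percent of the raw data.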

Shen originally projected Twitch to have full AV1 adoption by 2025, with hopes of big-name content streaming in it as early as this year or 2023. Hopefully, with consumer-accessible encoders now available, Twitch can start turning on these new features very soon.

Did Intel deliver?

AV1 encoders that run on the CPU have been available for quite a while now, but they’ve been very difficult to run, taking many hours to process a sample even on high core count machines. Performance has been improving steadily over the past couple of years, and two encoder options (SVT-AV1 and AOM AV1) are now available for use as of OBS Studio version 27.2. As I covered when the OBS update was released, these are still tough to run in real-time, but they are usable and the first step towards consumer AV1 video.

Most of the streaming and content creator scene has instead been waiting on hardware-accelerated encoders to show up on next-generation graphics cards. As mentioned, Intel, AMD, and Nvidia all added hardware AV1 decoders to their previous-generation hardware, and it’s assumed to be all but certain that at least Nvidia will have an AV1 hardware encoder on RTX 4000 hardware, if not AMD with RX 7000 as well. However, despite many delays of its own, Intel is first to market with GPU AV1 encoders on the new Arc Alchemist line of graphics cards.

The first thing I wanted to investigate with the new A380 GPU was how well the AV1 encoding actually held up versus currently-available options. While AV1 as an overall codec is incredibly promising, the results you get from any codec depend on the encoder implementation and the sacrifices necessary to run in real-time. GPU encoders run on fixed-function hardware within the GPU, allowing for video encoding and decoding with minimal impact to normal 3D workloads, such as game performance, but they don’t always produce “ideal” results.

I’ve previously covered how hardware H.264 encoders have improved across GPU generations. Early implementations of Nvidia’s NVENC, for example, did not produce anywhere near as high a quality as the company’s newer cards can. I also recently examined how encoder quality can be somewhat improved through software updates (such as AMD’s recent updates to its AMF encoder). All of this means that while there’s plenty of room to be hyped for the first accessible AV1 encoder, there’s also room to be disappointed by this very first iteration, with many more to come.

The most reliable way I’ve found to quantitatively measure video quality has been Netflix’s VMAF (Video Multimethod Assessment Fusion). This is an algorithm that assesses video quality in a way that very closely matches how actual human viewers perceive video quality at a given distance and size, rather than relying on pure noise measurements such as PSNR. Netflix has been building this technology (and blogging about it) for quite a few years now, and it’s gotten to a point where it very reliably measures differences, which helps illustrate what I would typically try showing you with repetitive side-by-side screenshots.
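For contrast, here is roughly what a “pure noise measurement” looks like. PSNR boils down to the average squared difference between the original and compressed pixel values, expressed in decibels. The tiny pixel lists below are invented for the example; a real measurement would run over every pixel of every frame.

```python
import math


def psnr(reference, distorted, max_value=255):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if len(reference) != len(distorted):
        raise ValueError("inputs must be the same length")
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs: no error at all
    return 10 * math.log10(max_value ** 2 / mse)


# Made-up 8-bit pixel values, purely for illustration.
reference = [50, 60, 70, 80]
distorted = [52, 58, 71, 79]
score = psnr(reference, distorted)
print(f"PSNR: {score:.2f} dB")
```

Notice that the formula has no idea where in the frame an error sits or whether a viewer would ever spot it. That blind spot is what a perceptual metric like VMAF is designed to address.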

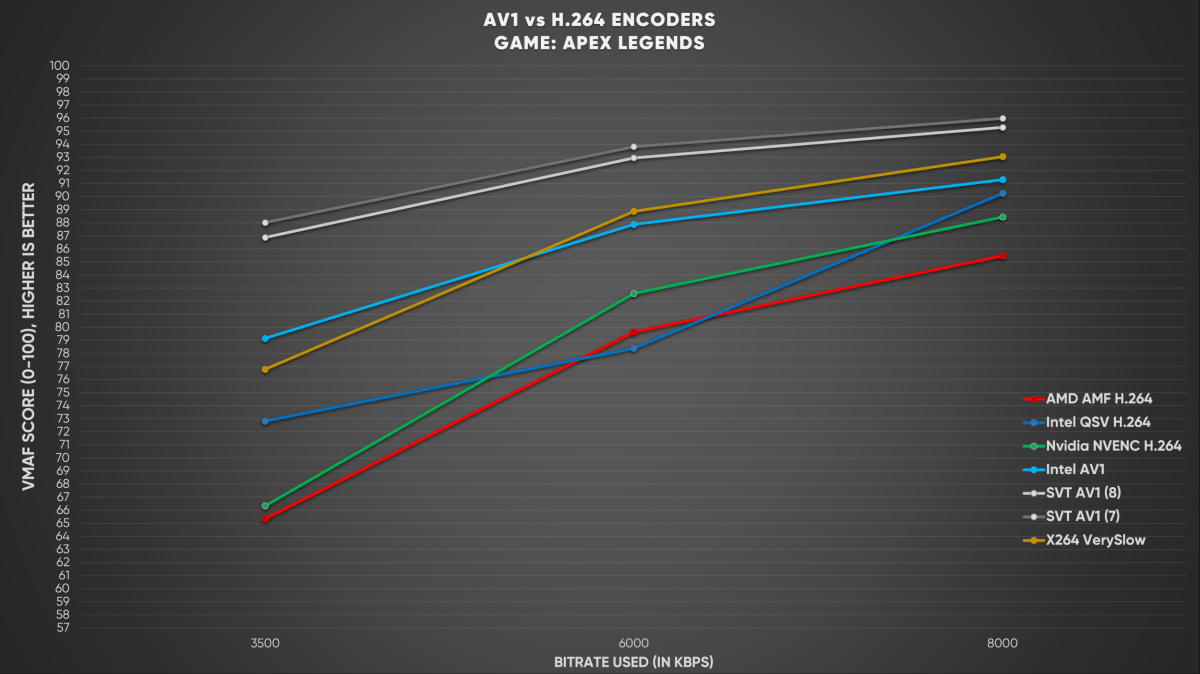

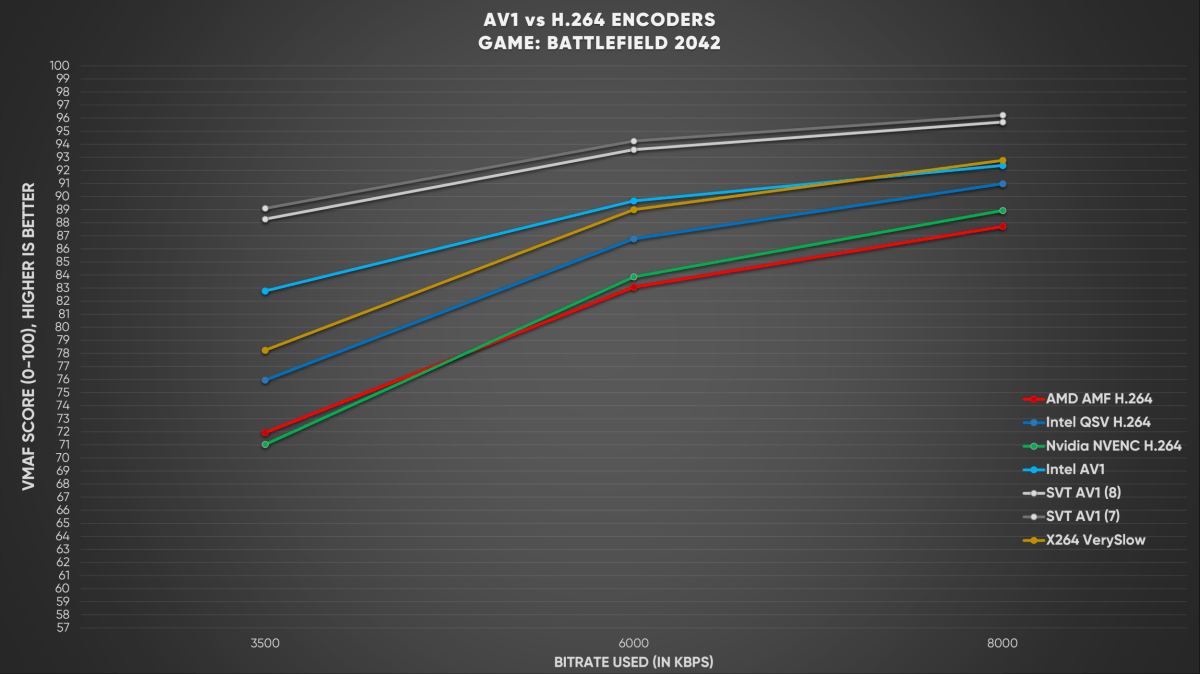

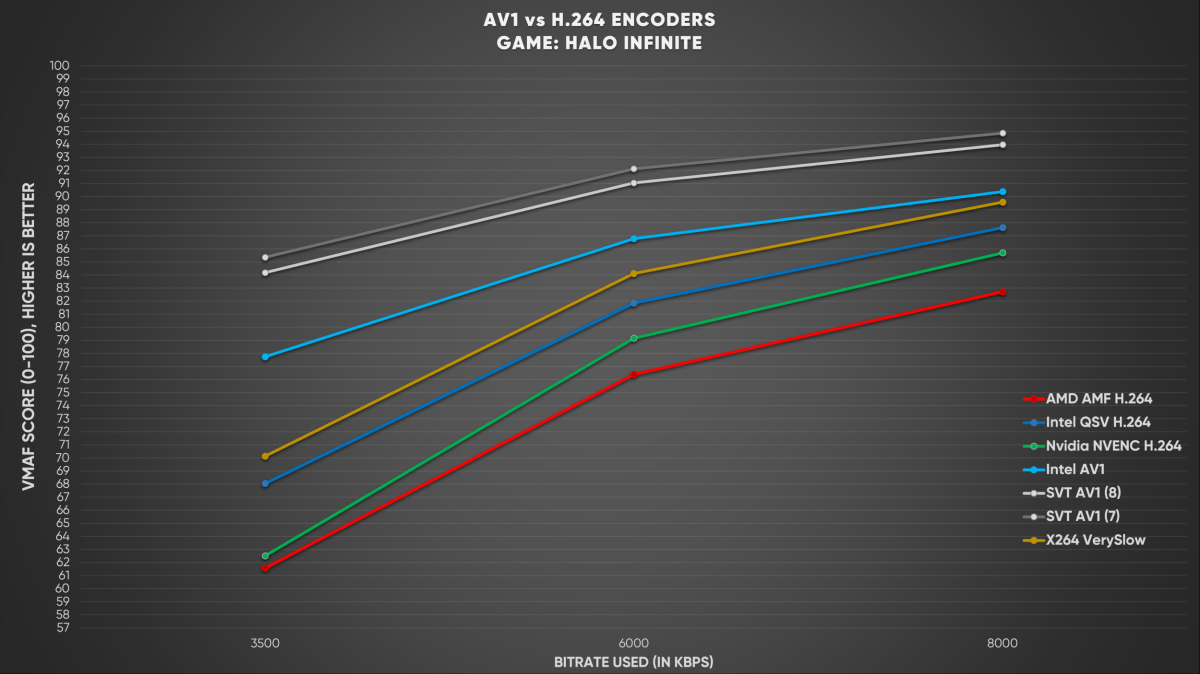

I’m constantly testing a massive library of lossless gameplay and live action video samples, but for ease of presentation, I want to focus on a few games in the FPS genre. The fast-paced camera movements, highly-detailed graphics with lots of particle effects, and many HUD elements combine to provide a kind of “worst case scenario” for video encoding, one that just about every Twitch streamer has struggled with.

My focus is on 1080p 60FPS video, with three primary bitrates: 3500kbps, 6000kbps, and 8000kbps. 3500kbps (or 3.5Mbps) is the lowest bitrate typically advised for 1080p (at least, prior to AV1), 6000kbps is the soft “cap” for Twitch streams, and 8000kbps is an unofficial bandwidth cap that many are able to send to Twitch without issue. Twitch is the focus here because the site provides “Source” quality streams that don’t go through the secondary stage of compression that YouTube streams do, a stage that removes some of the benefits of higher-quality encoders at lower bitrates.
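To put those three bitrates in data-cap terms, the arithmetic below converts each one into gigabytes per hour of video. It is a simple sketch that counts the video stream only (no audio or container overhead) and uses decimal units, with 1kbps as 1,000 bits per second and 1GB as one billion bytes.

```python
def gigabytes_per_hour(bitrate_kbps: int) -> float:
    """Data transferred by one hour of video at a constant bitrate."""
    bits = bitrate_kbps * 1000 * 3600  # bits sent in one hour
    return bits / 8 / 1e9              # bits -> bytes -> gigabytes


for kbps in (3500, 6000, 8000):
    print(f"{kbps} kbps is about {gigabytes_per_hour(kbps):.3f} GB per hour")
```

That works out to roughly 1.6GB, 2.7GB, and 3.6GB per hour respectively, which is why quality per bit matters so much to anyone streaming or watching on a capped connection.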

Here, I compare the usual hardware H.264 encoders from Intel, Nvidia, and AMD against the CPU x264 encoder running in the “VerySlow” CPU usage preset, which can’t be run in real-time but is often considered the benchmark for quality to aim for. I also include Intel’s AV1 GPU encoder and two SVT-AV1 encode presets that one could “reasonably” (on a high-end CPU like Threadripper) encode in real-time.
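Every encode in a comparison like this has to be scored against the lossless source. One common way to do that, sketched below, is FFmpeg’s `libvmaf` filter, which takes the compressed file as the first input and the reference as the second. This assumes an FFmpeg build with libvmaf enabled; the file names are placeholders, and this is not necessarily the exact pipeline used for the graphs in this article.

```python
import shlex


def vmaf_command(distorted: str, reference: str, log_path: str = "vmaf.json") -> list:
    """Build an FFmpeg command that scores `distorted` against `reference`."""
    return [
        "ffmpeg",
        "-i", distorted,   # first input: the encode being judged
        "-i", reference,   # second input: the lossless source
        "-lavfi", f"libvmaf=log_path={log_path}:log_fmt=json",
        "-f", "null", "-",  # discard video output; only the score is wanted
    ]


# Placeholder file names for illustration.
cmd = vmaf_command("arc_av1_6000k.mkv", "lossless_source.mkv")
print(shlex.join(cmd))
```

The list can be handed to `subprocess.run(cmd, check=True)` on a machine with FFmpeg installed. Both inputs need to share the same resolution and frame rate for the scores to be meaningful.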

These results are, well, fascinating.

At 6 and 8Mbps, Intel Arc’s AV1 encoder goes back and forth between scoring higher than x264 VerySlow and scoring slightly lower, while still scoring impressively higher than even the best GPU H.264 encoders. (VMAF scores operate on a 0-100 scale, with 100 being a perfect match for the lossless/uncompressed source material.) On its own, this is already impressive enough. If you’re a game streamer and you use a dual PC streaming setup or have the PCIe lanes and slots to add a second GPU to your machine, once AV1 is enabled on Twitch you would be able to stream at significantly higher quality than most of what’s on the entire website, at the same bitrate.

But if we look over at the lower 3.5Mbps bitrate, Intel’s AV1 encoder soars above all of the H.264 encoders, including x264 VerySlow. And in some of the game tests, AV1 encoded on the Arc A380 at 3.5Mbps scores higher than most of the H.264 options do at 6Mbps (nearly double the bandwidth).

Theoretically, if Twitch enabled AV1 streaming right now, a streamer using an Arc A380 to encode their broadcast would enable everyone involved in this process to cut their bandwidth in half — the streamer themselves, the viewer, and Twitch/Amazon — without taking a hit in quality. It also means you can immediately get a jump in quality without changing any network requirements on the streamer’s end.
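As a rough check on what that saving looks like, the sketch below works through the two bitrates actually tested, 6Mbps H.264 versus 3.5Mbps AV1. The viewer count is a hypothetical number picked only to show how the saving scales on the platform’s side.

```python
def bandwidth_saving(old_mbps: float, new_mbps: float) -> float:
    """Fraction of bandwidth saved by moving from old_mbps to new_mbps."""
    return 1 - new_mbps / old_mbps


h264_mbps, av1_mbps = 6.0, 3.5
saving = bandwidth_saving(h264_mbps, av1_mbps)
print(f"Per-stream saving: {saving:.1%}")

# Hypothetical audience size, for illustration only.
viewers = 1000
saved_gbps = (h264_mbps - av1_mbps) * viewers / 1000
print(f"Delivery bandwidth saved across {viewers} viewers: {saved_gbps:.1f} Gbps")
```

For this specific pair of bitrates the reduction is a little over 40 percent. A true halving would mean AV1 matching 6Mbps H.264 at 3Mbps, which was not one of the tested bitrates.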

Moving on from data to actual real-world visuals, the results are still just as impressive.

At 6 and 8Mbps, Intel’s AV1 encoder presents “more of the same” at a glance compared to H.264 encoders. There’s a tiny amount of added sharpness, though not enough to stand out. What is very noticeable is the lack of blocking or artifacting in areas with big light changes or shadows.

Again, you won’t be blown away comparing results at these higher bitrates, but it is an improvement. AV1 seems to do a really great job at more smoothly blending together areas where detail has to be sacrificed, rather than creating pixelated-looking blocking that you’re used to seeing. Sometimes at a certain distance you might even feel a section of the H.264 footage looks “sharper” than the AV1 footage due to the extra “crunch” giving the illusion of added detail, but zooming in, that detail isn’t actually there.

At a mere 3.5Mbps, Intel’s AV1 encoder does start to exhibit a small amount of macro-blocking in gradients, but unlike H.264 it avoids blocking in detailed sections of the screen, and it really delivers a view you wouldn’t think possible at such a low bitrate.

Looming competition

My VMAF graphs also included a couple of additional data points that scored significantly higher than Intel’s AV1 encoder. These are two encodes using the SVT-AV1 CPU encoder. This encoder uses “Presets” (similar to x264) numbered so that lower numbers are slower to encode but deliver higher quality (akin to going slower with x264), while higher numbers are faster to encode with worse quality. In my testing, even on a 32-core Threadripper CPU, 8 and 9 were the only realistic presets to encode with in real-time. So keeping with my theme of slightly-unobtainable benchmark quality, I included SVT presets 7 and 8 on my graphs.
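If you want to try these presets yourself, FFmpeg exposes both encoders through the same `-preset` option: named presets for `libx264` and numeric ones for `libsvtav1`. The sketch below builds the two command lines. It assumes an FFmpeg build that includes both encoders, and the file names and the helper `encode_command` are placeholders rather than the exact settings used in this testing.

```python
import shlex


def encode_command(source: str, output: str, encoder: str, preset, bitrate_kbps: int) -> list:
    """Build an FFmpeg command for a video-only encode at a target bitrate."""
    return [
        "ffmpeg",
        "-i", source,
        "-c:v", encoder,
        "-preset", str(preset),     # x264: named presets; SVT-AV1: lower number = slower
        "-b:v", f"{bitrate_kbps}k",
        "-an",                      # drop audio so only video is compared
        output,
    ]


# Placeholder file names for illustration.
x264_cmd = encode_command("lossless_source.mkv", "x264_veryslow.mkv", "libx264", "veryslow", 6000)
svt_cmd = encode_command("lossless_source.mkv", "svtav1_p8.mkv", "libsvtav1", 8, 6000)

print(shlex.join(x264_cmd))
print(shlex.join(svt_cmd))
```

Swapping the SVT-AV1 preset from 8 to 7 is a one-character change here, but expect a noticeably longer encode in exchange for the quality bump.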

And as you can see, while there are marginal differences between these two specific presets, they both out-score Intel’s AV1 encoder by a long shot. Much like how x264 VerySlow far outperforms any of the GPU H.264 encoders, this is to be expected.

I thought it was important to include these for two reasons. First, if you’re just encoding videos for upload to YouTube, archives, and so on, you can use these slower encoder presets with AV1 and significantly increase your bitrate efficiency (saving on space and upload times, or increasing quality for the file size you would have already committed to). Secondly, I’m hoping it’s a preview of what we have to look forward to from GPU AV1 encoders over the next couple of years.

While Intel’s QuickSync Video H.264 encoder (on both the Arc GPUs and 12th Gen iGPUs) is currently leading Nvidia and AMD in quality, previous generations lagged behind Nvidia (and even AMD if we go back far enough) which means if Nvidia launches RTX 4000 with an AV1 encoder, it could perform at least somewhat better than Intel’s offering. Plus, as mentioned, this is just the first iteration. As hardware improves, so will the encoder. I really want Nvidia and AMD to compete on the AV1 encoder front, delivering users even higher quality – but I have to say I’m pretty stoked with where our starting line is here in Intel’s Arc graphics cards.

The future of video streaming is very bright, and significantly less blocky. I’m uploading all of my YouTube videos in AV1 moving forward and look forward to streaming in the new format as soon as platforms allow.

[Disclosure: My Gunnir Photon A380 graphics card unit was sampled to me by Intel for inclusion in my usual encoder quality analysis content on my YouTube channel. I was not paid by Intel for any coverage, am under no obligation to say anything specific, and Intel has not seen anything I post about the GPU prior to publishing. I’ve also been sent GPU samples by Nvidia and AMD for this same purpose.]