Waymo’s plan to launch a transportation service with self-driving cars in Phoenix, Arizona, later this year has a bit more riding on it than usual. Phoenix is just 10 miles from Tempe, Arizona, where a self-driving test car from rival Uber killed a pedestrian in March of this year. No pressure, Waymo.

The company made a strong case Tuesday morning at Google I/O for how AI technology made its self-driving cars ready to hit the streets solo. But one of its strongest examples also showed how a self-driving car could intelligently scare the bejeezus out of a rider. It’ll be interesting to see how perceptions of safety work their way into the self-driving car experience.

Before we get to the scary driving incident, let’s look at Waymo’s side of the story. At the Tuesday morning keynote, CEO John Krafcik emphasized how advanced Waymo was compared to the competition. “Waymo is the only company in the world with a fleet of fully self-driving cars, with no one in the driver’s seat, on public roads,” he pointed out.

Krafcik highlighted the positive experiences of participants in Waymo’s Early Rider Project, in which residents of Phoenix, Arizona, rode in self-driving test vehicles. Videos showed a mother and child, a pair of selfie-ready teens, and a sleepy man, among others, laughing, chatting, or even napping.

Google

Waymo CEO John Krafcik highlighted Jim and Barbara, an elderly couple, as the kind of people who would benefit from the company’s self-driving cars.

Krafcik pointed to the real-world example of Jim and Barbara, an elderly couple who didn’t want to lose their mobility even if they could no longer drive. “These are the people we’re building it for,” he stressed.

Waymo is serious: No driver, no minder

Waymo is committed to a driverless experience for its service. “A fully self-driving car will pull up,” Krafcik promised, “with no one in the driver’s seat, to whisk them away to their destination.”

As for safety, Krafcik explained how Google’s technology was a fundamental advantage for Waymo. “We can enable this future because of the breakthroughs and investments we’ve made in AI,” he said.

Krafcik cited a project from several years earlier in which researchers used Google’s powerful neural networks to cut pedestrian-detection errors from about 1 in 4 to about 1 in 400, a hundredfold improvement, in a matter of months. The company didn’t say what its current error rate is, but it would presumably have to be far better than 1 in 400 for its cars to be ready for public roads.

Google

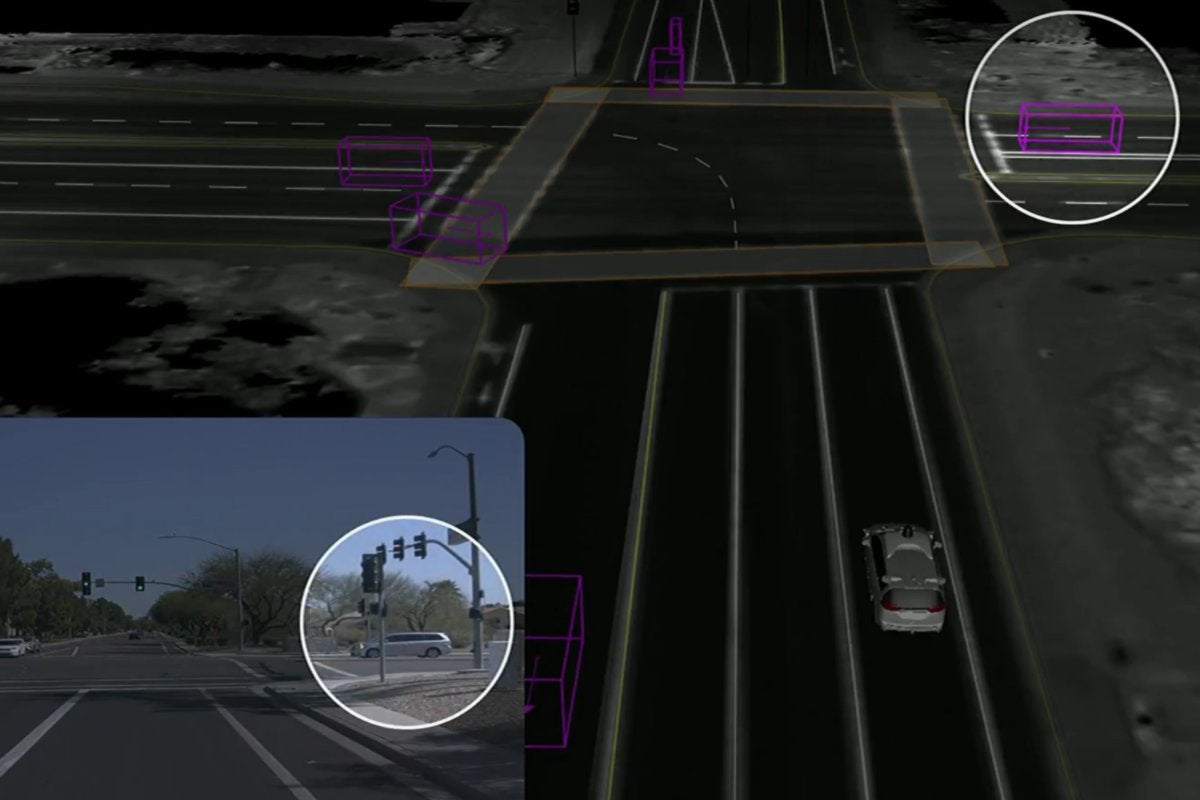

Waymo’s self-driving car detected this car running a red light and avoided it by slowing down as the car approached.

Waymo executive Dmitri Dolgov took the stage with more examples of how AI was used to train the self-driving cars. Here’s where the scary video comes in: a Waymo test car avoided a car that ran a red light right in front of it.

A schematic view showed how the self-driving car detected the red-light runner as it approached from the right side of the intersection.

Google

Waymo executive Dmitri Dolgov showed how the company’s self-driving car saw a car speeding toward it from the right, even before that car ran the red light.

I think any human driver paying enough attention would come to a full stop at this point. The self-driving car instead calculated the speeding car’s path, slowed down enough to miss it, and kept moving—after all, it still had the green light.

Technically, the self-driving car made a safe choice, but imagine being the passenger. You’d likely have felt a lot safer if the car had simply stopped. I’d bet there were Early Rider Project videos we didn’t see, in which riders were startled by a self-driving maneuver that seemed risky.

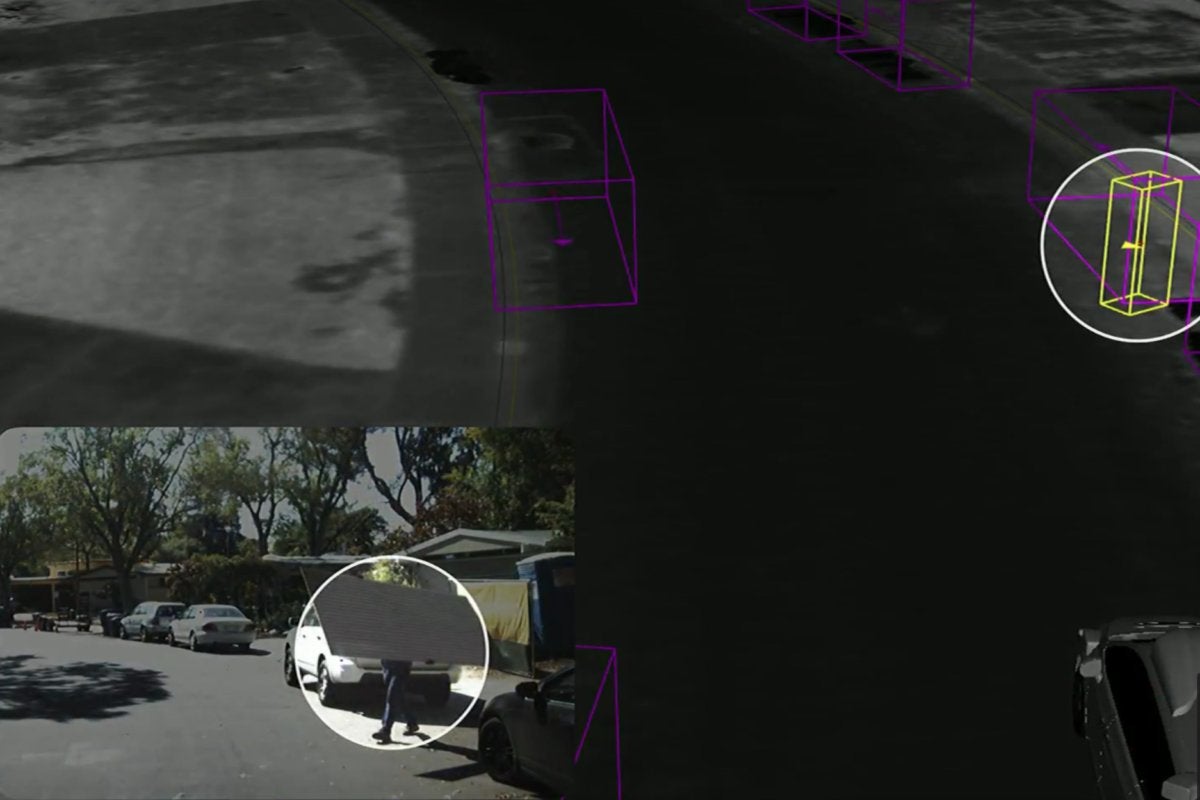

Yet there’s clearly a lot of safety built into Waymo’s cars. Dolgov talked about AI’s role in teaching the cars to detect pedestrians (a top-of-mind concern post-Uber). “Cars have lasers to measure distance and shape, and radars to measure their speed,” he explained. “By applying machine learning to this combo of sensor data we can detect pedestrians in all forms in real time.”

Google

Waymo’s self-driving technology has been refined so it can detect pedestrians even if they are hauling plywood or wearing dinosaur costumes.

Dolgov showed how pedestrians obscured by large objects, such as a sheet of plywood, or bulky costumes, such as a dinosaur suit, were still picked up as pedestrians by Waymo’s self-driving cars.

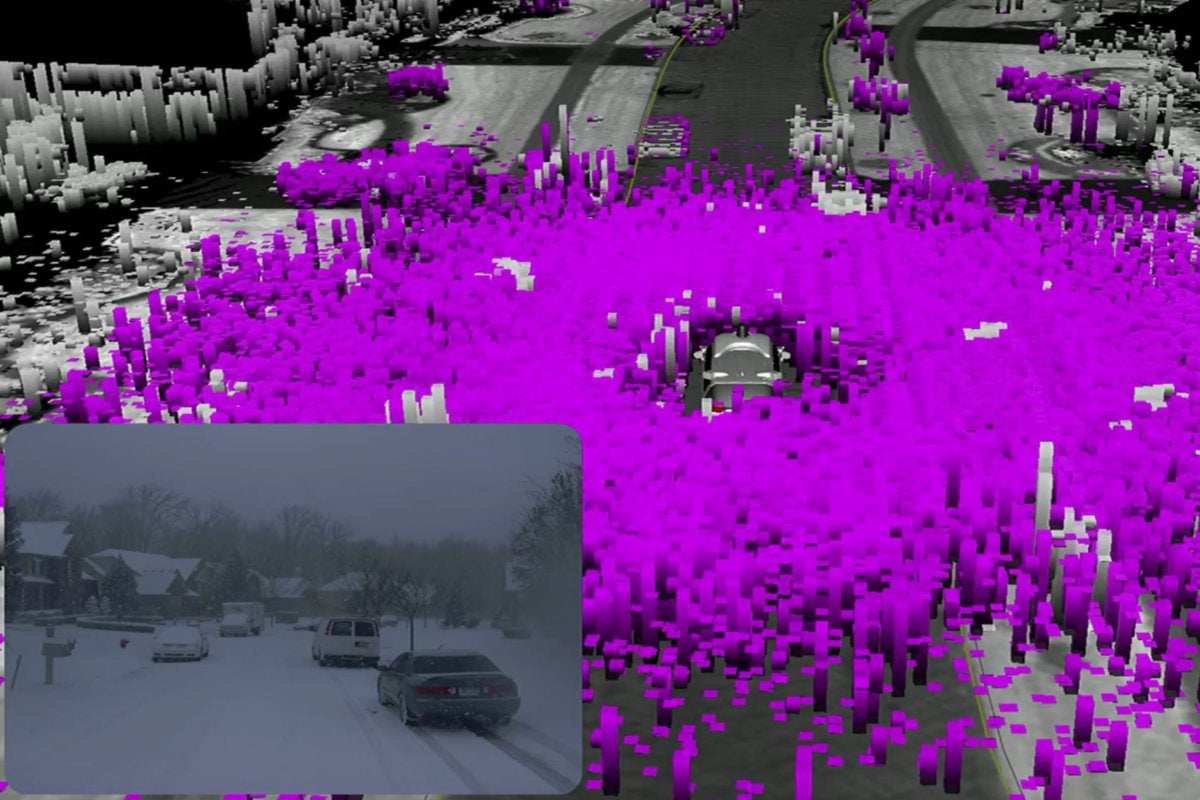

Waymo’s AI tackled another self-driving bugaboo: bad weather, where objects as small as raindrops or snowflakes can overwhelm the car’s sensors.

Google

Waymo’s self-driving technology was once hampered by the overwhelming task of identifying bad-weather elements, such as every single snowflake in a snowstorm.

Waymo used machine learning to remove the snowflakes from the view of a car driving in a blizzard, so it wouldn’t lose sight of the important objects in its range.

Google

Waymo uses Google’s deep neural networks to help it navigate even in bad weather. This image shows what the road looks like to a self-driving car after the driving snow is stripped from its sensor data.

Just don’t kill anyone, OK?

Waymo has made significant strides in training cars to drive themselves and appears to remain well ahead of the other companies in the same race. It’s unlikely the company would launch a public ride service unless it were very sure its cars were up to the task.

Its real advantage at this point is that the bar’s been reset. After Uber’s disaster, all Waymo’s cars have to do is not kill anyone. The rest is gravy. But riders have to feel safe as well as be safe, and self-driving cars, including Waymo’s, may still have some work to do in that department.