CPUs and GPUs are old news. These days, the cutting edge is all about NPUs, and hardware manufacturers are talking up NPU performance.

The NPU is a computer component designed to accelerate AI tasks in a power-efficient manner, paving the way for new Windows desktop applications with powerful AI features. That’s the plan, anyway.

All PCs will eventually have NPUs, but at the moment only some PCs have them. Here’s everything you need to know about NPUs and why they’re such a hot topic in the computer industry right now.

What is an NPU?

NPU stands for neural processing unit. It’s a special kind of processor that’s optimized for AI and machine learning tasks.

The name comes from the fact that AI models use neural networks. A neural network is, in layman’s terms, a vast mesh of interconnected nodes that pass information between them. (The whole idea was modeled after the way our own human brains work.)

An NPU isn’t a separate device that you buy and plug in (as you would with a GPU, for example). Instead, an NPU is “packaged” as part of a modern processor platform — like Intel’s Core Ultra, AMD’s Ryzen AI, and Qualcomm’s Snapdragon X Elite and Snapdragon X Plus. These platforms have a CPU along with an integrated GPU and NPU.

NPU vs. CPU vs. GPU: What’s the difference?

For many years now, computers have been running tasks on either the central processing unit (CPU) or graphics processing unit (GPU). That’s still how it works on AI PCs (i.e., computers with NPUs).

The CPU runs most of the tasks on the computer. But the GPU, despite its name, isn’t just for graphics and gaming tasks. The GPU is actually just optimized for a different type of computing task, which is why GPUs have been critical for non-gaming endeavors like mining cryptocurrency and running local AI models with high performance. In fact, GPUs are very good at such AI tasks — but GPUs are awfully power-hungry.

That’s where NPUs come into play. An NPU is faster than a CPU at computing AI tasks, but not as fast as a GPU. The trade-off is that an NPU uses far less power than a GPU when computing those same AI tasks. Plus, while the NPU handles AI-related tasks, the CPU and GPU are both freed up to handle their own respective tasks, boosting overall system performance.

Why use an NPU instead of a CPU or GPU?

If you’re running AI image generation software like Stable Diffusion (or some other AI model) on your PC’s hardware and you need maximum performance, a GPU is going to be your best bet. That’s why Nvidia advertises its GPUs as “premium AI” hardware over NPUs.

But there are times when you want to run AI features that might be too taxing for a regular CPU but don’t necessarily need the top-tier power of a GPU. Or maybe you’re on a laptop and you want to take advantage of AI features but don’t want the GPU to drain your battery down.

With an NPU, a laptop can perform local (on-device) AI tasks without producing a lot of heat and without expending inordinate battery life — and it can perform those AI tasks without taking up CPU and GPU resources from whatever else your PC might be doing.

And even if you aren’t interested in AI per se, you can still take advantage of NPUs for other uses. At CES 2024, HP showed off game-streaming software that uses the NPU for video-streaming tasks, freeing up the GPU to run the game itself. By using the NPU’s extra computing power, the streaming software doesn’t take up any GPU resources… and it’s much faster than using the CPU for the same type of task.

But what can Windows PC software do with an NPU, really?

With an AI PC, the NPU can be used by both the operating system and the apps that reside on the system.

For example, if you have a laptop with Intel Meteor Lake hardware, the built-in NPU will let you run Windows Studio Effects, which are AI-powered webcam effects that provide features like background blur and forced eye contact in any application that uses your webcam.

Microsoft’s Copilot+ PCs — the first wave of which are powered by Qualcomm Snapdragon X chips — have their own AI-powered features that use the NPUs built into that platform. For example, the Windows Recall feature that Microsoft delayed will require an NPU.

In November 2024, AMD and Intel PCs will get access to those same Copilot+ PC features that were previously exclusive to Qualcomm PCs, but only if they have the new AMD Ryzen AI 300 series or Intel Core Ultra Series 2 (Lunar Lake) processors.

And those are just the features built into Windows; app developers will also be able to use the NPU in a variety of ways. Don’t be surprised to see plug-ins for Audacity and GIMP that offer AI-powered audio and photo editing that runs on a computer’s NPU.

The possibilities are endless, but it’s still early days for the hardware so it’ll be a while before its full potential is realized.

Why do I need an NPU if my PC can already run AI software?

Most current applications that have AI features — including Microsoft’s Copilot chatbot — don’t utilize an NPU yet. Instead, they run their AI models on faraway cloud servers. That’s why you can run things like Microsoft Copilot, ChatGPT, Google Gemini, Adobe Firefly, and other AI solutions on any device, whether an old Windows PC, a Chromebook, a Mac, an Android phone, or something else.

But it’s expensive for those services to run their AI models on the cloud. Microsoft spends a lot of money churning through Copilot AI tasks in data centers, for example. Companies would love to offload those AI tasks to your local PC and reduce their own cloud computing expenses.

Of course, it’s not just about cost savings. You also benefit from the ability to run computationally heavy AI tasks on your local device. For example, those AI features will still work even when you’re offline, and you can keep your data private instead of uploading it all to cloud servers all the time. (That’s a big deal for companies, too, who want to maintain control over their own business data for privacy and security reasons.)

Which NPUs are available and how powerful are they?

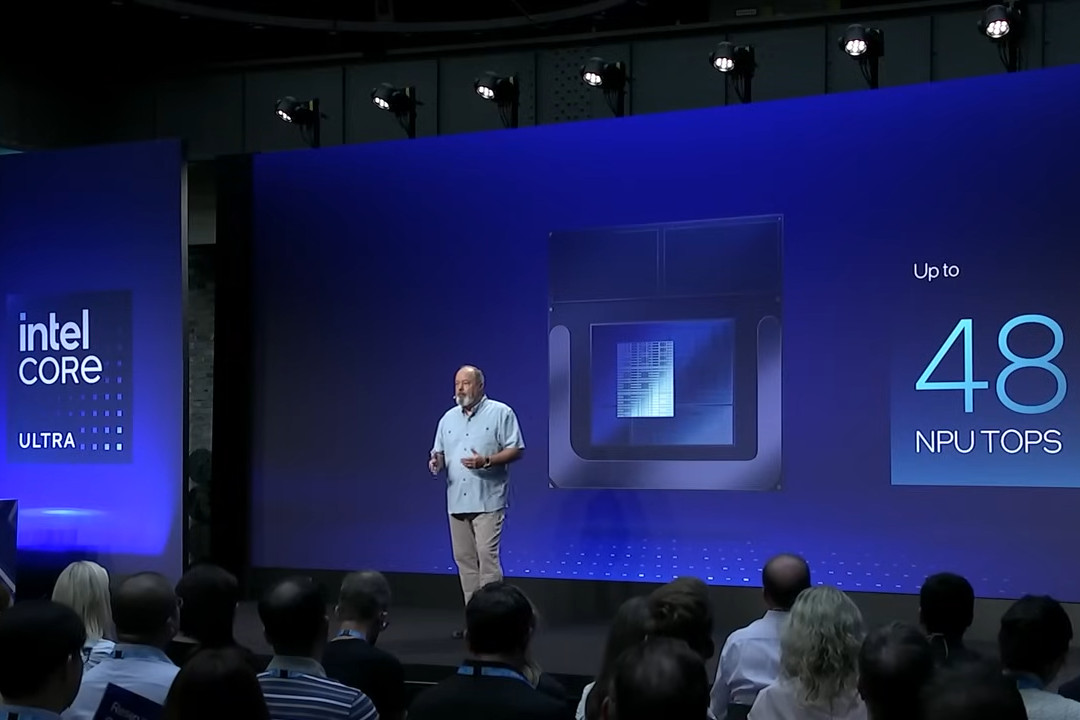

Intel

NPU performance is measured in TOPS, which stands for trillion operations per second. To give you a sense of what that means, a low-end NPU might only be able to handle 10 TOPS whereas PCs that qualify for Microsoft’s Copilot+ PC branding must handle at least 40 TOPS.
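To put those numbers in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes a made-up workload of 2 trillion operations and assumes the NPU runs at its full rated speed the whole time, which real software rarely manages. The function name `ideal_seconds` is just an illustration, not part of any real tool.

```python
# 1 TOPS = one trillion operations per second.
OPS_PER_TOPS = 10**12


def ideal_seconds(total_ops: float, tops: float) -> float:
    """Best-case time to finish `total_ops` at a peak rate of `tops` TOPS.

    This is a theoretical floor: it ignores memory speed, software overhead,
    and everything else that keeps hardware below its rated peak.
    """
    if tops <= 0:
        raise ValueError("tops must be positive")
    return total_ops / (tops * OPS_PER_TOPS)


# Hypothetical workload (an assumed figure, not a measurement).
workload_ops = 2 * 10**12

for tops in (10, 40):
    print(f"{tops} TOPS: {ideal_seconds(workload_ops, tops):.3f} s at best")
```

On paper, the 40 TOPS part finishes the same job in a quarter of the time the 10 TOPS part needs. Treat that as a ceiling on the speedup rather than a promise.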

As of fall 2024, the following NPUs are available:

- Intel Core Ultra Series 1 (Meteor Lake): Intel’s first-generation Core Ultra NPU can deliver up to 11 TOPS. It’s too slow for Microsoft’s Copilot+ PC features, but it does work with Windows Studio Effects and some third-party applications.

- Intel Core Ultra Series 2 (Lunar Lake): Intel’s Lunar Lake chips will include an NPU with up to 48 TOPS of performance, exceeding Copilot+ PC requirements.

- AMD Ryzen Pro 7000 and 8000 Series: AMD delivered NPUs on PCs before Intel did, but the NPUs in these processors are too slow for Copilot+ PC features, with up to 12 and 16 TOPS of performance.

- AMD Ryzen AI 300 Series: The NPUs in the latest AMD Ryzen AI 300 series processors can deliver up to 50 TOPS of performance, more than enough for Copilot+ PCs.

- Qualcomm Snapdragon X Elite and Snapdragon X Plus: Qualcomm’s Arm-based hardware includes a Qualcomm Hexagon NPU that’s capable of up to 45 TOPS.
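The list above boils down to a simple comparison against Microsoft’s 40 TOPS bar. The short Python sketch below uses only the peak figures quoted in this article (taking the higher 16 TOPS figure for the older Ryzen chips). The names `NPU_PEAK_TOPS` and `meets_copilot_plus` are made up for illustration, and the check looks at the NPU figure alone, not at anything else a PC might need to earn the Copilot+ label.

```python
# Minimum NPU performance for Copilot+ PC branding, as stated in this article.
COPILOT_PLUS_MIN_TOPS = 40

# Peak NPU figures quoted in the list above (TOPS).
NPU_PEAK_TOPS = {
    "Intel Core Ultra Series 1 (Meteor Lake)": 11,
    "Intel Core Ultra Series 2 (Lunar Lake)": 48,
    "AMD Ryzen Pro 7000 and 8000 Series": 16,  # higher of the two quoted figures
    "AMD Ryzen AI 300 Series": 50,
    "Qualcomm Snapdragon X Elite / X Plus": 45,
}


def meets_copilot_plus(tops: float, minimum: float = COPILOT_PLUS_MIN_TOPS) -> bool:
    """Return True if an NPU's peak TOPS reaches the Copilot+ threshold."""
    return tops >= minimum


# Keep only the platforms whose NPU clears the bar.
qualifying = [name for name, tops in NPU_PEAK_TOPS.items() if meets_copilot_plus(tops)]

for name in qualifying:
    print(f"{name}: {NPU_PEAK_TOPS[name]} TOPS")
```

Only Lunar Lake, Ryzen AI 300, and Snapdragon X make the cut, which matches the descriptions in the list.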

It’s worth noting that NPUs aren’t just on PCs. Apple’s Neural Engine hardware was one of the first big splashy NPUs to get marketing attention. Google’s Tensor platform for Pixel devices also includes an NPU, while Samsung Galaxy phones have NPUs, too.

Bottom line: Should you get a PC with an NPU right now or wait?

Honestly, it’s risky being on the bleeding edge.

If you went out of your way to get a Meteor Lake laptop in hopes of future-proofing your PC for AI features, you actually got burned when Microsoft later announced that Meteor Lake NPUs were too slow for Copilot+ PC features. (Intel disagreed, pointing out that you still get all the features those laptops originally shipped with.)

The silver lining to that? Copilot+ PC features aren’t that interesting yet. And especially with the delay of Windows Recall, there just isn’t much remarkable about Copilot+ PCs. Most of the biggest AI tools — ChatGPT, Adobe Firefly, etc. — don’t even use NPUs at all.

Still, if I were buying a new laptop, I’d want to get an NPU if possible. You don’t actually have to go out of your way to get one; it’s just something that comes included with modern hardware platforms. And those modern processor platforms have other major benefits, like sizable battery life gains.

On the other hand, those fast NPUs are only on the latest laptops right now, and you can often find great deals on older laptops that are nearly as fast as the latest models. If you spot a previous-generation laptop that’s heavily discounted, it may not make sense to shell out tons more cash just for an NPU, especially if you don’t have any AI tools you plan to run.

As of this writing, most big AI tools still run in the cloud (or at least offer that as an option), so you’ll be able to run them on any Windows laptop, Chromebook, Android tablet, or iPad.

For desktops, the NPU situation is different. Intel’s desktop CPUs don’t have NPUs yet. You could hunt down an AMD desktop chip with a Ryzen AI NPU, but AMD’s Ryzen 7000 and 8000 series chips don’t support Copilot+ PC features anyway. So, if you’re putting together a desktop PC, set aside the NPU for now. It just isn’t that important yet.

Looking for a PC with a high-performance NPU? Consider Arm-based Windows laptops like the Surface Laptop 7 and AMD Ryzen AI 300 series laptops like the Asus ProArt PX13. Plus, stay tuned for our reviews of Lunar Lake-powered Intel laptops when they arrive. Until then, catch up with all the AI PC jargon you need to know.
